Leaderboard

Popular Content

Showing content with the highest reputation on 12/17/20 in all areas

-

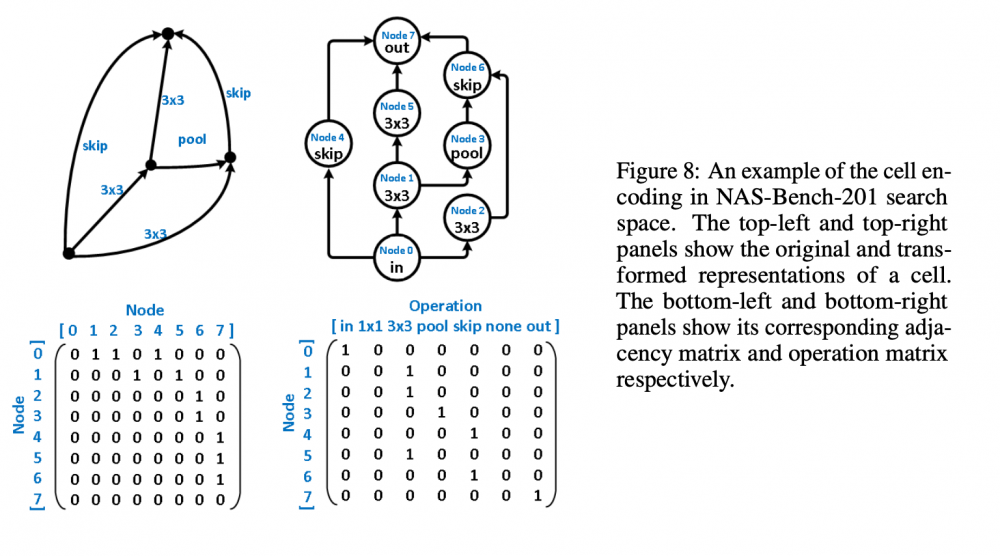

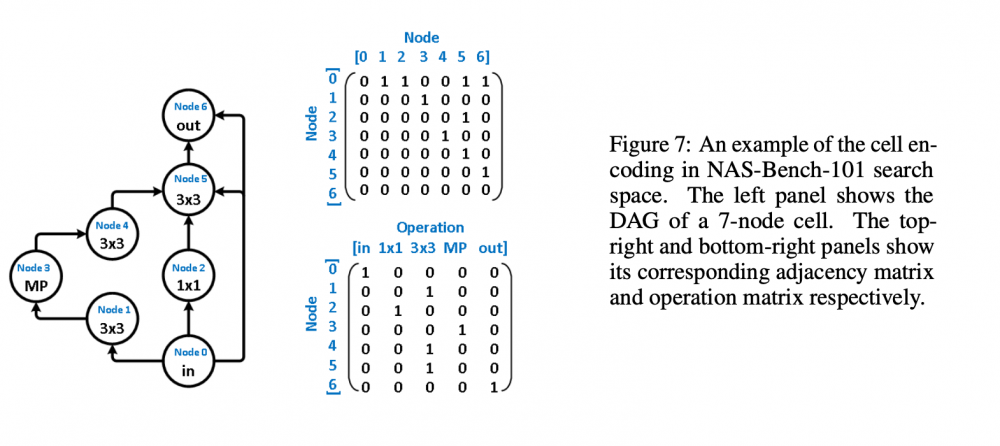

Greetings everyone, I am new to this forum and am excited to be here. Another forum that I frequent - The Fossil Forum - seems to use the same software or platform as this one; its familiarity I find comforting, alongside the fact that there are, supposedly, many people interested in science here. Now for the reason I am creating this post. My topic here concerns a paper I am trying to reproduce for a challenge. I have about a month remaining before I need to finish my reproduction and am a ways off from having achieved a solid foundation from which I can begin running the tests I need to run. The paper I am trying to reproduce - https://arxiv.org/pdf/2006.06936v1.pdf - is "Does Unsupervised Architecture Representation Learning Help Neural Architecture Search?" and employs a method of pre-trained embeddings, as opposed to supervised embeddings, to cluster the latent search space in such a way as to slightly increase the efficiency of the search algorithms used later on (this is all under the umbrella or context of Neural Architecture Search). In the authors' words, "To achieve this, arch2vec uses a variational graph isomorphism autoencoder to learn architecture representations using only neural architectures without their accuracies. As such, it injectively captures the local structural information of neural architectures and makes architectures with similar structures (measured by edit distance) cluster better and distribute more smoothly in the latent space, which facilitates the downstream architecture search." I have had many troubles thus far in tackling this reproduction: my understanding of the mathematics, my ability to use Google Cloud's computing services, my creativity in devising tests of robustness, and my judgment in selecting which parts of their code base https://github.com/MSU-MLSys-Lab/arch2vec to port from their implementation in PyTorch to TensorFlow.
Also, if I do everything I need to, I still have to write it up in a scholarly fashion, which I do not think will be too difficult, but which I think will require much editing, and my available time is but a month. So, I come to you today to take a look at one of these troubles, my understanding of the mathematics. I will introduce the first part of their paper that I think is important, the Variational Graph Isomorphism Autoencoder. I think that before I do this, a recap of Neural Architecture Search (NAS) is due (I am certainly not qualified to introduce this but will do the best I can with the knowledge available to me). In NAS, the goal is to generate and/or find the best neural architecture for a particular dataset. There is also the goal of searching for the best performing architecture amongst a dataset of architectures. All this involves many steps. First, a neural architecture must be represented in some way. How would you naturally break down a CNN? Well, researchers use graph cells, which have nodes consisting of operations and edges that connect nodes to one another. The operations can be something such as a 3x3 convolution or 3x3 max-pooling. A single architecture consists of some number of these nodes (it depends on the particular dataset of architectures, with the two important ones in the paper being NAS-Bench-101 and NAS-Bench-201). From the former dataset, here is a representation: And from the latter dataset: Mathematically, the "node by node" matrix is the upper triangular adjacency matrix \(\mathbf{A} \in \mathbb{R}^{N \times N}\) and the "node by operation" matrix can be represented by a one-hot operation matrix \(\mathbf{X} \in \mathbb{R}^{N \times K}\), where \(N\) is the number of nodes and \(K\) is the number of predefined operations (remember, like a 3x3 convolution).
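As a concrete illustration of the two matrices just described, here is a minimal sketch in Python with NumPy. The operation list `OPS`, the helper `encode_cell`, and the 4-node cell are all made up for illustration; they are not taken from the paper or from the NAS-Bench datasets.

```python
import numpy as np

# Hypothetical operation vocabulary (K = 5); the names are illustrative only.
OPS = ["input", "conv3x3", "conv1x1", "maxpool3x3", "output"]


def encode_cell(edges, node_ops, ops=OPS):
    """Return (A, X) for a cell given directed edges (i, j) with i < j.

    A is the N x N upper-triangular adjacency matrix,
    X is the N x K one-hot operation matrix.
    """
    n = len(node_ops)
    A = np.zeros((n, n), dtype=np.float32)
    for i, j in edges:
        if not i < j:
            raise ValueError("edges must go from a lower to a higher node index")
        A[i, j] = 1.0

    X = np.zeros((n, len(ops)), dtype=np.float32)
    for node, op in enumerate(node_ops):
        X[node, ops.index(op)] = 1.0
    return A, X


# A made-up 4-node cell: input -> conv3x3 -> maxpool3x3 -> output, plus a skip edge.
edges = [(0, 1), (1, 2), (2, 3), (0, 3)]
node_ops = ["input", "conv3x3", "maxpool3x3", "output"]
A, X = encode_cell(edges, node_ops)

print(A)
print(X)
```

Because every edge runs from a lower-numbered node to a higher-numbered one, all nonzero entries of `A` sit strictly above the diagonal, and each row of `X` contains exactly one 1.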
One thing this group of researchers does is augment the adjacency matrix -> \(\tilde{\mathbf{A}} = \mathbf{A} + \mathbf{A}^T\) to allow for bidirectional information flow, to "transfer original directed graphs into undirected graphs". I do not really understand this and would appreciate a description of directed and undirected graphs in the context of isomorphisms. Moving on, the rest of NAS consists of developing some embeddings that a search algorithm (random search, the reinforcement learning REINFORCE algorithm, Bayesian optimization) can use to find the best performing architectures in the architecture datasets. The researchers here use a Variational Graph Isomorphism Encoder: Which I am having some trouble mentally turning into TensorFlow code. I feel somewhat lost now, but will probably return to this post with a more strategic mindset in a little bit. For now, are there any resources on graph theory, graph isomorphism autoencoders, or autoencoders that you think would benefit me? Alternatively, does anyone have a broad explanation for the intuition behind graph isomorphism autoencoders and for why they are used here instead of some other method? Thanks to everyone reading this and I hope I can find interesting things to contribute in the future, hopefully in a less verbose manner. Have a nice day and stay safe!

2 points
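On the directed/undirected question, a small NumPy sketch may help. With the upper-triangular \(\mathbf{A}\), the product \(\mathbf{A}\mathbf{H}\) only lets a node collect features from the nodes it points to; with \(\tilde{\mathbf{A}} = \mathbf{A} + \mathbf{A}^T\), every node collects from both its predecessors and its successors. The layer below is a generic GIN-style update, \(\mathrm{ReLU}\big(((1+\epsilon)\mathbf{H} + \tilde{\mathbf{A}}\mathbf{H})\mathbf{W}\big)\), with a single random weight matrix standing in for the MLP. The names `symmetrize` and `gin_layer`, the sizes, and the weights are assumptions for illustration, not the authors' implementation. The check at the end shows the property behind the "isomorphism" in the name: relabelling the nodes only reorders the rows of the output.

```python
import numpy as np


def symmetrize(A):
    """Turn a directed adjacency matrix into an undirected one: A + A^T."""
    return A + A.T


def gin_layer(A_tilde, H, W, eps=0.0):
    """One GIN-style update: sum own and neighbour features, then a linear map + ReLU."""
    aggregated = (1.0 + eps) * H + A_tilde @ H
    return np.maximum(aggregated @ W, 0.0)


rng = np.random.default_rng(0)

# Made-up 4-node directed cell (upper triangular) with one-hot features.
A = np.array(
    [[0, 1, 0, 1],
     [0, 0, 1, 0],
     [0, 0, 0, 1],
     [0, 0, 0, 0]],
    dtype=np.float64,
)
X = np.eye(4)
W = rng.normal(size=(4, 8))  # stand-in for the MLP weights

H = gin_layer(symmetrize(A), X, W)

# Relabel the nodes with a permutation matrix P and run the same layer again.
perm = np.array([2, 0, 3, 1])
P = np.eye(4)[perm]
A_perm = P @ A @ P.T          # same graph, different node order
X_perm = P @ X
H_perm = gin_layer(symmetrize(A_perm), X_perm, W)

# The node embeddings are the same up to that relabelling,
# so a sum over nodes gives an identical graph-level vector.
print(np.allclose(H_perm, P @ H))
print(np.allclose(H_perm.sum(axis=0), H.sum(axis=0)))
```

In the directed matrix the last row is all zeros, so the output node would aggregate nothing from its neighbours; symmetrizing removes that asymmetry.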

-

In fact, the Python code that I inserted above is quite different from the methods employed in the paper. The code I added was a very rudimentary illustration of what something written using Keras may look like. Nonetheless, I agree with @Ghideon that @zak100 should attempt to piece together which major components of Keras correspond to the methods used in the paper. As an example, if the paper mentioned a Convolutional Neural Network, then two appropriate answers would be to look at (1) a Google search for "keras layers" -> https://keras.io/api/layers/ -> look at the convolutional layers (WHAT IT LOOKS LIKE ON THE WEBSITE) or (2) a Keras CNN tutorial -> https://victorzhou.com/blog/keras-cnn-tutorial/

1 point

-

For the paper Zak100 provided in the opening post, my understanding was that they provided experimental evidence that their approach was working and had good performance. Hence I recommended checking first that it was possible to get hold of data. But your contributions allow for a shift of focus, as you suggested above; there are now multiple ways to get data and possible alternative approaches. I support your approach to start with a prototype model, unless Zak still wants to pursue exactly the approach described in the paper in the OP. I agree. @zak100 Here is a quick attempt at providing a little quiz (I hope @The Mule corrects me if I get this wrong): The model in the Keras code example provided by The Mule differs slightly from your initial question and the approach in the paper. Which Keras class may be a reasonable starting point for the kind of neural network that the paper* in your first post uses? *) The paper in the opening post: Towards Safer Smart Contracts: A Sequence Learning Approach to Detecting Security Threats

1 point

-

We don't know what the Conscious experience is for Blind people. Blindness is a degenerate case of Vision or non Vision. All we can do for now is explore and figure out what Redness is for normally developed Sighted people that can see Redness. I See Redness but I don't know what it is. It is some sort of Conscious Phenomenon. I say the Conscious Experience of Redness, to emphasize that we are talking about a Phenomenon of the Mind. So how about if I just say Redness. Can you see the Color Red? That's what I'm talking about. I like to say Redness instead of Red. I have found that if I talk about Red, people start talking about Wavelengths of Electromagnetic Light. If I say Redness it makes them stop and think a little Deeper about the Perception of the Red or the Redness of the Red.

1 point

-

Of course all safety measures have to be taken into account. Concentrated hydrochloric acid can release HCl gas. The reaction produces hydrogen. Good ventilation is necessary. Also PPE has to be worn: goggles, gloves, maybe a mask.

1 point

-

I'm a little reluctant to butt in, but I'm slightly concerned that the OP seems somewhat new to chemistry (I don't have much hands-on experience but I've done a few chem courses in college and watched a lot of chem videos), so I figured, just in case OP plans on doing this indoors, I should ask whether or not rebar releases toxic fumes when dissolved in hydrochloric acid like silver does in nitric. Because if it does, it might be an idea to do this outdoors or in a fume hood.

1 point

-

What do you mean by conscious experience? Do dogs have conscious experiences? Do amoebas have conscious experiences? Do trees have conscious experiences?

1 point

-

1 point

-

I unfortunately am not of any help, but am curious to see how this advances and hope some people can help you out! Well written account of what is up, although I do think you may need to elaborate more on exactly what type of help you want. The resources on various topics you ask for may be too broad (but you never know!). It would probably also help if you explain in more detail what you have done so far regarding the TensorFlow implementation and where you can't see a good way of doing it. Otherwise it may feel to some members that you are asking them to walk you through from start to finish (but as long as you continue providing well written posts that probably won't happen). Good luck!

1 point

-

I find the topic interesting! I'll try to read through the material and see if I can contribute. I must say though that this is outside my area of expertise so I can't guarantee that I'll be successful within a reasonable time. I do not have enough experience to intuitively address your questions. That said, there are plenty of members quite skilled in mathematics. If necessary we may be able to extract some mathematics-specific question and post it in that section of the forums.

1 point

-

Ahh, but Kirchhoff and Wien just had a set of rules. And Stefan-Boltzmann, as well as Rayleigh-Jeans, didn't work, and headed for infinity at high frequencies ( UV catastrophe ). Planck was the first to accurately describe Black Body radiation … in 1900. I wonder... The fact that we all know QM to some degree leads us to recommend textbooks which are fairly advanced. But to a noob, a lot of material is left out ( or taken for granted as common knowledge ), leading to the confusion that prevails among people new to the subject, or the general population. While the historical approach includes knowledge which is later discarded, a fully modern view leaves a lot of gaps. Maybe a textbook which starts from basic principles, and gives a theoretical ( not historical ) foundation before tackling advanced material, is the best choice.

1 point
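To make the "headed for infinity at high frequencies" remark concrete, here is a small sketch comparing the Rayleigh-Jeans spectral radiance, \(B_\nu = 2\nu^2 k T / c^2\), with Planck's law, \(B_\nu = \frac{2 h \nu^3}{c^2} \frac{1}{e^{h\nu/kT} - 1}\). The temperature and the sample frequencies are arbitrary choices for illustration.

```python
import math

H_PLANCK = 6.62607015e-34   # Planck constant, J s
C_LIGHT = 2.99792458e8      # speed of light, m/s
K_BOLTZ = 1.380649e-23      # Boltzmann constant, J/K


def rayleigh_jeans(nu, T):
    """Classical spectral radiance; grows as nu**2 without bound."""
    return 2.0 * nu**2 * K_BOLTZ * T / C_LIGHT**2


def planck(nu, T):
    """Planck spectral radiance; the exponential suppresses high frequencies."""
    x = H_PLANCK * nu / (K_BOLTZ * T)
    return 2.0 * H_PLANCK * nu**3 / C_LIGHT**2 / math.expm1(x)


T = 5000.0  # kelvin, arbitrary illustrative temperature
for nu in (1e11, 1e13, 1e15, 1e16):
    ratio = planck(nu, T) / rayleigh_jeans(nu, T)
    print(f"nu = {nu:.0e} Hz  Planck / Rayleigh-Jeans = {ratio:.3e}")
```

At low frequencies the two expressions agree closely, while at high frequencies the classical formula keeps growing and Planck's falls off exponentially.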

-

Once again, in general terms autism isn’t a condition that needs to be “cured” - it’s a difference in brain connectivity, hence “neurodivergence”. Suggesting to someone on the spectrum that they need to be “cured” so that they can better fit into a neurotypical world is not just unhelpful, it’s deeply disrespectful. This really sums up everything that is wrong with the medical establishment’s current approach to autism. Furthermore, you cannot “fix” autism any more than you can fix other forms of neurodivergence such as Down syndrome - these are not learned or acquired traits, they are differences in brain structure. Instead, the thing that would be helpful to people on the spectrum who struggle with sensory issues, executive functioning, social interaction etc is to give them specific supports to develop techniques that will help them address their specific challenges in everyday life. For example, if someone struggles with organising and coordinating everyday tasks (quite common for autistics), then there are specific organisational techniques that can help with this, and these can be learned. People who are more severely impacted could be offered “assisted living” arrangements, and so on. I am of course aware that there are certain very severe manifestations of autism, and people affected by those will struggle greatly. But even here, the answer is to offer the specific supports that they need, not try to somehow turn them into neurotypicals - which isn’t possible. Apart from the fact that I don’t know what FMT even is, the answer is no, I wouldn’t. The reason is simple - I have made peace with being on the spectrum, and I am very content this way, even given the various challenges I face in everyday life. If I was somehow magically given the opportunity to be reincarnated, and given the choice of being ND or NT, I would choose to be on the spectrum again, without a moment’s hesitation.
I can’t comment on this, as I don’t know what it is like to be NT. Sure...it’s just that my idea of relaxation will likely be very different from yours. Generally speaking, relaxation to me means silence and solitude; quiet contemplation and investigation; or intellectual stimulation by working out some mathematical/physical/philosophical problem, just for the fun of it. I often spend extended periods out in nature, in some remote and beautiful place far away from people with just my tent and my eReader. Or I thru-hike long-distance trails. Or I volunteer for some social cause that is meaningful to me. After I do these things, I feel a sense of peace, insight and meaning. What I would never do is spend my relaxation time in a crowded and noisy place full of people, while intentionally altering my mind through ingesting intoxicating substances. How anyone could possibly consider this “relaxing” or in any way meaningful is so far beyond me that I am not even trying to understand it. Each to their own, I guess. Yes, this seems to be fairly common among people on the spectrum, but it’s by no means a universal trait. I don’t experience this at all, for example, and neither do most of the other autistics I know. The opposite - being overly and unduly fearful and cautious - appears to be more common. Precisely. Which does not mean we trivialise the very real challenges people on the spectrum face...but yes. No need to apologise, I didn’t take offence at all. Yes, because being neurodivergent in a world designed by and for NTs is no stroll in the park, since most NTs don’t appear to possess enough metacognitive introspective awareness to see their very own social conventions as being social conventions, but mistake them for solid unchanging reality.
This makes it very hard to accept anyone who does not conform to those conventions...and since too many NTs also don’t appear to possess the cognitive empathy required to tell that they are causing hurt and suffering through their rejection of those who don’t fit in, a proportionally higher suicide rate amongst people on the spectrum is the inevitable consequence. Am I the only one who sees the sad irony here? This is true, but the reverse is just as true - the most severe forms of autism also affect only a small proportion of people on the spectrum. The vast majority of us sit somewhere in the middle. Honestly - can you not see how a condescending statement like this makes an autistic person feel? And that’s after you claiming earlier that it is us autistics who have no sense of cognitive empathy...go figure 🤨 Generalisations of this nature are not helpful to anyone, least of all to autistics themselves. What are you really trying to tell us here? I usually hold back when discussing autism with NTs, but on this occasion I’ll be perfectly blunt with you: unless you are on the spectrum yourself, and have close relationships with other people on the spectrum, you are not in a position to make any claims about what it is like to be autistic, and what autistic people really need and want (hint: mostly it’s just being accepted for who we are, and given some basic supports for the challenges we face). Academic study of this subject does not qualify you to claim you know what it is like being autistic, or how “disabling” you think it is. I can tell you, based on some of your remarks here, that you know less than you think you know about the actual experience of it. So I strongly suggest you dial it back a notch, because the direction this thread is now going is not a good one.

1 point

-

1 point

-

Hi @The Mule and @Ghideon - Thanks for the discussions and for providing me the essential steps for creating a Python model. <bench-marking the performance of some baseline or prototype model that you can train on smaller datasets> Yes, you are right. First I have to come up with some prototype model. <Once the major limitation becomes how much data you have, then I would consider the problem of getting a ton of data to be your first priority. However, at the moment,> Yes, I would appreciate your help as much as possible. I got some idea. I hope once I read the paper, I will know more. < I would think it is more crucial for you to read the articles that me and @Ghideon have discussed. > Surely I will look at the articles which you (@The Mule) and @Ghideon have pointed to. God bless you. Zulfi.

1 point

-

! Moderator Note SteveKlinko, this obviously isn't how an experiment should be run to be trustworthy. It's not acceptable as support for the claims you've made in the thread. Results should be repeatable. The rest is just repeating reasoning that hasn't persuaded anyone after four pages. This doesn't meet the requirements of our Speculations section, so I have to shut this down. Don't bring this topic up again.

1 point

-

Hello @zak100, I think a more important step to take before assembling 100,000 instances of data is benchmarking the performance of some baseline or prototype model that you can train on smaller datasets. Once the major limitation becomes how much data you have, then I would consider the problem of getting a ton of data to be your first priority. However, at the moment, I would think it is more crucial for you to read the articles that @Ghideon and I have discussed. With regard to me assisting you through this process, I meant that I can provide a simple model for you in Keras, not a model for SC vulnerability detection, but rather one to just minimally acquaint you with Keras. Thank you @Ghideon for showing me the way to write code on here. Here is the general model pipeline in Keras:

1. Create the instance of your model
2. Compile the model
3. Fit your data to the model
4. Evaluate your model's performance
5. Predict new batches of data or datasets using the saved model weights

```python
# Example from some project I did.
# data = a pandas dataframe with features from a
import pandas as pd
import tensorflow as tf
import numpy as np
import itertools as it
import matplotlib.pyplot as plt
from sklearn.linear_model import LinearRegression
from sklearn.linear_model import ElasticNet
from sklearn.linear_model import Lasso
from sklearn.linear_model import SGDRegressor
from sklearn.preprocessing import normalize
from sklearn.preprocessing import MinMaxScaler
from sklearn.metrics import mean_squared_error
from tensorflow import keras
from tensorflow.keras import layers

# the 'target' is what you want to predict
# since you do not have the data I used here, this code will not actually do anything when run,
# it is purely for illustrative purposes only
target = data.pop('Wattage')
data = MinMaxScaler().fit_transform(data.values).reshape(len(data), 5)

# your data would go in the inn
# features are the first four scaled columns, the label is the last scaled column
dataset = tf.data.Dataset.from_tensor_slices((data[:, :4], data[:, -1].reshape(-1, 1)))

# create the training, testing, and validation datasets (70% / 15% / remainder)
train_size = int(len(data) * 0.7)
test_size = int(len(data) * 0.15)
train_dataset = dataset.take(train_size)
test_dataset = dataset.skip(train_size)
val_dataset = test_dataset.skip(test_size)
test_dataset = test_dataset.take(test_size)

# Keras expects batched datasets; without batching, each element is a single
# sample with no batch dimension. The batch size of 32 is an arbitrary choice.
train_dataset = train_dataset.batch(32)
val_dataset = val_dataset.batch(32)
test_dataset = test_dataset.batch(32)

# THIS IS THE IMPORTANT PART, FOR BUILDING A MODEL
# a keras Sequential model with three dense layers, the last being the output layer
# in this case we put a '1' for the 'units' parameter because we are predicting one target
model = keras.Sequential(
    [
        layers.Dense(units=32, activation='relu', name='layer1'),
        layers.Dense(units=64, activation='relu', name='layer2'),
        layers.Dense(units=1, name='end'),
    ]
)

# compile the model with the correct optimizer, loss, and metrics
model.compile(
    optimizer='adam',
    loss='mse',  # tf.keras.losses.MeanSquaredError(reduction="auto", name="mean_squared_error"),
    metrics=['mse']
)

# fit your model to the training dataset and specify the validation dataset
model.fit(
    x=train_dataset,
    epochs=20,
    validation_data=val_dataset,
    verbose=1,
    callbacks=[tf.keras.callbacks.EarlyStopping(patience=5)],
    shuffle=False,
)

# evaluate the model's performance (early stopping only matters during training)
model.evaluate(
    x=test_dataset,
    verbose=1,
)

# save model for future use, so you do not have to retrain it
# (newer Keras versions may require a file extension such as '.keras' on this path)
model.save(
    filepath='/tmp/trained_on_cleaned_02',
)
```

1 point

-

John von Neumann. There is no other book that has been cited more times by people who haven't read it. After it was published, QM would never be the same.

1 point

-

Hello zak100, I think I can help you with the implementation of statistical models for what you want, for "Smart Contract (SC) related vulnerability detection". However, before I do this, I am going to need you to describe more about smart contract vulnerability detection. What exactly is this? A machine learning pipeline, or more generally, a modeling pipeline, begins with you assembling or locating a dataset that captures the information you desire to use. So, do you know of any datasets with the vulnerability levels of smart contracts quantified? Next, you'd proceed by implementing or employing a statistical model. In this case, if the vulnerability levels are on a scale of 1-10 or something like this, we'd use a multi-class classification model, and if they are continuous, meaning that they are some float value like 12.3 or 69.87, we'd use a regression model. In the case that the data are a time series, we then might employ an LSTM. Remember that Neural Networks are not always necessary and may even be suboptimal if we don't have that much data. Once we have our model, we can adjust and fine-tune it to produce the best results while not overfitting, which is when the model learns the dataset it was given too well and reduces how well it generalizes, or phrased differently, how well it performs on new datasets. Using Google search, I have come across this website: https://smartbugs.github.io/ It has listed several Smart Contract datasets, although I am uncertain if these are the type you are searching for. There are also research papers that came up, such as https://www.ijcai.org/Proceedings/2020/0454.pdf and https://alfagroup.csail.mit.edu/sites/default/files/documents/2020. Exploring Deep Learning Models for Vulnerabilities Detection in Smart Contracts.NLeSimple-Master_Thesis.pdf . These might have code to them that you can use. If you search on https://paperswithcode.com/ for "smart contract vulnerabilities" I am hopeful that a few papers would come up.
Perhaps you can clone their repositories and play around with their code. I mean, if what you are looking to do already exists, then there is little more efficient than simply using the existing implementation, unless you are trying to reinvent the wheel, which does not seem to be the case here. A good resource for learning about CNNs from a mathematical standpoint is https://cs.nju.edu.cn/wujx/paper/CNN.pdf and for LSTMs is https://colah.github.io/posts/2015-08-Understanding-LSTMs/ . I do admit that I have not read these yet in full and absorbed their significance. Scikit-Learn and Keras would probably be good for you for ML implementation in Python. Also, how do I write a code block on this website? Once I learn the answer to the above question I will write a simple Keras model in Python for you to see.

1 point
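The overfitting point made in this post can be seen on a toy problem. The sketch below is an illustration with made-up synthetic data (a noisy straight line), using NumPy polynomial fits rather than a neural network; the degrees, noise level, and split are arbitrary assumptions. A more flexible model always fits the training points at least as well, but that says nothing about how it does on held-out points.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: a straight line plus noise (purely illustrative).
x = rng.uniform(-1.0, 1.0, size=40)
y = 2.0 * x + rng.normal(scale=0.3, size=x.size)

# Hold out half of the points to measure generalization.
x_train, y_train = x[:20], y[:20]
x_test, y_test = x[20:], y[20:]


def mse(coeffs, xs, ys):
    """Mean squared error of a fitted polynomial on the given points."""
    return float(np.mean((np.polyval(coeffs, xs) - ys) ** 2))


results = {}
for degree in (1, 9):
    coeffs = np.polyfit(x_train, y_train, degree)
    results[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_test, y_test))
    print(f"degree {degree}: train MSE = {results[degree][0]:.4f}, "
          f"test MSE = {results[degree][1]:.4f}")
```

Comparing the training and held-out errors for the two degrees is the same check one would run on a neural network with a validation set.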

-

! Moderator Note You really need to stop bringing these sources up outside of the Religion/Philosophy sections. They aren't scientific at all. Isn't the real challenge keeping the mind functioning along with the body? Who wants to live past 100 if it's all retirement village and senility after 85? How advanced is the science when it comes to being spry and sharp-witted when you're 120? Of course science can make us live longer, but can it make those later years more worthwhile? I'd settle for sound mind alone, really. Pull me around in a wagon if you have to, as long as I can think straight and tell the young folks all about it.

1 point

-

1 point

-

1 point

-

You cannot know, for example, if a Blind person is incorporating Visual Experiences in with their Hearing experiences. If they were, then they probably could not possibly know that they are experiencing Conscious Light phenomena with their Auditory Experience. Just a thought, because we cannot know what their Experience is and they cannot properly tell us. Conscious Experiences are not explainable in language. They must be Experienced.

-1 points

-

The "Planck Satellite" discovered that there is an axis for the whole universe and the axis goes through the Earth's orbit. 😀 🎉 Based on this evidence, it's pretty clear to me that everything was put out there for us to enjoy as God's special creation. What an amazing discovery. Thank you science! Has anyone else heard about this? Here is a link to the full video review https://bit.ly/3hYe2hF

-1 points