Everything posted by wtf

-

Mathematics is a Fairy Tale.

Fictionalism. https://plato.stanford.edu/entries/fictionalism-mathematics/

-

Relationship between a programming step and a math function discovered.

Was this for me? A TM program is a finite sequence of symbols taken from an at most countably infinite alphabet. There are therefore at most countably many TM programs of length 1, countably many of length 2, countably many of length 3, and so forth. The countable union of countably many sets is countable. Therefore there are at most a countable infinity of TMs. And for TMs you can substitute programs in any Turing-complete language: FORTRAN, COBOL, Python, C++, or whatever. Since there are uncountably many sets of natural numbers (per Cantor), there must necessarily be sets of natural numbers whose members can NOT be generated by any computer program. That contradicts your claim, to the extent that I can interpret it. https://en.wikipedia.org/wiki/Turing_machine This does not, by the way, contradict your thesis. Your premises are wrong but your conclusion is right! A step in a computer program is a transition from one state of the computer's hardware to some other state. Practical computers -- real-life digital computers implemented physically -- are finite state machines. They make a succession of transitions from one state to another, and those transitions can be taken to be mathematical functions. https://en.wikipedia.org/wiki/Finite-state_machine
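The counting argument can be made concrete with a small Python sketch. It assumes a finite alphabet (a simplification of the "at most countable" alphabet above), and the function name `all_programs` is just an illustrative choice. Listing strings shortest-first gives every finite string a definite position in one list, which is what countability means.

```python
from itertools import count, islice, product

def all_programs(alphabet):
    """Yield every finite string over `alphabet`, shortest first.

    Each string shows up after finitely many steps, so the strings
    (and hence the programs among them) can be numbered 1, 2, 3, ...
    """
    for length in count(1):
        for symbols in product(alphabet, repeat=length):
            yield "".join(symbols)

# First few "programs" over a hypothetical two-symbol alphabet.
first_six = list(islice(all_programs("ab"), 6))
print(first_six)  # ['a', 'b', 'aa', 'ab', 'ba', 'bb']
```

No such list can exist for the sets of natural numbers, which is why most of them have no program.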

-

Relationship between a programming step and a math function discovered.

I'm not sure what you mean. There are uncountably many sets of natural numbers, but only countably many Turing machines. So most (in the sense of all but countably many) sets of natural numbers can not be described by programs. Can you justify your claim or put it into context? To me it seems manifestly false.

-

Usage of the Empty Set.

-

Philosophical Implications Of Infinite Parallel Multiverses

That point was made by the great American philosopher Donald Rumsfeld, when he said: "Reports that say that something hasn't happened are always interesting to me, because as we know, there are known knowns; there are things we know we know. We also know there are known unknowns; that is to say we know there are some things we do not know. But there are also unknown unknowns—the ones we don't know we don't know. And if one looks throughout the history of our country and other free countries, it is the latter category that tends to be the difficult ones." You aren't planning to invade Iraq under false pretenses by any chance, are you? https://en.wikipedia.org/wiki/There_are_unknown_unknowns

-

Flawed Understanding of Deduction/Induction - where does it come from?

Worth pointing out that mathematical induction is a deductive process. It's one of the Peano axioms and follows in ZF from the axiom of infinity. Proofs using mathematical induction are deductive, not inductive in the scientific or philosophical sense.

-

Mathematics is Inconsistent!

There's a point in the proof of the paradoxical decomposition of the free group on two letters where you have a decomposition into five pieces, but one of the pieces is a single point that appears in both copies of the decomposition. You have to get rid of it by "Hilbert shifting" as in Hilbert's hotel. You move every point "up one room" to remove the point from one of the copies without losing any points. That's what I thought OP meant, but I don't know if that's actually what he had in mind.

-

Mathematics is Inconsistent!

Measure theory and fractals are not involved in the proof of Banach-Tarski. It's true that the pieces of the decomposition are nonmeasurable, but the existence of nonmeasurable sets is not difficult to prove without invoking any measure theory beyond the definition of a measure. At the very least, one need not study or look up measure theory in order to follow the proof of Banach-Tarski. Fractals don't enter into it at all AFAIK. The Vsauce video on Banach-Tarski is very good, as is the Wikipedia page on the subject. In particular, the proof outline on Wikipedia is straightforward, and not all that difficult to follow, if one is willing to patiently work through it. The proof has a lot of moving parts, but each part is relatively simple. The essence of the proof is that there's a paradoxical decomposition of the free group on two letters. This does not involve the axiom of choice or nonmeasurable sets. I can't find a clean, elementary discussion of this online and it's a bit lengthy but perhaps I can write something about it later. There's a brief description here. https://en.wikipedia.org/wiki/Paradoxical_set The bottom line is that Banach-Tarski is a theorem that does not contradict any other mathematics. Vsauce vid: https://www.youtube.com/watch?v=s86-Z-CbaHA https://en.wikipedia.org/wiki/Free_group ps -- I see that Step 1 of the proof sketch on Wikipedia has a pretty decent explanation of the paradoxical decomposition of the free group on two letters. https://en.wikipedia.org/wiki/Banach–Tarski_paradox#Step_1 Once that's established, the next step is to show that the group of rigid motions of Euclidean 3-space contains a copy of the free group on two letters, and therefore has a paradoxical decomposition. After that, it's just a technical matter of applying this decomposition to the unit ball in 3-space.
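For anyone who wants to poke at the free group step by hand, here is a rough Python sketch. The notation is an assumption made for the code: `A` stands for the inverse of `a` and `B` for the inverse of `b`, and S(x) means the reduced words beginning with x. It checks, on words up to a fixed length, that S(a) together with a·S(a⁻¹) already covers the whole group, which is the heart of the paradoxical decomposition. A finite check is only an illustration, not a proof.

```python
INVERSE = {"a": "A", "A": "a", "b": "B", "B": "b"}  # A = a^-1, B = b^-1

def reduced_words(max_len):
    """All reduced words of length <= max_len (no letter beside its inverse)."""
    words = {""}
    frontier = {""}
    for _ in range(max_len):
        frontier = {w + x for w in frontier for x in INVERSE
                    if not w or INVERSE[w[-1]] != x}
        words |= frontier
    return words

def left_multiply(letter, word):
    """Reduced form of letter * word, where word is already reduced."""
    if word and word[0] == INVERSE[letter]:
        return word[1:]          # the leading pair cancels
    return letter + word

n = 6
everything = reduced_words(n)
S_a = {w for w in everything if w.startswith("a")}
S_A = {w for w in reduced_words(n + 1) if w.startswith("A")}
shifted = {left_multiply("a", w) for w in S_A}   # a * S(a^-1)

# S(a) and a*S(a^-1) are disjoint and together give every word up to length n.
assert S_a.isdisjoint(shifted)
assert S_a | shifted == everything
```

The same check works with `b` and `B`, so two of the four S-pieces rebuild the group once and the other two rebuild it again.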

-

What do you see as the difference (if any) between rational thinking (analysis) and logical thinking (analysis) ?

Two examples to illustrate the difference between logic and rationality. Spock is logical. He always proceeds correctly from premises to a conclusion. Kirk is rational. He always does exactly the right thing, even if it's not logical. Kirk's correct actions often baffle Spock. Another example is the famous logician Kurt Gödel. He was often called the greatest logician since Aristotle. Later in life he became convinced that people were trying to poison him, and he refused to eat any food not prepared by his wife. When his wife fell ill and could not cook, Gödel refused to eat, and died of starvation. Gödel was supremely logical; and completely irrational.

-

Market Analysis - Is it science?

That's technical analysis. It's bullshit. The stock doesn't know what it did yesterday any more than a coin knows what it did in the last 50 flips. However, it's still rational to know a little about it, because so many other players believe in it! You need to know what the chart watchers are thinking in order to make your own moves. It's rational to pay attention to the irrational beliefs of others. Strange but true.

-

How much of me is in my memory?

And the converse question. The Flying Spaghetti Monster created you five minutes ago, with all your memories as they are. Does it matter that you've never had the experiences you thought you had, as long as you have the memories?

-

What computers can't do for you

There's a test called the Winograd schema challenge, named after Terry Winograd, an early computer scientist. https://en.wikipedia.org/wiki/Winograd_schema_challenge Winograd's original example was: The city councilmen refused the demonstrators a permit because they [feared/advocated] violence. A human knows that "they" refers to the councilmen if the verb is feared, and to the demonstrators if the verb is advocated. An AI presumably would have a hard time with that, because you need to know what the words mean. Winograd worked in the pioneering days of AI; I have no idea if the current generation of machine learning algorithms can figure these kinds of things out. However, the following page refers to a Winograd schema challenge from 2016, and there's a link on that page to a report on Winograd schemas issued in 2020. So evidently these are still considered an interesting challenge for AIs. This page has a lot of other relevant links. https://cs.nyu.edu/~davise/papers/WinogradSchemas/WS.html Computers have no ideas of anything; they consist only of switches that can each be in one of two states, 1 or 0. The meaning is supplied by the humans who program and interpret the bit patterns. Just as we can program a computer to do finite arithmetic, we can program a computer to do infinite arithmetic. For example, given two cardinal numbers [math]\aleph_\alpha[/math] and [math]\aleph_\beta[/math], we have [math]\aleph_\alpha + \aleph_\beta = \aleph_\alpha \cdot \aleph_\beta = \max(\aleph_\alpha, \aleph_\beta)[/math]. In other words cardinal addition and multiplication are ridiculously simple; the sum and product of two infinite cardinals is just the larger of the two. And cardinal subtraction and division aren't defined. Computers can also represent many of the countable ordinals, namely all the ordinals below the Church-Kleene ordinal, which is the first non-computable ordinal. 
The computer wouldn't have any idea that it's computing with transfinite numbers, any more than it knows that it's adding 1 + 1. It's just flipping bits according to instructions. Computers don't have ideas. That's critical to understand in all discussions of machine intelligence. Computers flip bits. Even the latest deep neural net is just flipping bits. You can see that by remembering that the AI programs run on conventional hardware. Their code is ultimately reducible to bit flipping; and, as Turing pointed out, to making marks with a read/write head on a long paper tape. You could in principle execute AI code using pencil and paper. That doesn't mean a computer can't be conscious or self-aware. Some people (not me) believe that the human brain operates by bit flipping. I think that's wrong, but some people do believe it. But whatever it is that AI's do, they do not do anything different than what regular vanilla computer programs do. They're organized cleverly for data mining, but they are not doing anything computationally new.

-

Can an A.I. System Be Considered An Inventor?

I am happy to find some agreement on an online forum. That's so rare! The original post was all about legal issues and patent decisions and so forth. It's perhaps unclear to some that courts and legal and political processes are not subject to philosophy or cognitive science or computer science or even rationality. The law is whatever some court says it is. If the OP wanted to ask, "Can a machine be sentient?" or some variation on that theme, that's what they should have asked. Point being that tools are tools, and just because a washing machine has a chip does not mean that it's sentient. Ok this is a common point made by the proponents of machine learning (ML) and neural network (NN) approaches to weak AI. Weak meaning specialized for a purpose, chess or driving or whatever, and not the mythical general or human-like AI which we are no closer to now than we were in the 1960's when the AI hype train got started. Let me make some brief points. The algorithms don't come from human programmers? Well that's what 4GL languages have been doing since the 1970's. The classic example is relational databases. You ask the computer to "Show me the names of yellow fruits," and it outputs, "Lemons, bananas, papayas." You did not tell it how to traverse the database. In fact you gave it the "what" and the database system itself figured out the "how," based on optimizing the query. Programs that write algorithms are very common and nothing at all new or revolutionary. It's true that the NN systems assign weights to nodes, and get "trained" on sample data and so forth. I know how this works. But it's not true that the algorithms are not created by humans. In fact they are. We could in principle trace the algorithms using pencil and paper. In fact the ML students find out that no matter how gee-whiz the field first sounds, what they actually end up doing is learning to optimize matrix multiplications. ML and NN's are algorithms created by humans. 
It may be true that "we can't easily tell what the program is doing." But in fact that's true of every nontrivial program anyone ever wrote. You write a fifty line program and it doesn't do what you think you told it to do, and you spend the rest of the day figuring out why. Or some maintenance programmer gets hired by a bank and has to dive into the hundreds of millions of lines of code that date back to the 1960's. You try to fix things and not break other things. Very few actual professional programmers have the faintest idea how the systems they maintain actually work. Most working programmers work with APIs and inside frameworks or application servers and have no idea even of the local operating system facilities. Let alone the system and hardware layers. The average working programmer has very little idea of how their programs are working, especially in the modern age. I could belabor this point but I hope you catch the sense of my response. The most advanced "deep" neural net in the world is a conventional program running on conventional hardware. The nodes and paths are data structures; you train the network on sample data (the "corpus" as they call it), and it cranks out impressive-looking feats of weak AI. But it's in principle a physical implementation of a Turing machine. You could run the code with pencil and paper. It runs on standard, conventional hardware. And frankly a prosaic, everyday system like the global supply chain is more complicated than a chess engine. Nobody knows how the global supply chain works. It's used for grubby commerce so nobody puts it on a pedestal, but it's probably a billion or more lines of code distributed over thousands of individual enterprises. Nobody has any idea how it works. But to your main point: Even an optimizing compiler outputs algorithms that the human didn't write. It improves them, sometimes in creative ways. Programs have been writing other programs for a very long time. We have no idea. 
We don't know how minds work. Show me the part of the brain responsible for your self-awareness and subjective sense impressions. You can't do that because nobody in the world can. And surely we don't have bits and registers. The mind does NOT work anything like a digital computer. Only in the popular AI-hyposphere is this regarded as even a remotely sensible debating point. There's just no evidence for the thesis. Brains don't flip bits. And even if you push the neural network analogy, even that fails dramatically. NN's do pattern matching; they haven't the common sense or awareness of the world of a newborn human. That's a claim for which there's no evidence. It's just not true. It could, if evidence for your claims showed up someday. Since our best cognitive scientists haven't the foggiest idea how the mind works, you haven't the evidence to support your claim. Rapidly developing AI? Rapidly developing weak AI, yes. Playing chess and driving cars. Strong, or general AI? Hasn't made a lick of progress since the hype train got started in the 1960's. The question is about what some court might say. Here's one case that comes to mind. In 2017 Saudi Arabia granted citizenship to a robot. This decision was not made by philosophers or computer scientists or, frankly, any kind of deep thinkers. It was decided by politicians. If politicians pass a law decreeing that a washing machine with a chip is sentient, then it's legally sentient. It's not actually sentient, but in legal matters, it's the law that counts and not rationality. "The law is an ass," is an old saying. If the original question was, "Can a machine be sentient?" that would be something we could discuss. But if the question is, "Can a court rule a machine is sentient?" then of course it can. Courts of law do all kinds of crazy things. Well I'll say no on general principles. Since we have no idea what makes sentient consciousness, it's doubtful we could create one. 
We don't know enough about the mind, by a long shot. Can a computer be capable of "conceptualizing new ideas on its own?" No. At best it can do clever datamining. A classic case was a sophisticated AI that learned to distinguish wolves from huskies, something that's difficult for humans. It had a terrific success rate. Then they realized that the training data always showed huskies in a snowy background. What the program was actually doing was recognizing snow. https://innovation.uci.edu/2017/08/husky-or-wolf-using-a-black-box-learning-model-to-avoid-adoption-errors/ Another recent datapoint was when Facebook labelled the Declaration of Independence as hate speech. https://www.cnn.com/2018/07/05/politics/facebook-post-hate-speech-delete-declaration-of-independence-mistake/index.html With real-life examples such as those, you cannot make a case that programs are doing anything even remotely like what humans do in terms of creativity and awareness of the world. Weak AI systems do sophisticated pattern matching on their training data, nothing more. You can easily find dozens if not hundreds of similar examples. Here's one: an AI fooled by changing a single pixel. https://www.bbc.com/news/technology-41845878 The hype around AI is really off the charts. It's easier to put into perspective if you know the history of the AI hype cycle. This article traces it back to the 1950's. True AI sentience has ALWAYS been "right around the corner." https://www.kdnuggets.com/2018/02/birth-ai-first-hype-cycle.html Regardless of whether the particular system you're asking about can be creative, I hope you can see that the inventor is SUING someone in a court of law. Even if they were to win their lawsuit, that would not make their machine creative. It would only mean they got some court to make a ruling, like the Indiana legislature that once (almost) decreed that the value of pi was 3.2. 
The only reason the bill didn't pass is that there just happened to be a mathematician present in the legislature that day. https://en.wikipedia.org/wiki/Indiana_Pi_Bill

-

Can an A.I. System Be Considered An Inventor?

You are asking a legal question, not a metaphysical one. If some court rules a washing machine a person, then legally a washing machine is a person. Modern washing machines have chips and "make decisions." That's a lot different than asking if an "AI" in quotes, since there is no true AI, can be sentient or creative or can invent things. We already have programs that write other programs, for example compilers and 4GL languages. Doesn't mean anything. The program in question runs on conventional hardware and is in principle no different than any other conventional program. Machine learning and neural net approaches are a clever way to organize datamining, but all they can do is identify patterns based on what's already happened. By definition they can't create. But again, those are arguments for the metaphysical position that contemporary computers are not sentient/creative/self-aware/whatever. You are asking a legal question though, and the answer to that is, "Whatever a court decides is the law." Are you suggesting that my hammer owns the chair I built with it? Such a view is nonsensical. Does your computer own the words you typed into this forum? A program doesn't invent anything, it just flips bits according to an algorithm. An algorithm written by a human. A computer program is a tool.

-

Proof there are as many numbers between 0 and 1 as 1 and infinity?

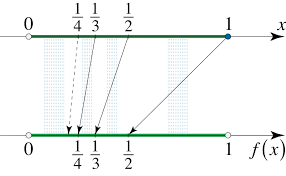

I found a picture of the mapping from (0,1] to (0,1). Instead of using the inverse powers of 2, it uses the sequence 1/2, 1/3, 1/4, 1/5, ... See how this works? The 1 at the right end of (0,1] gets mapped to 1/2. Then 1/2 gets mapped to 1/3; 1/3 gets mapped to 1/4, and so forth. The mapping is reversible so this is a bijection.
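The shift in the picture can be sketched in a few lines of Python. This is only an illustration: it uses exact fractions, so it can only be fed rational inputs (irrationals are simply left where they are by the real mapping), and the names `f` and `f_inverse` are made up for the example.

```python
from fractions import Fraction

def f(x):
    """Map (0,1] to (0,1): send 1/n to 1/(n+1), leave everything else alone."""
    x = Fraction(x)
    if x.numerator == 1:                      # x is 1/n for some n >= 1
        return Fraction(1, x.denominator + 1)
    return x

def f_inverse(y):
    """Undo f: send 1/m (m >= 2) back to 1/(m-1), leave everything else alone."""
    y = Fraction(y)
    if y.numerator == 1 and y.denominator >= 2:
        return Fraction(1, y.denominator - 1)
    return y

print(f(1), f(Fraction(1, 2)), f(Fraction(2, 3)))  # 1/2 1/3 2/3
```

Having an explicit inverse is what "the mapping is reversible" amounts to.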

-

Proof there are as many numbers between 0 and 1 as 1 and infinity?

Walk through it step by step. Convince yourself that the mapping is: * Injective: Different inputs go to different outputs; and * Surjective: Everything in the target set gets hit. Then, by definition, the mapping is a bijection. Stepping through the details will make the idea clear. To see exactly how it works, I suggest using the method I showed at the end (instead of my solution). Map 0 to 1/2, map 1/2 to 1/4, 1/4 to 1/8, etc. What happens is that 0 gets absorbed and all the inverse powers of 2 (1/2, 1/4, 1/8, ...) get slid down one space, mapping [0,1) to (0,1).

-

Proof there are as many numbers between 0 and 1 as 1 and infinity?

1/x is a bijection from (0,1) to (1, infinity). There's a bijection from [0,1) to (0,1). How do we do this? The rationals in (0,1) are countable so they can be enumerated as [math]r_1, r_2, r_3, \dots[/math]. Now you define a bijection [math]f: [0,1) \to (0,1)[/math] as follows: If [math]x[/math] is irrational, then [math]f(x) = x[/math]. That is, [math]f[/math] leaves all the irrationals unchanged. [math]f(0) = r_1[/math]. And [math]f(r_n) = r_{n+1}[/math]. In other words [math]f(r_1) = r_2, f(r_2) = r_3[/math], and so forth. Now you can see that every rational in (0,1) is in the range of [math]f[/math], and [math]f[/math] is reversible; that is, it's both injective and surjective. So it's a bijection. Graphically, we've mapped the rationals like this: [math]0 \to r_1 \to r_2 \to r_3 \to r_4 \to \dots[/math], in effect sliding 0 into the enumerated rationals in (0,1), which do NOT include 0. This trick amounts to embedding Hilbert's hotel in the unit interval. Initially the hotel is full, with guest 1 in room 1, guest 2 in room 2, etc. Now an "extra guest" named 0 shows up. We move guest 1 to room 2, guest 2 to room 3, and so forth, leaving room 1 empty. Then we move the extra guest 0 into room 1. In effect we've made room for the extra point at 0 by sliding everyone else up one room. To biject [0,1] to (0,1) we just do the same trick twice. Map 0 to [math]r_1[/math], map 1 to [math]r_2[/math], map [math]r_n[/math] to [math]r_{n+2}[/math], and leave the irrationals alone. Then to map [0,1) or [0,1] to (1, infinity), just compose the bijections. First map [0,1) or [0,1] to (0,1), then map (0,1) to (1, infinity). There are some other clever solutions in these Stackexchange threads. 
https://math.stackexchange.com/questions/28568/bijection-between-an-open-and-a-closed-interval https://math.stackexchange.com/questions/213391/how-to-construct-a-bijection-from-0-1-to-0-1 The checked answer by Asaf Karagila in the first link gives a completely explicit solution by sliding along 1/2, 1/4, 1/8, etc., without the need for a mysterious enumeration. That is, to map from [0,1) to (0,1) you map 0 to 1/2, 1/2 to 1/4, etc., and leave everything else alone. To map [0,1] to (0,1) you map 0 to 1/2, 1 to 1/4, 1/2 to 1/8, 1/4 to 1/16, etc. I like that one, clean and simple.
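Here is a hedged Python sketch of that last recipe, composed with 1/x to land in (1, infinity). The function names are invented for illustration, and exact fractions are used, so only rational inputs can be tried; every other point of [0,1] is left alone by the first map.

```python
from fractions import Fraction

def is_inverse_power_of_two(x):
    """True for 1/2, 1/4, 1/8, ... (numerator 1, denominator a power of 2)."""
    d = x.denominator
    return x.numerator == 1 and d >= 2 and (d & (d - 1)) == 0

def closed_to_open(x):
    """[0,1] -> (0,1): 0 -> 1/2, 1 -> 1/4, 1/2^k -> 1/2^(k+2), else unchanged."""
    x = Fraction(x)
    if x == 0:
        return Fraction(1, 2)
    if x == 1:
        return Fraction(1, 4)
    if is_inverse_power_of_two(x):
        return x / 4
    return x

def closed_to_ray(x):
    """[0,1] -> (1, infinity): first squeeze into (0,1), then take 1/y."""
    return 1 / closed_to_open(x)

for x in (0, 1, Fraction(1, 2), Fraction(1, 3)):
    print(x, "->", closed_to_open(x), "->", closed_to_ray(x))
```

The two endpoints take the slots 1/2 and 1/4, and the inverse powers of 2 each move down two places to make room.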

-

If we didn't have the stars...

I've wondered the same thing, but about the scientific rather than philosophical effects. We looked at the stars and drew pictures in the sand and worked out trigonometry and early theories of astronomy. What if everything else had been the same but the earth were covered by clouds? We'd still have gravity, but we couldn't look at the heavens. How would science have progressed? Hope this isn't too much of a thread hijack but it's a question that's long been on my mind.

-

prime dilemma

Yes exactly. I suppose you'd have to subtract the two integers and see if the result is integer-divisible by the modulus. That seems like the most sensible way. Probably not the only way.

-

prime dilemma

Great question. Yes they are "the same but a little different." In math, mod is an equivalence relation on the integers. We say that [math]a \equiv b \pmod n[/math] for two integers [math]a, b[/math] if it happens to be the case that [math]n | a - b[/math], where the vertical bar means "divides." So for example [math]5 \equiv 3 \pmod 2[/math] and [math]17 \equiv 5 \pmod 3[/math]. You can verify that this is an equivalence relation: It's reflexive, symmetric, and transitive. It partitions the integers into [math]n[/math] pairwise-disjoint equivalence classes. It's a fundamental concept in number theory. In programming languages, mod is a binary operator that inputs two integers and outputs a third: 5 % 3 = 2. It inputs 5 and 3, and outputs the remainder when 5 is integer-divided by 3. The math and programming concepts are closely related. For non-negative [math]a[/math], [math]a \ \% \ n[/math] is the remainder when [math]a[/math] is integer-divided by [math]n[/math]; that is, the result of [math]a \ \% \ n[/math] is the smallest non-negative element of the equivalence class of [math]a[/math] under the [math]\pmod n[/math] equivalence relation. The tl;dr is that in math, mod is an equivalence relation that inputs a pair of numbers and a modulus and returns True or False; whereas in programming, mod is a binary operator that inputs an integer and a modulus and outputs the smallest non-negative member of the equivalence class of the integer mod the modulus. The difference is shown by, say, the fact that [math]17 \equiv 14 \pmod 3[/math]; but [math]17 \ \% \ 3 = 2[/math] and not [math]14[/math]. That is, even though mathematically [math]17 \equiv 14 \pmod 3[/math], in a programming language, [math]17 \ \% \ 3 == 14[/math] would evaluate to False. That's an example that illustrates the difference.
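A short Python sketch of the two notions side by side. The helper name `congruent` is made up for the example; it uses the "subtract and check divisibility" test.

```python
def congruent(a, b, n):
    """The math relation: True when n divides a - b, i.e. a ≡ b (mod n)."""
    return (a - b) % n == 0

# The relation answers True/False about a pair of integers.
print(congruent(17, 14, 3))   # True
print(congruent(5, 3, 2))     # True

# The operator picks one representative out of the equivalence class.
print(17 % 3)                 # 2
print(17 % 3 == 14)           # False
```

One caveat: for negative operands the result of `%` depends on the language. Python gives `-1 % 3 == 2`, while C gives `-1`, so the "smallest non-negative member" description is only safe for non-negative inputs.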

-

prime dilemma

I don't think they have names, but there's a famous theorem of Fermat that says an odd prime p is the sum of two squares if and only if p = 1 (mod 4). https://en.wikipedia.org/wiki/Fermat's_theorem_on_sums_of_two_squares
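The theorem is easy to spot-check by brute force. A minimal Python sketch, with helper names invented for the example; it only tests small primes, so it illustrates the statement rather than proving it.

```python
from math import isqrt

def is_prime(n):
    """Trial division; fine for small n."""
    if n < 2:
        return False
    return all(n % d for d in range(2, isqrt(n) + 1))

def two_squares(p):
    """Return (a, b) with a*a + b*b == p and a <= b, or None if there is none."""
    for a in range(1, isqrt(p) + 1):
        b = isqrt(p - a * a)
        if b >= a and a * a + b * b == p:
            return (a, b)
    return None

for p in range(3, 60, 2):
    if is_prime(p):
        print(p, p % 4, two_squares(p))
```

Every prime printed with remainder 1 comes with a pair, and every prime with remainder 3 comes with `None`.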

-

Could the Internet become self aware?

I think it's interesting that this question always gets asked in terms of the Internet, because that's the only complex computer system people have a daily experience of. But it's far from the most complex and mysterious computer system. If any computer system were to become self-aware, my bet would be the global supply chain. The system that moves raw materials from here to component factories there to integration sites somewhere else to distribution points somewhere else and ultimately puts a finished consumer good on the shelf at your local big box store, at a price point attractive to buyers yet high enough to ensure a profit for every single actor along the chain. The global supply chain is an immensely complicated system, far more complex than the Internet, whose architecture is generally well understood. It involves maintaining just-in-time inventories, tracking taxes and tariffs across international and local borders, integration of air, sea, and land transportation, predictions of consumer demand and raw material supply, and all the rest of it. It's a system that nobody sees but that affects literally every physical thing around us, from the furniture we sit on to the food in the fridge, and the fridge itself. It touches everything. You can turn off the Internet in your home, but not the global supply chain. If the thesis is that a sufficiently complex system can become self-aware, the global supply chain would be my candidate. Not the Internet, whose architecture is simple by comparison.

-

GNSV operators

Yes, the Leibniz series is known to converge extremely slowly. Fun beginning programming project. I think he was continuing the series, not any previous conversation.
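For anyone who wants to try the beginner project, a minimal Python sketch (the function name `leibniz_pi` is just an illustrative choice). It sums the first terms of 4(1 - 1/3 + 1/5 - 1/7 + ...) and prints how far the result is from pi.

```python
from math import pi

def leibniz_pi(terms):
    """Approximate pi with the first `terms` terms of the Leibniz series."""
    total = 0.0
    for k in range(terms):
        total += (-1) ** k / (2 * k + 1)
    return 4 * total

for n in (10, 100, 1000, 10000):
    approx = leibniz_pi(n)
    print(n, approx, abs(approx - pi))
```

The error after N terms is roughly 1/N, so each extra correct digit costs about ten times as many terms.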

-

Something that looks like a contradiction in ZF

Aha. I did help you. I am pointing out your specific error. You are not asserting the question of whether one set is an element of another. You are interpreting the left hand side as a string that specifies a set. That's your error. You're equivocating "n in N such that n is prime" as a specification, on the one hand, and as a set, on the other. That is YOUR error, not an error in logic books. The logic books DON'T make that error. If it's a string, then you have to take its Gödel number to ask if it's prime. If it's a set, you don't. But you can't have it both ways at the same time.

-

Something that looks like a contradiction in ZF

You replied before I edited that out. I actually don't think I can help. I agree with you that [math]\{n \in \mathbb N : n \text{ is prime}\}[/math] has a Gödel number, which in fact is not prime (since it will be the product of a bunch of prime powers). Aha I have a bit of insight. If [math]S[/math] is a statement in the language of set theory, let [math]G(S)[/math] be its Gödel number. Let [math]P[/math] be the set of primes. Then [math]G(\{n \in \mathbb N : n \text{ is prime}\}) \in P[/math] is a valid statement with a definite truth value, which in fact we can determine to be False. But [math]\{n \in \mathbb N : n \text{ is prime}\} \in P[/math] is NOT a valid statement, because it mixes up the metalanguage with the model. I think if you rewrite your argument, including the notation [math]G(S)[/math] whenever you intend to take some sentence's Gödel number, the error in your argument will be more clear. So I can restore my edit. I think I did help you. Let me know when you get the million. My contribution is worth at least a few bucks.
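To see why a Gödel number is a product of prime powers, here is a toy Python sketch. The encoding is an assumption made purely for illustration: each character's code is taken to be its Unicode code point, and the i-th prime is raised to the i-th character's code. Real Gödel numberings assign codes to the symbols of a formal language, but the shape of the result is the same.

```python
def primes():
    """Yield 2, 3, 5, ... by trial division (fine for short strings)."""
    found = []
    n = 2
    while True:
        if all(n % p for p in found):
            found.append(n)
            yield n
        n += 1

def godel_number(text):
    """Toy G(S): product of (i-th prime) ** (code of i-th character)."""
    g = 1
    for p, ch in zip(primes(), text):
        g *= p ** ord(ch)     # hypothetical symbol code: the code point
    return g

g = godel_number("{n}")
print(g % 2 == 0 and g > 2)   # True, so g is certainly not prime
```

Under this toy encoding G(S) is a specific, very composite integer, and asking whether it belongs to P is a perfectly ordinary question. The string S itself is a different kind of object from the number G(S).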