Everything posted by Prometheus

-

Artificial Consciousness Is Impossible

Your figure of a being and an environment reminds me of Markov blankets, which render a set of internal states and a set of external states conditionally independent of each other given the blanket states. In this framework, I think the distinctions between intelligence, consciousness and self-awareness lie on a continuum and so are not qualitatively different, unless there is some kind of 'phase transition' when Markov blankets are embedded in one another to a sufficient extent. (The free energy principle, from which this model is derived, draws heavily on physics, so it might be of interest to you.)
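A minimal numerical sketch of the conditional-independence idea, assuming the simplest possible case: a hypothetical chain in which an external state E influences a blanket state B, which in turn influences an internal state I. The probability tables are made up for illustration, and this uses the Bayesian-network notion of a Markov blanket rather than the full free energy principle formalism. The point is that E and I are dependent overall but become independent once B is known.

```python
from itertools import product

# Hypothetical binary chain: external state E -> blanket state B -> internal state I.
p_e = {0: 0.7, 1: 0.3}                      # P(E)
p_b_given_e = {0: {0: 0.9, 1: 0.1},         # P(B | E)
               1: {0: 0.2, 1: 0.8}}
p_i_given_b = {0: {0: 0.6, 1: 0.4},         # P(I | B)
               1: {0: 0.1, 1: 0.9}}

# The joint distribution P(E, B, I) factorises along the chain.
joint = {(e, b, i): p_e[e] * p_b_given_e[e][b] * p_i_given_b[b][i]
         for e, b, i in product((0, 1), repeat=3)}

def conditionally_independent(joint, tol=1e-12):
    """Check P(E, I | B) == P(E | B) * P(I | B) for every value of B."""
    for b in (0, 1):
        p_b = sum(p for (e_, b_, i_), p in joint.items() if b_ == b)
        for e, i in product((0, 1), repeat=2):
            p_ei_b = joint[(e, b, i)] / p_b
            p_e_b = sum(joint[(e, b, i2)] for i2 in (0, 1)) / p_b
            p_i_b = sum(joint[(e2, b, i)] for e2 in (0, 1)) / p_b
            if abs(p_ei_b - p_e_b * p_i_b) > tol:
                return False
    return True

def marginally_independent(joint, tol=1e-12):
    """Check P(E, I) == P(E) * P(I) when B is NOT conditioned on."""
    for e, i in product((0, 1), repeat=2):
        p_ei = sum(joint[(e, b, i)] for b in (0, 1))
        p_e_only = sum(p for (e_, b_, i_), p in joint.items() if e_ == e)
        p_i_only = sum(p for (e_, b_, i_), p in joint.items() if i_ == i)
        if abs(p_ei - p_e_only * p_i_only) > tol:
            return False
    return True

print("independent given the blanket:", conditionally_independent(joint))
print("independent without the blanket:", marginally_independent(joint))
```

Here the external state still influences the internal state, but only *through* the blanket: once B is fixed, learning E tells you nothing more about I.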

-

Suggestions for using AI

I guess it's easier to take the law into your own hands and destroy things than to collect evidence and construct a well-reasoned case against something.

-

Artificial Consciousness Is Impossible

You might be interested in the work of Michael Levin who, as I understand it, talks about layers of computation in organisms: organelles performing computations which, in concert with other organelles, perform computations at a cellular level, and similarly up through tissues, organs, individuals and societies.

-

Large Language Models (LLM) and significant mathematical discoveries

I'd also add cellular automata (perhaps Rule 30) to convey the idea that simple, precisely known rules can generate unpredictable patterns. That gives an intuition for why, at a high level, we don't know how deep learning architectures produce their outputs.
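A minimal sketch of Rule 30, assuming a fixed-width row whose out-of-range neighbours count as 0 (other treatments use an unbounded or wrapping row). The whole "program" is the 8-bit number 30, read as a lookup table over each cell's (left, centre, right) neighbourhood, yet the printed triangle quickly becomes irregular.

```python
def step(cells, rule=30):
    """Apply one update of an elementary cellular automaton.

    Each cell's next state is the bit of `rule` indexed by its
    (left, centre, right) neighbourhood read as a 3-bit number.
    Cells beyond the edges are treated as 0.
    """
    n = len(cells)
    nxt = []
    for i in range(n):
        left = cells[i - 1] if i > 0 else 0
        centre = cells[i]
        right = cells[i + 1] if i < n - 1 else 0
        index = (left << 2) | (centre << 1) | right
        nxt.append((rule >> index) & 1)
    return nxt

def run(width=31, generations=15, rule=30):
    """Evolve from a single live cell and return every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(generations):
        cells = step(cells, rule)
        history.append(cells)
    return history

if __name__ == "__main__":
    for row in run():
        print("".join("#" if c else "." for c in row))
```

For Rule 30 the lookup table is equivalent to the one-line formula `left XOR (centre OR right)`, which makes the contrast between how simple the rule is and how messy its output looks even starker.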

-

AI is Dumb!

It does seem domain-specific. In medicine, in blinded evaluations, panels of physicians have rated LLM answers to medical questions as comparable to or better than physicians' answers. I couldn't find equivalent papers for maths, but there are papers assessing it in isolation. It makes sense that LLMs would struggle more with maths than with medicine, as the former is more abstract and less talked about, while medicine is more embedded in our language and a more common topic of conversation. If you can find a copy of Galactica, you might find it more useful, as its training included LaTeX equations and it's also designed to show intermediate steps in its working.

-

How does ChatGPT work?

There's an additional step, called tokenisation, in which words and punctuation are broken down into 'tokens', which can roughly be thought of as components of words. I imagine this makes it even less like how humans learn language.
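To make tokenisation a little more concrete, here is a toy sketch of byte-pair encoding (BPE), the family of algorithms GPT models use to build their token vocabularies. It is a deliberate simplification: it works on characters rather than bytes, splits on whitespace, and uses a tiny made-up corpus. It repeatedly merges the most frequent adjacent pair of symbols, so frequent fragments become single tokens while rarer words stay split into pieces.

```python
from collections import Counter

def most_frequent_pair(words):
    """Count adjacent symbol pairs across all words, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    # Ties go to the pair seen first; None once every word is a single symbol.
    return max(pairs, key=pairs.get) if pairs else None

def merge_pair(words, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = Counter()
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i < len(symbols) - 1 and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] += freq
    return merged

def learn_bpe(corpus, num_merges):
    """Learn up to `num_merges` merge rules from a whitespace-separated corpus."""
    words = Counter(tuple(word) for word in corpus.split())
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:
            break
        merges.append(pair)
        words = merge_pair(words, pair)
    return merges, words

merges, vocab = learn_bpe("low low low lower lowest", 2)
print(merges)
print(dict(vocab))
```

With two merges on `"low low low lower lowest"`, the learned merges are `('l', 'o')` then `('lo', 'w')`, leaving `lower` split as `low` + `e` + `r`: a 'component of a word' rather than a whole word.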

-

How does ChatGPT work?

With models such as ChatGPT there is another potential source of bias (and a way of mitigating it). People have already mentioned that the model selects from a distribution of possible tokens. That is what the base GPT model does, but this process is then steered via reinforcement learning from human feedback (RLHF). There are several ways to do this, but essentially a human is shown various outputs of the model and scores or ranks them, and those scores feed back into the objective function. The reinforcement during this process could steer the model either towards bias or away from it. This is one argument proponents of open-source models use: we don't know, and may never know, the reinforcement regimen of ChatGPT. Open-source communities are more transparent about which guidelines were used and who performed the RL.

Makes me consider what we mean by learning. I wouldn't have considered this example learning, because the model weights have not been updated by this interaction. What has happened is that the model is using the context of the previous answers it has given: essentially, it is being asked to generate the most likely outputs given that the inputs already include wrong attempted answers. The default context length is 2048 tokens, with a current (and rapidly increasing) maximum of 4096. I would put this in the domain of prompt engineering rather than learning, as it's up to the human to steer the model to the right answer. But maybe it is a type of learning?
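A minimal sketch of how a human ranking of outputs can be turned into a training signal, assuming the pairwise reward-model loss described in OpenAI's InstructGPT paper; as the post says, the exact regimen used for ChatGPT is not public. The reward values below are hypothetical numbers standing in for a reward model's scores.

```python
import math
from itertools import combinations

def pairwise_loss(r_preferred, r_other):
    """-log(sigmoid(r_preferred - r_other)), computed in a numerically stable way.

    Small when the reward model scores the human-preferred output higher.
    """
    d = r_preferred - r_other
    if d >= 0:
        return math.log1p(math.exp(-d))
    return -d + math.log1p(math.exp(d))

def ranking_loss(rewards_best_first):
    """Average pairwise loss over every (better, worse) pair implied by a ranking.

    `rewards_best_first` holds scores for K outputs in the order a human
    ranked them (best first), giving K*(K-1)/2 comparisons.
    """
    pairs = list(combinations(rewards_best_first, 2))
    return sum(pairwise_loss(better, worse) for better, worse in pairs) / len(pairs)

# Hypothetical reward-model scores for three outputs a labeller ranked A > B > C.
agrees_with_human = [2.0, 0.5, -1.0]
disagrees_with_human = [-1.0, 0.5, 2.0]
print(ranking_loss(agrees_with_human), ranking_loss(disagrees_with_human))
```

Minimising this loss pushes the reward model to agree with the labellers; the language model is then optimised against that reward model (with PPO, in InstructGPT's case). That is the route by which the labellers' choices, and any biases in them, get passed on to the model.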

-

How does ChatGPT work?

The foundation models behind ChatGPT aren't trying to be factual. A common use of ChatGPT is by science fiction writers: they will at times want accurate science and maths and at other times want speculative, or simply 'wrong', science and maths in service of a story. Which you want will determine what you consider a 'right' or good answer. Prompt engineering is the skill of giving inputs to a model such that you get the type of answers you want, i.e. learning to steer the model. A badly driven car still works. Or wait for the above-mentioned Wolfram Alpha API, which will probably make steering towards factually correct maths easier. BTW, a question for the thread: are we talking about ChatGPT specifically, LLMs, or potential AGI in general? They all seem to get conflated at different points in the thread.

-

ChatGPT and science teaching

More attention should be paid to Galactica, which is specifically trained on scientific literature. Even though it is a smaller model trained on a smaller corpus, that data (i.e. scientific literature) is of much higher quality, which results in much better outputs for scientific purposes. They also incorporated a 'working memory' token to help the model work through intermediate steps towards its outputs, i.e. showing its working. I would love to use this for literature reviews; there's just far too much in most domains for any human to get through.

-

ChatGPT logic

Has anyone tried repeating the same question multiple times? If ChatGPT works in a similar manner to GPT-3, it samples from a distribution of possible tokens (not quite letters/punctuation) at every step. There's also a temperature parameter, T; raising it flattens the distribution, so the model samples more often from the tails and gives less likely answers.
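A minimal sketch of temperature sampling, assuming the usual formulation in which the model's raw scores (logits) are divided by T before a softmax. The logits and the 4-token vocabulary are hypothetical, and nothing here is taken from OpenAI's implementation. Running it shows that repeated draws from the same input can give different tokens, and that raising T moves probability mass into the tails.

```python
import math
import random

def softmax_with_temperature(logits, T):
    """Turn raw scores into probabilities; larger T flattens the distribution."""
    if T <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / T for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(logits, T, rng):
    """Draw one token index from the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, T)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]

logits = [4.0, 2.0, 1.0, 0.5]  # hypothetical scores for a 4-token vocabulary
rng = random.Random(0)
for T in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, T)
    draws = [sample_token(logits, T, rng) for _ in range(10)]
    print(T, [round(p, 3) for p in probs], draws)
```

At low T almost every draw is token 0; at T = 2 the less likely tokens turn up noticeably more often, which is why asking the same question repeatedly can give different answers.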

-

LHC costs money, is it worth it?

No, but our aspirations should be as big as the universe. The LHC costs roughly $4.5 billion a year. The global GDP is $85 trillion/year. The LHC therefore represents about 0.005% of humanity's annual wealth, or about 0.03% of the EU's annual GDP. A small price to pay to push at the borders of our ignorance.
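A quick check of the ratios, keeping in mind that a fraction has to be multiplied by 100 before it is quoted as a percentage. The LHC and world GDP figures are from the post; the EU GDP of about $15 trillion is an assumption, back-calculated from the post's own EU ratio of 0.0003.

```python
# Figures from the post; the EU GDP value is an assumption (see above).
lhc_cost_per_year = 4.5e9   # USD per year
world_gdp = 85e12           # USD per year
eu_gdp = 15e12              # USD per year, assumed

share_world = lhc_cost_per_year / world_gdp
share_eu = lhc_cost_per_year / eu_gdp

# The f-string '%' format multiplies by 100 for us.
print(f"share of world GDP: {share_world:.6f} = {share_world:.4%}")
print(f"share of EU GDP:    {share_eu:.6f} = {share_eu:.2%}")
```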

-

Artificial brain, mind uploading : what level of difficulty ? what do you think about it ?

Computational neuroscience would cover most foundations.

-

Artificial Consciousness Is Impossible

If he was accessing other 'processes', then he was not dealing with LaMDA. If he has been giving out information about Google's inner workings, I'm not surprised he had to leave; I'm sure he violated many agreements he made when he joined. But given what he believed about the AI, he did the right thing. I don't know anything more about him than that.

-

Artificial Consciousness Is Impossible

It's not an analogous situation, for (at least) 2 reasons. First, someone with no sense other than hearing is still not 'trained' only on words, as words form only part of our auditory experience. Nor does LaMDA have any auditory inputs, including words: the text is fed into the model as tokens (not quite the same as words, but close). Second, the human brain/body is a system known, in the most intimate sense, to produce consciousness. Hence, we readily extend the notion of consciousness to other people, notwithstanding edge cases such as brain-stem death. I suspect a human brought up truly on only a single sensory type would not develop far past birth (remembering that the 5-senses model was put forward by Aristotle and far underestimates the true number).

-

Artificial Consciousness Is Impossible

If you skip the clickbait videos and go to the actual publication (currently available as a preprint), you'll see exactly what LaMDA has been trained on: 1.56 trillion words. Just text, 90% of it English. Level 17 and level 32.

-

Artificial Consciousness Is Impossible

The entire universe exposed to LaMDA is text. It doesn't even have pictures to associate with those words, and it has no sensory inputs. To claim that LaMDA, or any similar language model, has consciousness is to claim that language alone is a sufficient condition for consciousness. Investigating the truth of that implicit claim gives us another avenue to explore.

-

Artificial Consciousness Is Impossible

LaMDA is a language model designed for customer interaction. The Google employee was a prompt engineer tasked with fine-tuning the model to be suitable for these interactions, because out of the box and unguided it could drift towards anything in its training corpus (e.g. it could favour language seen in erotic novels, which may not be what Google want, depending on exactly what they're selling). Part of its training corpus would have included sci-fi books, some of which describe our imagined interactions with AI. It seems the engineer steered the AI towards these tendencies by asking leading questions.

-

The Futility of Exoplanet Biosignatures

Dunno, but the PI of that Nature paper is very active on Twitter; he came up with the idea and would probably answer your question.

-

The Futility of Exoplanet Biosignatures

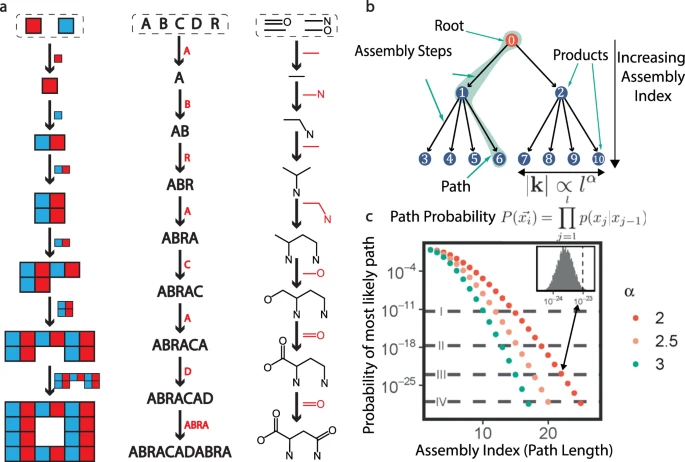

Assembly theory posits that complex molecules found in high abundance are (almost surely) universal biosignatures. From their publication: https://www.nature.com/articles/s41467-021-23258-x At the moment it only has a proof of concept with mass spectrometry, but it's a general theory of complexity, so it could work with other forms of spectroscopy. An interesting direction, anyway.

-

First use of 'soil' from the Moon to grow plants.

It was unknown whether the plants would germinate at all; the fact that they did tells us that the regolith did not interfere with the hormones necessary for this process. The plant they chose was the first plant to have its genome sequenced, allowing them to look at the transcriptome to identify changes in gene expression due to the regolith, particularly which stress responses were triggered. They also compared regolith from 3 different lunar sites, allowing them to identify differences in morphology, transcriptomes etc. between sites. Full paper here: https://www.nature.com/articles/s42003-022-03334-8

-

The Consciousness Question (If such a question really exists)

Sounds like you're describing panpsychism. There's a philosopher called Philip Goff who articulates this view quite well.

-

The Consciousness Question (If such a question really exists)

Some people have tried to develop methods of measuring consciousness in the most general sense. I think the most developed idea is integrated information theory (IIT), put forward by the neuroscientist Giulio Tononi in 2004. It measures how integrated the parts of a system are, i.e. how much information the whole carries over and above its parts. Even if you accept this as a reasonable measure, actually applying it requires searching over all possible ways of partitioning the system's connectivity, so 'measuring' the consciousness of a worm with around 300 neurons would currently take 10^9 years.
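A rough illustration of why exhaustive IIT calculations blow up, assuming (as a simplification) that the cost is dominated by checking the ways a system can be cut into parts. This is not an implementation of Φ; it only counts candidate partitions. The value n = 302 is used because that is the neuron count of C. elegans.

```python
def bipartitions(n):
    """Ways to cut a system of n elements into two non-empty parts."""
    return 2 ** (n - 1) - 1

def bell_numbers(n_max):
    """Bell numbers B_0..B_n_max (all ways to partition n elements), via the Bell triangle."""
    bells = [1]
    row = [1]
    for _ in range(n_max):
        # Each new row starts with the last entry of the previous row...
        new_row = [row[-1]]
        # ...and each further entry adds the entry above-left to its left neighbour.
        for x in row:
            new_row.append(new_row[-1] + x)
        row = new_row
        bells.append(row[0])
    return bells

for n in (4, 10, 20, 40):
    print(n, bipartitions(n), bell_numbers(n)[-1])
print("digits in the bipartition count for n = 302:", len(str(bipartitions(302))))
```

Even the simplest count, two-way cuts, is a 91-digit number for 302 elements, and the full set of partitions grows faster still, which is why shortcuts and approximations are needed before IIT can be applied to real nervous systems.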

-

What computers can't do for you

So it's a matter of complexity? Fair enough. Thanks for answering so clearly; I ask this question a lot, not just here, and rarely get such a clear answer.

Not any closer? There are some in the community who believe that current DNNs will be enough: it's just a matter of having a large enough network and a suitable training regime. Yann LeCun, who pioneered CNNs, is probably the most famous. Then there are many who believe that symbolic representations need to be engineered directly into AI systems; Gary Marcus is probably the biggest advocate of this. Here's a 2 hour debate between them:

There are a number of neuroscientists using AI as a model of the brain. Some interesting papers argue that what some networks are doing is at least correlated with certain visual centres of the brain. This interview with a neuroscientist details some of that research (around 30 mins in, although the whole interview might be of interest to you):

An interesting decision by Tesla was to use vision-only inputs, as opposed to competitors who use multi-modal inputs and combine visual data with lidar and other data. Tesla did this because their series of networks were getting confused when the data streams sometimes gave apparently contradictory inputs, analogous to humans getting dizzy when their inner ear tells them one thing about motion and their eyes another. Things like that make me believe that current architectures are capturing some facets of whatever is going on in the brain, even if they're still missing a lot, so I think they do bring us closer.

-

What computers can't do for you

If you were going to ask someone to guess when fusion is going to be a reality, you'd still give more credence to engineers' and physicists' guesses than to those of random people on the internet, wouldn't you?

-

What computers can't do for you

This survey of researchers in the field gives a 50% chance of human-level intelligence in ~40 years. It's probably the most robust estimate we're going to get.