Everything posted by Enthalpy

-

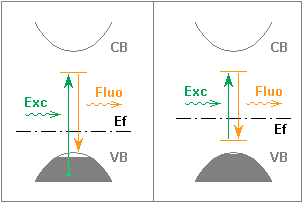

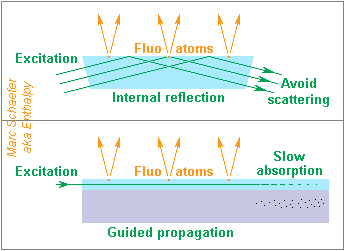

There was still room at the bottom. You guessed: I want individual atoms as smaller light sources.

Take an atom that de-excites in, say, 50ns. Pump it enough that it emits every 500ns on average. The optics under test shall catch 1/4 of the photons and the CCD convert 1/2 of them to carriers. That gives 5000 electrons in 20ms, but we want to observe the diffraction pattern. Imagine a sidelobe carries 1/10 of the power and spreads over 150 pixels: then each pixel gets 3 electrons. The pixel can leak 500 electrons per 20ms frame at room temperature, with a fluctuation of 23 electrons.

But there are solutions. If the CCD integrates over 60s instead of 20ms, the signal becomes 9000 electrons and the noise 1200 electrons: 7 sigma are usable. Software that adds 20ms frames over 60s can compensate vibrations. Also, a field of view of 1mm² has 10µm² for each of 100,000 emitting atoms. Use the previously suggested software autoadaptive filter to add the signal over all atoms and get 42 sigma in 20ms. Or cool the CCD. Or combine several solutions.

--------------------------

1mm² emits here some 20nW. This is as much as a pinhole of d=0.03mm over an old green LED. We use a CCD at its noise limit at room temperature: good hobby astronomers achieve it, so it's not easy.

Minimize stray light at the CCD and at the source. Diffusion and stray luminescence must be minimal in the matrix that holds the emitting atoms.

From idealized band diagrams of luminescence, we see that materials like SiC, GaN and GaP, which usually have many radiating deep levels in the forbidden band, are unlikely to fit. This must preclude injecting minority carriers through a junction to pump the emitting atoms. Instead, the matrix is more likely a very transparent material without fluorescence, like silica, quartz... with the emitting atoms pumped optically.

If the transition is between two deep levels of the emitting atom, pumping can be more selective (and the pumping light possibly created elsewhere by the same species), and the bands can be full, improving transparency and hindering the transitions from parasitic deep levels. Note that at such tiny concentrations, the emitting atom itself must absorb the pumping light.

Fluorescence time is documented for lasing species, but we need short times. One example - to be improved - is cerium-doped yttrium aluminium garnet (Ce:YAG), whose fluorescence lasts 20ns to 70ns. Several luminescent species of varied colours in a single matrix can help the user.

--------------------------

Because refraction at a flat surface is astigmatic, and the optics under test supposedly have little depth of field, all emitting atoms must lie a few tens of nm under the surface, a depth common for semiconductors. Implanting with 1µA spread over 1dm² during 160µs achieves the unusual dopant density. Undesired impurities that fluoresce must be even scarcer.

The pumping light can reach the emitting atoms by undergoing an internal reflection, but confining it in a superficial layer looks better: the power is where it is needed and pumps fewer undesired fluorescent impurities, which should then be kept well under 10¹¹ cm⁻³.

The strong pumping light shall not leak from the matrix. Guided propagation allows absorbing it around the emission field over several mm, by means that avoid hard transitions and the associated leaks. For instance, some dopant can create non-radiative absorption or lossy conduction there; it can be in the light-carrying layer or within the evanescent wave in the substrate below, which can itself be a stack. This keeps essentially the same materials and shapes, so the entrance of the absorption zone scatters no light.

The pumping light can make several passes at the emitting atoms, for instance by internal reflection.

Marc Schaefer, aka Enthalpy
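The electron budget in the post above can be re-run with a short script. This is only a sketch under the post's own figures: it counts the dark-current shot noise alone, as the post does, and every name in it is invented for illustration. The post rounds the per-pixel signal down to 3 electrons, which gives its 7 and 42 sigma; the unrounded figures come out slightly higher. The implant line assumes singly charged ions.

```python
import math

# Figures quoted in the post
emission_period_s = 500e-9       # one photon per atom every 500 ns on average
optics_fraction = 1 / 4          # share of the photons caught by the optics
ccd_efficiency = 1 / 2           # share of caught photons converted to carriers
sidelobe_fraction = 1 / 10       # share of the power carried by the sidelobe
sidelobe_pixels = 150            # pixels over which the sidelobe spreads
dark_electrons_per_frame = 500   # leakage of one pixel during one 20 ms frame
frame_s = 20e-3

def sidelobe_snr(integration_s, atoms=1):
    """Signal, dark-noise sigma and their ratio for one sidelobe pixel.

    Summing over several atoms adds their signals but also the dark
    charge of the pixels involved, so the ratio grows as sqrt(atoms).
    """
    electrons = integration_s / emission_period_s * optics_fraction * ccd_efficiency
    signal = atoms * electrons * sidelobe_fraction / sidelobe_pixels
    dark = atoms * dark_electrons_per_frame * integration_s / frame_s
    noise = math.sqrt(dark)
    return signal, noise, signal / noise

one_frame = sidelobe_snr(frame_s)                      # about 3.3 e- over 22 e- noise
one_minute = sidelobe_snr(60.0)                        # about 8 sigma
all_atoms_one_frame = sidelobe_snr(frame_s, 100_000)   # about 47 sigma

# Implant dose: 1 uA during 160 us spread over 1 dm2 (= 10,000 mm2),
# assuming singly charged ions.
ELEMENTARY_CHARGE = 1.602176634e-19
ions_per_mm2 = 1e-6 * 160e-6 / ELEMENTARY_CHARGE / 1e4

for label, (s, n, ratio) in [("20 ms, 1 atom", one_frame),
                             ("60 s, 1 atom", one_minute),
                             ("20 ms, 1e5 atoms", all_atoms_one_frame)]:
    print(f"{label}: signal {s:.0f} e-, noise {n:.0f} e-, {ratio:.1f} sigma")
print(f"implanted ions per mm2: {ions_per_mm2:.0f}")
```

The implant dose lands close to the 100,000 atoms per mm² assumed for the field of view.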

-

Let's imagine it takes 1MW of mechanical power to move the complete train, of which 100kW is dissipated at the train and the rest is regained when braking. (By the way, it depends on where the engines are, on the train or at the track, and we haven't said how power gets to the train.) Put infrared radiators on the railway engine: 2m high, 15m long, on each side, with good emissivity. If the tunnel's walls are at 300K the radiators will reach 440K or +167°C. Inconvenient as is, but the design can improve. The tunnel itself gets heat over a huge length and can conduct it away. Take 100kW at 10m/s during accelerations, one train every 5min: 33W/m. With 0.4W/m/K in rock and a tunnel of r=3m conducting the heat out to R=100m, the wall gets 46K warmer under these heavy conditions. Circulate water in the tunnel to ease the portions of acceleration and braking.
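The two estimates above can be reproduced in a few lines. This is a rough sketch with assumptions of my own: the radiators are treated as black surfaces (emissivity 1) exchanging heat with the walls by radiation only, and the rock as a steady-state cylindrical shell between r and R. The function names are invented for the example.

```python
import math

STEFAN_BOLTZMANN = 5.670374e-8  # W/m2/K4

def radiator_temperature(power_w, area_m2, wall_k, emissivity=1.0):
    """Temperature at which a radiator sheds power_w towards walls at wall_k."""
    return (power_w / (emissivity * STEFAN_BOLTZMANN * area_m2) + wall_k ** 4) ** 0.25

def rock_temperature_rise(power_per_m, conductivity, r_inner, r_outer):
    """Steady-state temperature drop across a cylindrical shell of rock."""
    return power_per_m / (2 * math.pi * conductivity) * math.log(r_outer / r_inner)

radiator_area = 2 * 2.0 * 15.0               # two sides, 2 m high, 15 m long
radiator_k = radiator_temperature(100e3, radiator_area, 300.0)

heat_per_metre = 100e3 / 10.0 / (5 * 60)     # 100 kW at 10 m/s, one train per 5 min
wall_rise_k = rock_temperature_rise(heat_per_metre, 0.4, 3.0, 100.0)

print(f"radiators: {radiator_k:.0f} K")
print(f"heat input: {heat_per_metre:.0f} W/m, wall rise: {wall_rise_k:.0f} K")
```

With a real emissivity below 1 the radiators would run hotter, which is one reason the design needs improving.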

-

Metals have some transparency if they're thin, like 100nm and below for optical frequencies. There, the index does influence the transmission. It influences the reflection whether the metal is thin or thick. Because light is attenuated so quickly in metals, tables usually give the index as a complex number.

-

First, no tyre can rotate at 700km/h. You need wheels of plain metal. Second, even with downforce, a normal car is too sensitive to wind, accidental lift, and so on. Even on the best possible flat terrain. There are enough examples of endurance cars taking off (Le Mans and other places) at only 300km/h despite downforce. Such a speed needs the special aerodynamic design used for speed records: http://en.wikipedia.org/wiki/Land_speed_record http://en.wikipedia.org/wiki/ThrustSSC heavy, narrow, and of special shape.

-

http://www.swissmetro.ch/en http://de.wikipedia.org/wiki/Swissmetro

-

For the inference engine and the Prolog interpreter, you could try asking Yann LeCun for his opinion. He speaks English and worked at New York University last time I heard from him. He was previously involved in artificial intelligence and special hardware. He might well suggest programming a neural network on the GPU (he worked on machine learning by gradient back-propagation). I just fear this is banal.

-----

For the error-correction codes, if you can team up with a telecom engineer, then programming the soft-decoding of block codes is accessible to a software engineer.
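To give an idea of what soft-decoding of a block code involves, here is a toy sketch, not anything from a real decoder. It assumes a Hamming(7,4) code with one common systematic generator matrix, bits sent as +1 (for 0) and -1 (for 1), and white Gaussian noise, for which picking the codeword with the best correlation is the maximum-likelihood choice. The received samples are made up so that two weak samples have the wrong sign.

```python
from itertools import product

# Systematic generator matrix of a Hamming(7,4) code (one common choice).
G = [
    [1, 0, 0, 0, 1, 1, 0],
    [0, 1, 0, 0, 1, 0, 1],
    [0, 0, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]

def encode(msg):
    """Multiply the 4-bit message by G modulo 2."""
    return [sum(m * g for m, g in zip(msg, column)) % 2 for column in zip(*G)]

# Brute force: the codebook has only 16 entries.
CODEBOOK = [(list(msg), encode(msg)) for msg in product((0, 1), repeat=4)]

def soft_decode(received):
    """Keep the analogue samples and pick the best-correlated codeword."""
    def correlation(code):
        return sum(r * (1 - 2 * c) for r, c in zip(received, code))
    return max(CODEBOOK, key=lambda entry: correlation(entry[1]))[0]

def hard_decode(received):
    """Decide each bit first, then pick the nearest codeword."""
    bits = [1 if r < 0 else 0 for r in received]
    def distance(code):
        return sum(b != c for b, c in zip(bits, code))
    return min(CODEBOOK, key=lambda entry: distance(entry[1]))[0]

message = [1, 0, 1, 1]
# Made-up samples: positions 0 and 4 are weak and have the wrong sign.
received = [0.1, 0.9, -1.1, -0.8, -0.2, -1.0, 1.2]

soft_result = soft_decode(received)
hard_result = hard_decode(received)
print("sent", message, "soft", soft_result, "hard", hard_result)
```

The hard decoder sees two bit errors, more than this code can correct, while the soft decoder uses the fact that the two wrong samples were weak. For bigger codes the codebook explodes, which is where the massive parallel arithmetic would come in.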

-

Several math paths are possible. I suppose propagation is perpendicular to the surfaces.

Microwave people would use S parameters (or scattering parameters): S11, S12, S21, S22 http://en.wikipedia....ring_parameters

If you don't want to invest in them, you can compute the multiple reflections in one step this way: if R is the amplitude of the pair of reflections (a complex number, for the field, not for the power), then 1 + R + R² + R³ + R⁴ + ... is 1/(1-R).

Or equivalently, you write that some fields are continuous at both interfaces, and solve the set of linear equations between the complex amplitudes, including the reflected fields.

----------

I'm interested in CW THz generators, having suggested some there (sorry for the horrible mess): http://www.physforum...opic=15617&st=0 Could you describe your generator, possibly through a link? Thanks!
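A quick numerical check of the series of round trips mentioned above, as a sketch only: the value of R is made up (magnitude 0.4, phase 2 rad) and stands for the complex field amplitude of one pair of reflections. The series converges because |R| < 1.

```python
import cmath

def round_trip_sum(R, terms):
    """Add the first `terms` contributions 1 + R + R^2 + ... one by one."""
    total = 0j
    contribution = 1 + 0j
    for _ in range(terms):
        total += contribution
        contribution *= R
    return total

R = 0.4 * cmath.exp(1j * 2.0)   # illustrative value, not from a real sample

partial = round_trip_sum(R, 60)
closed_form = 1 / (1 - R)

print(partial, closed_form)
```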

-

The main uncertainty is what epoch and continent the tuning fork is meant for. A is 440Hz in some places, 444Hz in others, many instruments are built for 442Hz, it has been 435Hz for most of the 20th century and was something like 415Hz during the Renaissance.

The proper way to determine that is to measure the fork with an electronic tuner. It will give you better than 0.5% precision. Half a tone is 5.9% and electronic tuners often divide half tones into "cents". I hear a 0.1% frequency difference under good conditions.

Other error sources are small: the initial precision of a tuning fork is of course better than 0.2% compared with the intended frequency, and the alloy used, elinvar, compensates the change in Young's modulus by its thermal expansion http://www.nobelprize.org/nobel_prizes/physics/laureates/1920/guillaume-lecture.pdf p469: 36s per day or 0.04% between 0°C / 15°C / 30°C

Not bad, is it? This is one reason that makes musical instruments difficult to build.
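For readers who want to convert between the percentages above and what a tuner displays, here is a small sketch. It relies on the usual definition of the cent as 1/100 of an equal-tempered half tone, so 1200 cents per octave; the function name is mine.

```python
import math

def cents(frequency_hz, reference_hz):
    """Interval between two frequencies in cents (100 cents = one half tone)."""
    return 1200 * math.log2(frequency_hz / reference_hz)

half_tone_percent = (2 ** (1 / 12) - 1) * 100    # about 5.9 %

print(f"half tone: {half_tone_percent:.2f} %")
print(f"0.5 % off: {cents(1.005, 1.0):.1f} cents")
print(f"0.1 % off: {cents(1.001, 1.0):.1f} cents")
print(f"442 Hz vs 440 Hz: {cents(442, 440):.1f} cents")
print(f"435 Hz vs 440 Hz: {cents(435, 440):.1f} cents")
```

So the pitch standards quoted above sit well within reach of a tuner that resolves 0.5%.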

-

A nano-powder of colored ceramic, for instance of iron oxide, needs no special temperature to be disseminated by a flame, for instance of encapsulating polybutadiene. Particle size determines the smoke's opacity and is better defined if the fire doesn't influence the particles. By contrast, a camp fire will destroy most of an organic dye, because the fire's temperature is high and poorly controlled.

-

Not so easy, because programmes benefit more from subtle algorithms than from computing speed, and subtle algorithms tend to run horribly on a GPU... But on such a parallel machine (a GPU) you could try to implement

- An inference engine
- A Prolog interpreter (if this is still at all fashionable)

as both exhibit a regular, uniform parallelism accessible to the machine. It could be less banal than image processing or finite elements. Though, I don't know CUDA well enough to tell if it's the right layer for such a purpose. Maybe you first need your own layer, different from CUDA.

==================================

You might have a look at error-correcting codes (ECC). A GPU helps a lot in decoding them, but... codes are full of maths. Not complicated for a mathematician: Galois fields and statistics. Telecom engineers manage to understand them (...more or less); with a software background, you would need to have studied this particular math or have a real passion and ease for it. http://en.wikipedia....correcting_code

You should first check what is already done on GPU, because ECC specialists program Digital Signal Processors and are at ease with software.

Some decoding tasks that might benefit from a GPU:

- Decoding bigger Reed-Solomon codes. This means just solving a big set of linear equations, where linear means in a Galois field instead of float numbers. Please check if the GPU can efficiently access random table elements, since these computations rely on (Galois field) logarithm tables.
- Decoding of concatenated codes. As they run many codes in parallel, they may fit a GPU - but often rely on Galois field computations.
- Decoding of random codes. These codes are optimized for correction efficiency but have no fast formal decoding algorithm, so a GPU may enable them.
- Soft-decoding of block codes. Soft means here that the received bits aren't hard-decided to 1 or 0 prior to error correction, but given a continuous value or a probability - it improves the correction performance. Fast and imperfect algorithms exist and work for "convolutional codes", but algorithms correcting block codes properly would perform a lot better. Here near-brute force algorithms may be best (please check), comparing the probability of all allowed and credible combinations of error positions; they'd need massive computation on float numbers, which a GPU would enable. Easier maths!
- Soft-decoding of random codes. Of course. Even more so because they are high-performance block codes and no good decoding method exists. As soft-decoding looks no more difficult for them than hard-decoding, grasp the opportunity. Easier maths!
- Soft-decoding of turbo codes. I suppose it exists already. The common description of turbo codes claims bits are 0 or 1 (hard decision) and are flipped when decoding along alternate directions; instead, you could attribute a probability to each bit and alter it softly according to the code in each direction, which would bring a brutal improvement to the correction capability. Since turbo codes let many codes run in parallel, they could fit a GPU.
- Soft-decoding of turbo codes that combine random codes. Of course.

I suppose these nearly-brute force methods will stay rather slow even on a GPU, so the typical use wouldn't be fiber optics transmissions, but rather deep-space craft. Improving data comms for legendary probes like Voyager or Pioneer would of course be fascinating, knowing that the Square Kilometer Array is under construction... Or for planned probes, sure.

http://en.wikipedia....wiki/Pioneer_10 and http://en.wikipedia....wiki/Pioneer_11
http://en.wikipedia.org/wiki/Voyager_1 and http://en.wikipedia.org/wiki/Voyager_2
http://en.wikipedia....p_Space_Network and http://en.wikipedia....Kilometer_Array

Marc Schaefer, aka Enthalpy

==================================

Some big science projects may benefit from GPUs and still not use them... Perhaps!

- The LHC lets a huge Internet grid of remote computers analyze and store the data collected by the detectors. I suspect it runs on the CPU of these computers. Use their GPU, if it's fast, and if the task fits it?
- The LHC's planned successors?
- The Square Kilometer Array needs processing power and is to rely on a grid of remote computers.
- Neutrino detectors, dark matter detectors, gravitational wave detectors...
- DNA sequencing

Smaller science or engineering:

- Compute the shape of a molecule, either from force fields or from first principles
- Compute the arrangement of (stiff...) molecules in a solid
- Compute band diagrams in crystals

In all these applications, first check what is already done on a GPU (protein folding, Seti and many more are) and what the people involved desire. Also, as nearly all run on Linux, CUDA may not be the proper layer... A Linux equivalent, or maybe OpenGL.
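To make the remark about Galois field logarithm tables more concrete, here is a minimal sketch of multiplication in GF(256) through log and antilog tables. The primitive polynomial 0x11D is an assumption on my side (it is a frequent choice for Reed-Solomon codes, but a given code may use another), and the function names are invented. The point to notice is that every product costs three table look-ups at data-dependent addresses, which is exactly the access pattern to test on a GPU.

```python
PRIMITIVE_POLY = 0x11D   # x^8 + x^4 + x^3 + x^2 + 1, assumed here

def build_tables(poly=PRIMITIVE_POLY):
    """Antilog (exp) and log tables for the generator element 2."""
    exp = [0] * 510          # doubled so that log sums need no modulo
    log = [0] * 256          # log[0] is unused: zero has no logarithm
    x = 1
    for i in range(255):
        exp[i] = x
        log[x] = i
        x <<= 1              # multiply by the generator
        if x & 0x100:
            x ^= poly        # reduce modulo the primitive polynomial
    for i in range(255, 510):
        exp[i] = exp[i - 255]
    return exp, log

EXP, LOG = build_tables()

def gf_mul(a, b):
    """Product through the tables: add the logarithms, look up the antilog."""
    if a == 0 or b == 0:
        return 0
    return EXP[LOG[a] + LOG[b]]

def gf_mul_bitwise(a, b, poly=PRIMITIVE_POLY):
    """Reference product by shift-and-xor, with no table at all."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= poly
        b >>= 1
    return result

print(hex(gf_mul(0x80, 2)), hex(gf_mul_bitwise(0x80, 2)))
```

The bitwise version is the alternative if random table access turns out to be slow on the target hardware: more arithmetic, but no look-ups.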

-

It still doesn't imply that most or all asteroids are made of compact and differentiated matter. Only that some are, and parts of these can be searched for on Earth with a metal detector. By the way, I once estimated that a body needs a diameter of only D=500km to be differentiated, a value consistent with what a recent spacecraft observed. This would be less than a giant asteroid: rather a big one.

-

I need more explanations - other readers maybe as well. Are you making a measurement and inferring the index? As an exercise, or to characterize precisely one sample? Acrylic's index is already well documented. How does light pass through your sample, what do you observe? What do you call "phase change"?

-

I don't want it to sublime, and it doesn't need to. Quite the opposite, fine solid ceramic particles will ascend with the flame and (something uncertain with a dye) resist the heat of the camp fire.

-

You'll change your specialty five times in your career, so don't choose the lessons only to fit the first one. I neglected many lessons because I knew exactly what job I wanted to have and what was needed for it, but meanwhile I could have used some of the math I neglected then. So, if time allows, try to learn more than the minimum fitting your first job.

-

The non-contact surveillance by laser was published long ago in a magazine, perhaps Optics and Laser Europe, as a working tool for metal sheet production. I won't find it again. The acoustic non-linear method may well be new, and then it would need to be tried before anyone can tell how sensitive it is. From the 140dB signal cleanliness I got in the past, my intuition tells me it would be sensitive. But not at the rod's ends, for which I see only a tomograph. I like more and more the magnetic pulse to create a mechanical shock in your rod (or in a sheet or other shapes). It induces a clean signal without the time-consuming mechanical contact. But as the sensor, I now prefer a capacitive one, and I still don't know how to protect the sensor against the actuator. On a sheet we could put them on different sides. Maybe more to come as I find time. Google microtomograph and microtomography: http://en.wikipedia.org/wiki/X-ray_microtomography http://www.skyscan.be/home.htm and many more.

-

Slightly better than a resistor, you can use an LM317 and a resistor, combined to make a constant current source. This makes the light output less dependent on the battery voltage, which varies a lot, and the lower minimum voltage drop lets you use the battery longer. The TO92 version of the LM317 would fit small currents. Maxim must also have complete solutions for exactly this situation, using a chopper to achieve high efficiency and at the same time squeeze everything out of a battery. But it's more complicated - probably too much.
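Choosing the resistor for the LM317 current source is a one-line computation, sketched here. It uses the nominal 1.25V reference that the LM317 holds between its output and adjust pins and neglects the small adjust-pin current; the 20mA LED current is only an example value, so check the datasheets of your parts.

```python
V_REF = 1.25   # nominal LM317 reference voltage, in volts

def program_resistor(led_current_a):
    """Resistor between the output and adjust pins for a given current."""
    return V_REF / led_current_a

def resistor_power(led_current_a):
    """Power dissipated in that resistor."""
    return V_REF * led_current_a

led_current = 0.020   # 20 mA, example value for a small LED
resistance_ohm = program_resistor(led_current)
power_w = resistor_power(led_current)

print(f"R = {resistance_ohm:.1f} ohm, dissipating {power_w * 1000:.0f} mW")
```

In practice you would take the nearest standard value, for instance 62 ohm, and accept the slightly different current.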

-

Proton plus neutron is which state?

Enthalpy replied to alpha2cen's topic in Modern and Theoretical Physics

Wouldn't, for instance, the deuteron's electric moment say a lot about its composition? Say, if the neutron and the proton share the charge by exchanging an up for a down, the mean charge will be at the centre. But if the proton and the neutron are side by side, the electric centre will be offset from the mass centre. Even if both are delocalized over the entire nucleus, they should have different excitation states which absorb or emit a gamma during the transitions. What's the difficulty? Sorry, I understand very little about this subject. At least Wikipedia claims to know something about it: http://en.wikipedia.org/wiki/Deuterium#Nuclear_properties_.28the_deuteron.29 with detailed descriptions of states. But maybe Wikipedia doesn't describe the present knowledge, or knowledge evolves quickly in this field.

-

OK. Some 3D-linked polymers are hard, like hard rubber. So judging from its hardness, the thing I obtained could be cross-linked. Though, I seem to remember that when burning, it melted like a polyolefin does, while I imagine heavily cross-linked polymers are all thermosets. What could the thing have been?

-

"The" temperature is defined at equilibrium, of which we're very far here. We could define various temperatures but these differ an awful lot then. For instance, red colour is associated with a temperature, say 700°C. The fine line width of the laser light defines a very low temperature. Nothing abstract: this is what cools ions to some mK in an ion trap. The power concentration defines a very high temperature. Take 20mW (not 200mW) from your laser diode, concentrated to 0.5µm² as on DVD: if absorptivity and emissivity were equal and through a single face, it would attain 30,000 K. Better keep moving.

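The 30,000 K figure for the focused laser spot can be checked with the Stefan-Boltzmann law. This is a sketch under the same idealization as the post: all the absorbed power is re-radiated by a black surface of the spot's area, through a single face, with no conduction at all. The function name is made up.

```python
STEFAN_BOLTZMANN = 5.670374e-8   # W/m2/K4

def radiative_equilibrium_k(power_w, area_m2):
    """Temperature at which a black surface radiates power_w from area_m2."""
    return (power_w / (STEFAN_BOLTZMANN * area_m2)) ** 0.25

spot_area_m2 = 0.5e-12                                   # 0.5 um2
t_20mw = radiative_equilibrium_k(20e-3, spot_area_m2)    # about 29,000 K
t_200mw = radiative_equilibrium_k(200e-3, spot_area_m2)  # only 1.8 times more

print(f"20 mW: {t_20mw:.0f} K, 200 mW: {t_200mw:.0f} K")
```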
-

Hello nice people! As a teenager I once obtained a polymer... But which one?

I had dry NaOH in a test tube as d=1mm balls, plus some aluminium balls, both coming directly from a household product sold to unclog toilet drains. I poured Cl3C-CH3 (1,1,1-trichloroethane sold as thinner for tipp-ex) on them. All at room pressure and temperature, and under the best teenager practices of unknown purity, uncontrolled proportions and approximate cleanliness.

An emulsion formed and rose to the surface where it formed a solid stopper, about 1/3 the volume of trichloroethane (maybe limited by the available NaOH). I had to break the test tube to get the stopper. When I broke the test tube, it smelled like trichloroethane, and I didn't notice odours like hypochlorite (which I recognize in water!) nor chlorine. The reaction hadn't evolved gas either.

The polymer (I suppose it was one) looked compact; it was white, softer than polypropylene but much harder than wax, EPDM or glue for hot glue guns - about as soft as polymethylpentene. Its density could match a polyolefin, but I had no means to measure it.

It burned like a polyolefin, with the faint smoke and typical flame and odour. An odour very different from that of polymers containing oxygen like POM or chlorine like PVC. A quiet flame and faint smoke very different from those of multiple-bond polymers like unloaded latex or polybutadiene.

A tridimensional network of C-CH3 would have an amount of hydrogen compatible with the reactants, but I'd expect it (wrongly?) to be a soft elastomer.

Can you suggest what it was? Thank you!

Marc Schaefer, aka Enthalpy

-

A piece of old tyre? Not the expected colour, but abundant smoke. Similarly: any kind of very finely ground ceramic of the colour you prefer, mixed with polybutadiene before vulcanization. Eco-friendly please. Iron oxide?

-

Why not. But if you want your project to be useful, it should fit normal users, not exotic research:

- Work on BOTH nVidia AND AMD processors. I wouldn't try software that I lose if I change my card.
- Work on normal graphics cards, not on specialized parallel processors that nobody has.
- Use standard compression formats like zip, rar, gzip, 7zip...
- As you won't write a full user interface (that's a different big project), your software should interface with an existing archiver like 7zip, IZArc... 7zip is an open source project, so people there publish their interfaces and may welcome you.
- Be faster than a four-core CPU, on which 7zip uses the SSE instructions and hyperthreading on all cores...

Under these conditions, none of which I see as optional, your compressor would be useful.

Other pieces of software that would benefit from GPGPU:

- An antivirus file parsing algorithm. At least one antivirus vendor (Kaspersky?) has begun doing it.
- Cryptography. Less new.

These two are harder to interface with existing software, as it tends to be commercial and non-public, so such a project would be rather academic with little usefulness.

One other use, very specialized but badly needed, is a step in the design of lithography masks for integrated circuit production. I believe it's the splitting of patterns into rectangles - sorry, it's an old story for me. It was a two-week task on the biggest computers 20 years ago, and still is today, because computers evolve only as fast as chips do. It is costly and wastes two weeks (often several times) in the time to market, so if you find a good implementation on a many-GPU machine, the few users will be very interested and eager to interface your implementation with their programme.

-

And the inside of a 6000K "box" will look white like our Sun, which is an approximation of a black body, because the strongest emitted colour doesn't have a photon energy of kT but a multiple of it.
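How big that multiple is can be computed directly, as sketched below. The assumption here is that "strongest colour" means the maximum of Planck's law per unit wavelength; per unit frequency the maximum sits at a lower multiple, about 2.8 kT. Setting the derivative of x^5/(e^x - 1) to zero, with x the photon energy divided by kT, gives x = 5(1 - e^-x), which a simple fixed-point iteration solves.

```python
import math

PLANCK = 6.62607015e-34        # J s
LIGHT_SPEED = 2.99792458e8     # m/s
BOLTZMANN = 1.380649e-23       # J/K

def peak_energy_over_kt(iterations=50):
    """Solve x = 5 (1 - exp(-x)) for the peak of Planck's law per wavelength."""
    x = 5.0
    for _ in range(iterations):
        x = 5.0 * (1.0 - math.exp(-x))
    return x

ratio = peak_energy_over_kt()                     # photon energy / kT at the peak
temperature_k = 6000.0
peak_wavelength_m = PLANCK * LIGHT_SPEED / (ratio * BOLTZMANN * temperature_k)

print(f"peak photon energy = {ratio:.3f} kT")
print(f"peak wavelength at {temperature_k:.0f} K = {peak_wavelength_m * 1e9:.0f} nm")
```

At 6000K the peak falls in the blue-green, in the middle of the visible range, with plenty of light on both sides.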

-

This is more or less the standard theory. Whether the whole asteroid belt is composed of dense bodies, I'm not so sure.

-

Hello you all!

A decade ago I suggested mixing a nano-powder of high-index ceramic in plastic to produce thinner spectacle lenses. If the powder is fine enough, the composite acts as a uniform material and doesn't diffuse light.

Now I read that at light-emitting diodes (LED), laser diodes, photodetectors, photovoltaic cells... the high index of the semiconductor is said to create parasitic reflections at the chip's surface. I'm not quite convinced this is still the case: maybe books and Internet pages describe the situation of several decades ago.

Anyway, a solution would be to encapsulate the chip in a transparent plastic loaded with a nano-powder of high-index ceramic. This can be cheaper than anti-reflective coatings made by semiconductor processes, and work over a larger bandwidth. It's easier than for spectacle lenses, since many opto components accept some diffusion.

Plastics for opaque encapsulation of semiconductors are commonly filled with a ceramic like silica. This stabilizes the dimensions against curing, humidity and temperature, to avoid mechanical stress. Opto components could choose a better ceramic like TiO2, ZrO2, GaN, SiC...

The ceramic's composition, and its concentration in the plastic, can vary along the light's path to optimize performance or price. Mixing several particle sizes eases high filling ratios, like in concrete.

Well, just in case this isn't already done...

Marc Schaefer, aka Enthalpy
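To put rough numbers on the parasitic reflection at the chip's surface, here is a sketch using the Fresnel formula for normal incidence between two lossless media. All indices are illustrative assumptions: 3.5 for the semiconductor, 1.5 for a plain plastic and 2.0 for a plastic loaded with ceramic. It looks only at the chip's surface, not at the outer surface of the encapsulation.

```python
def normal_reflectance(n1, n2):
    """Fraction of the power reflected at normal incidence between two media."""
    return ((n1 - n2) / (n1 + n2)) ** 2

N_CHIP = 3.5             # illustrative semiconductor index
N_AIR = 1.0
N_PLASTIC = 1.5          # illustrative plain encapsulant
N_LOADED_PLASTIC = 2.0   # illustrative ceramic-loaded encapsulant

r_air = normal_reflectance(N_CHIP, N_AIR)
r_plastic = normal_reflectance(N_CHIP, N_PLASTIC)
r_loaded = normal_reflectance(N_CHIP, N_LOADED_PLASTIC)

for name, r in [("air", r_air), ("plain plastic", r_plastic),
                ("loaded plastic", r_loaded)]:
    print(f"chip to {name}: {r * 100:.1f} % reflected")
```

With these made-up indices the loaded plastic halves the reflection again compared with the plain one, which is the whole point of raising the encapsulant's index.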