Everything posted by Genady

-

Exiobiology and Alien life:

I don't see how finding signs of life far away, from a long time ago, would change my mindset at all. Surely two data points are better than one.

-

Exiobiology and Alien life:

Even if we find signs of life in our neighbor galaxy, Andromeda, all we'll know is that it was there 2.5 million years ago. We won't have a way to know what happened to it since then or what is there now. We'll still be alone. The vastness of the universe doesn't help in this respect.

-

Exiobiology and Alien life:

To add to these considerations, there can't be "many [stars] far older than our own." Older, yes, but not far older. Our star is 5 billion years old and the universe is 14 billion years old. That is, our star's age is about 36% of the whole. Plus, it took time after the beginning to accumulate enough "metals" to start making long-lived stars and planets. We are perhaps of average age, not very young.

-

Do octopuses, squid and crabs have emotions?

So, this question is not about their emotions. It is about our emotions.

-

Do octopuses, squid and crabs have emotions?

What a strange question. Of course they do.

-

War Games: Russia Takes Ukraine, China Takes Taiwan. US Response?

An old fable: A Pan Am 727 flight, waiting for start clearance in Munich, overheard the following: Lufthansa (in German): “Ground, what is our start clearance time?” Ground (in English): “If you want an answer you must speak in English.” Lufthansa (in English): “I am a German, flying a German airplane, in Germany. Why must I speak English?” Unknown voice from another plane (in a beautiful British accent): “Because you lost the bloody war!”

-

Is there any program that can convert letters to numbers and/or words into sums thereof?

Here you are. I'm sure there are more. Word Value Calculator - Sum of Letters Numbers - Online Numerology (dcode.fr)
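For anyone who prefers a few lines of code to an online tool, here is a minimal sketch in Python. It assumes the simplest scheme (a=1, b=2, ..., z=26, case ignored, every other character counted as 0); the function names `letter_value` and `word_value` are made up for this illustration, and other numerology schemes would need a different mapping.

```python
def letter_value(ch: str) -> int:
    """Alphabet position of a letter (a=1 ... z=26); 0 for anything else.

    The a=1..z=26 mapping is an assumption; swap it for another scheme if needed.
    """
    ch = ch.lower()
    if len(ch) == 1 and "a" <= ch <= "z":
        return ord(ch) - ord("a") + 1
    return 0


def word_value(text: str) -> int:
    """Sum of the letter values of all characters in the text."""
    return sum(letter_value(ch) for ch in text)


# Show the per-letter numbers and their sum for a couple of words.
for word in ("entropy", "octopus"):
    print(word, [letter_value(c) for c in word], word_value(word))
```

Digits, spaces and punctuation contribute nothing under this mapping, so whole sentences can be passed to `word_value` as well.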

-

Choice, Chance and Sensible Logic

Hint: Internet search

-

Is there any program that can convert letters to numbers and/or words into sums thereof?

I don't know of such a program, but do you use Excel?

-

How can information (Shannon) entropy decrease ?

IMO, the answer to the first question depends on how the memory is used. In the extreme case when the memory is added but the system is not using it, there is no entropy increase. Regarding the second question, a probability distribution of the memory contents has information entropy. A probability distribution of micro-states of the gas in the box has information entropy as well. This is my understanding. It is interesting to run various scenarios and see how it works.

-

How can information (Shannon) entropy decrease ?

I don't know how deep it is, but I doubt that I'm overthinking this. I'm just following Weinberg's derivation of the H-theorem, which in turn follows Gibbs's, which in turn is a generalization of Boltzmann's. The first part of this theorem is that dH/dt ≤ 0. That is, H is time-dependent, which means P(α) is time-dependent. I think that in order for H to correspond to a thermodynamic entropy, P(α) has to correspond to a well-defined thermodynamic state. However, in other states it actually is "some kind of weird thing −∫P(α,t)lnP(α,t)."

This is how I think it's done, for example. Let's consider an isolated box of volume 2V with a partition in the middle. The left half, of volume V, has one mole of an ideal gas inside with a total energy E. The right half is empty. Now let's imagine that we have infinitely many of these boxes. At some moment, let's remove the partitions in all of them at once, and then let's take snapshots of the states of the gas in all the boxes at a fixed time t. We'll get a distribution of states of the gas in the process of filling the boxes, and this distribution depends on t. This distribution gives us P(α,t). The H-theorem says that, as these distributions change in time, the corresponding H decreases until it settles at a minimum value, when the distribution corresponds to a well-defined thermodynamic state. At that state, since S = −kH, it is the same as −S/k.
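The ensemble picture can be mimicked with a toy model. What follows is only a sketch under stated assumptions, not Weinberg's derivation: the gas is replaced by a single coordinate on a ring of 8 cells, the dynamics by a lazy symmetric random walk (a stand-in chosen because its transition probabilities are symmetric), and the names `h_functional`, `step` and `evolve` are made up for the illustration. The probability starts spread uniformly over the "left half" and H = Σ p ln p is tracked as it relaxes.

```python
import math


def h_functional(p):
    """H = sum_i p_i ln p_i, with 0 ln 0 taken as 0."""
    return sum(x * math.log(x) for x in p if x > 0.0)


def step(p, stay=0.5):
    """One step of a lazy symmetric random walk on a ring of len(p) cells.

    The walk is an assumed toy dynamics; its transition matrix is symmetric
    (hence doubly stochastic), which is what makes H non-increasing.
    """
    n = len(p)
    move = (1.0 - stay) / 2.0
    return [
        stay * p[i] + move * p[(i - 1) % n] + move * p[(i + 1) % n]
        for i in range(n)
    ]


def evolve(p0, steps):
    """Evolve the distribution and record H after every step."""
    p = list(p0)
    history = [h_functional(p)]
    for _ in range(steps):
        p = step(p)
        history.append(h_functional(p))
    return p, history


# All probability in the "left half" (cells 0..3) at t = 0, none in the right.
p0 = [0.25] * 4 + [0.0] * 4
p_final, hs = evolve(p0, 400)

print("H at t=0:      ", hs[0])
print("H at the end:  ", hs[-1])
print("-ln(8), minimum:", -math.log(8))
```

In this toy model H never increases from one step to the next and settles at −ln 8, the value for the uniform distribution over all cells. Only that final value has the equilibrium interpretation; the intermediate values are just H of the time-dependent distribution.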

-

How can information (Shannon) entropy decrease ?

I looked in Steven Weinberg's book, Foundations of Modern Physics, for his way of introducing the thermodynamic and the statistical-mechanical definitions of entropy and their connection, and I've noticed what seems to be an inconsistency. Here is how it goes:

Step 1. The quantity H = ∫ P(α) dα ln P(α) is defined for a system that can be in states parametrized by α, with probability distribution P(α). (This H is obviously equal to the negative of Shannon's information entropy up to a constant factor, log₂e.)

Step 2. It is shown that, starting from any probability distribution, a system evolves in such a way that H of its probability distribution decreases until it reaches a minimal value.

Step 3. It is shown that the probability distribution reached at the end of step 2, with the minimal H, is a probability distribution at equilibrium.

Step 4. It is shown that the minimal value of H reached at the end of step 2 is proportional to negative entropy, S = −kH.

Step 5. It is concluded that the decrease of H occurring in step 2 implies an increase in entropy.

The last step seems to be inconsistent, because only the minimal value of H reached at the end of step 2 is shown to be connected to entropy, and not the decreasing values of H before it reaches the minimum. Thus the decreasing H prior to that point cannot imply anything about entropy.

I understand that entropy might change as a system goes from one equilibrium state to another. This means that the minimal value of H reached at one equilibrium state differs from the minimal value of H reached at another equilibrium state. But this difference of minimal values is not the same as H decreasing from a non-minimum to a minimum.
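The remark in Step 1 about Shannon's entropy can be checked numerically. This is a minimal sketch for a discrete distribution (the integral becomes a sum); the example distribution and the function names `h_functional` and `shannon_bits` are assumptions made for the illustration.

```python
import math


def h_functional(p):
    """H = sum_i p_i ln p_i (natural log), with 0 ln 0 taken as 0."""
    return sum(x * math.log(x) for x in p if x > 0.0)


def shannon_bits(p):
    """Shannon information entropy in bits: -sum_i p_i log2 p_i."""
    return -sum(x * math.log2(x) for x in p if x > 0.0)


# An arbitrary example distribution over four states.
p = [0.5, 0.25, 0.125, 0.125]

H = h_functional(p)
S_bits = shannon_bits(p)

print("H (nats, negative):        ", H)
print("Shannon entropy (bits):    ", S_bits)
print("-H * log2(e), for comparison:", -H * math.log2(math.e))
```

The last two printed values agree, which is the statement that Shannon's entropy in bits equals −H·log₂e. It holds for any distribution, equilibrium or not, so it says nothing by itself about when H can be read as thermodynamic entropy.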

-

How can information (Shannon) entropy decrease ?

However, while pointing out some of others' mistakes, he has clarified some subtle issues for me, and I appreciate this.

-

How can information (Shannon) entropy decrease ?

Regarding Ben-Naim's book, unfortunately it is in fact disappointing. I'm about 40% through, and although it has clear explanations and examples, it spends too long, too repetitiously, debunking various metaphors, esp. in pop science. OTOH, these detours are easy to skip. The book could be shorter and could flow better without them.

-

How can information (Shannon) entropy decrease ?

Thank you for the explanation of indicator diagrams and of the introduction of thermodynamic entropy. Very clear! +1 I remember these diagrams from my old studies. They also show sometimes areas of different phases and critical points, IIRC. I didn't know, or didn't remember, that they are called indicator diagrams.

-

How can information (Shannon) entropy decrease ?

I'm reading this book right now. I saw his other books on Amazon, but this one was the first I picked. Interestingly, the Student's Guide book is next on my list. I guess you'd recommend it. I didn't see where you stated the simple original reason for introducing the entropy function, and I haven't heard of indicator diagrams... As you can see, this subject is relatively unfamiliar to me. I have only a general idea from short intros to thermodynamics, statistical mechanics, and information theory about 45 years ago. It is very interesting now that I'm delving into it. Curiously, I never needed it during a long and successful career in computer systems design. So, not 'everything is information' even in that field, contrary to some members' opinion ...

-

Schizophrenia (split from Evolutionary role of diversity of personality)

No.

-

Schizophrenia (split from Evolutionary role of diversity of personality)

Experience.

-

Schizophrenia (split from Evolutionary role of diversity of personality)

I know...

-

Schizophrenia (split from Evolutionary role of diversity of personality)

You test it.

-

Schizophrenia (split from Evolutionary role of diversity of personality)

Why is the discussion of schizophrenia focused on beliefs / world-view / societal judgement, while the most obvious and objective disturbance is hallucinations?

-

How can information (Shannon) entropy decrease ?

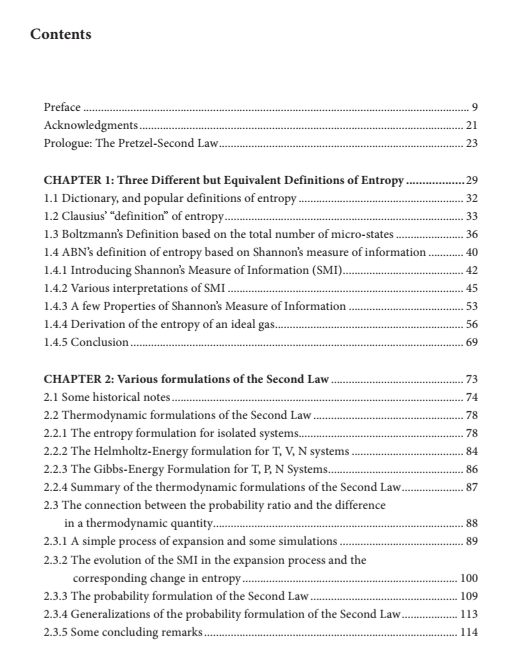

I've got it from Amazon US on Kindle for $9.99. Here is a review from the Amazon page: The contents: Hope it helps. Glad to assist as much as I can.

-

How can information (Shannon) entropy decrease ?

Since this thread has something to do with entropy - 😉 - I hope this question is not OT. Are you familiar with this book: ENTROPY: The Greatest Blunder in the History of Science, by Arieh Ben-Naim?

-

Maybe someone can help me solve this task, I really need help, please reply

Yes, I've found such a problem on the Internet, too. The difference is crucial.

-

Maybe someone can help me solve this task, I really need help, please reply

If you ever find out a geometric solution to this problem, please show it to me, if you don't mind.
