-

Posts

2578 -

Joined

-

Days Won

21

Everything posted by Ghideon

-

perpetual motion machine (split from topic of the same name)

Ghideon replied to JamesL's topic in Speculations

I got curious and checked your first source: Emphasis mine, source https://www.uu.nl/en/utrecht-university-library-special-collections/collections/early-printed-books/scientific-works/das-triumphirende-perpetuum-mobile-orffyreanum-by-johann-bessler Questions: How do we know that what you intend to build is Bessler's design? Where did you get hold of the details of Bessler's work? (I'm also expecting a response to my earlier questions) -

perpetual motion machine (split from topic of the same name)

Ghideon replied to JamesL's topic in Speculations

and for example Sorry, I have some trouble following your argumentation and description. To avoid confusion and to allow for discussion: -Are you claiming the device you are building will actually perform perpetual motion, breaking established laws of physics? Or: -Is this a mechanics/engineering project where you want to repeat any fraud committed by Bessler to make his wheel look like perpetual motion? For instance by hiding springs, batteries, motors or other devices. That generalisation does not apply to me. -

perpetual motion machine (split from topic of the same name)

Ghideon replied to JamesL's topic in Speculations

Nice build. Bessler did not construct any device that displayed perpetual motion; such devices do not work*. I think any current discussion is more about whether a deliberate fraud was committed or not. Here is a paper you may find interesting: Source: https://arxiv.org/pdf/1301.3097.p (Johann Ernst Elias Bessler was known as Orffyreus) *) according to currently established laws of physics; supported by observations and theories. -

How can information (Shannon) entropy decrease ?

Ghideon replied to studiot's topic in Computer Science

Thanks! This helps identify where I need more reading / studying. I'm of course aware of perfect or ideal processes. My world view though is biased by working with software and models that can be assumed to be 'ideal' but are deployed in a 'non ideal' physical reality where computation and storage/retrieval/transmission of (logical) information is affected by faulty components, neglected maintenance, lost documentation, power surges, bad decisions, miscommunications and what not. I think I should approach this topic more in terms of ideal physics & thermodynamics. +1 for the helpful comment. -

How can information (Shannon) entropy decrease ?

Ghideon replied to studiot's topic in Computer Science

Thanks for your input. I'll try to clarify by using four examples based on my current understanding. Information entropy in this case means the definition from Shannon. By physical entropy I mean any suitable definition from physics*; here you may need to fill in the blanks or highlight where I may have misunderstood** things.

1: Assume information entropy is calculated as per Shannon for some example. In computer science we (usually) assume an ideal case; physical implementation is abstracted away. Time is not part of the Shannon definition and physics plays no part in the outcome of the entropy calculation in this case.

2: Assume we store the input parameters and/or the result of the calculation from (1) in digital form. In the ideal case we also (implicitly) assume unlimited lifetime of the components in computers or unlimited supply of spare parts, redundancy, fault tolerance and error correction so that the mathematical result from (1) still holds; the underlying physics has been abstracted away by assuming nothing ever breaks or that any error can be recovered from. In this example there is some physics but under the assumptions made the physics cannot have an effect on the outcome.

3: Assume we store the result of the calculation from (1) in digital form on a real system (rather than modelling an ideal system). The lifetime of the system is not unlimited and at some future point the results from (1) will be unavailable, or if we try to repeat the calculation based on the stored data we may get a different result. We have moved from the ideal computer science world (where I usually dwell) into an example where the ideal situation of (1) and (2) does not hold. In this 3rd case my guess is that physics, and physical entropy, play a part. We lose (or possibly get incorrect) digital information due to faulty components or storage and this has an impact on the Shannon entropy for the bits we manage to read out or calculate. The connection to physical entropy here is one of the things I lack knowledge about but I'm curious about.

4: Assume we store the result of the calculation from (1) in digital form on an ideal system (limitless lifetime) using lossy compression****. This means that at a later stage we cannot repeat the exact calculation or expect an identical outcome since part of the information is lost and cannot be recovered by the digital system. In this case we are still in the ideal world of computer science where the predictions or outcome are determined by computer science theorems. Even if there is loss of information, physics is still abstracted away and physical entropy plays no part.

Note here the similarities between (3) and (4). A computer scientist can analyse the information entropy change and the loss of information due to a (bad) choice of compression in (4). The loss of information in (3) due to degrading physical components seems to me to be connected to physical entropy. Does this make sense? If so: It would be interesting to see where control parameters*** fit into "example 3 vs 4" since both have a similar outcome from an information perspective but only (3) seems related to physics.

*) assuming a suitable definition exists
**) Or forgotten, it's a long time since I (briefly) studied thermodynamics.
***) feel free to post extra references; this is probably outside my current knowledge.
****) This would be a bad choice of implementation for this example in a real case, it's just used here to illustrate and compare reasons for loss of information. https://en.wikipedia.org/wiki/Lossy_compression -

How can information (Shannon) entropy decrease ?

Ghideon replied to studiot's topic in Computer Science

I agree. I (yet) lack the knowledge about the role of control parameters so I'll make a general reflection for possible further discussion. When calculating Shannon entropy in the context of computer science the level of interest is usually logical. For instance your calculations of the coin example did not need to bother about the physical coins and papers to get to a correct result per the definition of entropy. Time is not something that affects the solution*. Physical things on the other hand such as papers, computers, and storage devices do of course decay; even if it may take considerable time the life span is finite. Does this make sense in the context of levels of description above? If so, then we may say that the computer program cannot read out exact information about each physical parameter that will cause such a failure. Failure in this case means that without external intervention (spare parts or similar) the program halts, returns incorrect data or similar, beyond what built-in fault tolerance is capable of handling. Does this relate to your following statement? Note: I've probed at a physical meaning of "cannot read out" in my above answer; an area I'm less familiar with but your comments trigger my curiosity. There are other possible aspects; feel free to steer the discussion towards what interests you @joigus. I will think about this for a while before answering; there might be interesting aspects to this from a practical point of view. That seems like a valid conclusion; I would end my participation in this thread if it was not fun. *) Neglecting the progress of questions and answers; each step, once calculated, does not change. -

How can information (Shannon) entropy decrease ?

Ghideon replied to studiot's topic in Computer Science

Your conclusion is, intentionally or unintentionally, incorrect. Your guess is incorrect. Data and information are different concepts and the differences are addressed differently depending on the context of discussion. Sorting out the details may be better suited for a separate thread. A quick example, here are three different Swedish phrases with very different meanings*. Only the dots differ:

får får får
får far får
far får får

A fourth sentence with a completely different meaning:

far far far

Without the dots the first three examples and the last example are indistinguishable and that has an impact on the entropy. The example addresses the initial general question about decreasing entropy. "About the same" is too vague to be interesting in this context. I take that and similar entries as an illustration of entropy as defined by Shannon; the redundancy in the texts allows for this to be filtered out without affecting the discussion. And if the level of noise is too high from a specific sender it may be blocked or disconnected from the channel altogether.

*) (approximate) translations of the four examples
1: do sheep give birth to sheep
2: does father get sheep
3: father gets sheep
4: go father go -

How can information (Shannon) entropy decrease ?

Ghideon replied to studiot's topic in Computer Science

Explicit definition of sender, receiver and channel is not required. A difference between 7-bit and 8-bit ASCII encoding can be seen in the mathematical definition of Shannon entropy. The example I provided is not about what a reader may or may not understand or find surprising (that is subjective), it's about mathematical probabilities due to the changed number of available symbols. In Swedish it is trivial to find a counterexample to your claim. Also note that you are using a different encoding than the one I defined so your comparison does not fully apply. -

Some factors and examples of a cause:
-Thickness of the layer of snow (due to amount of snow fallen or wind)
-Density/water content; dry or wet snow (temperature)
-Iced layer under a (too thin) layer of fresh snow (dry, cold snow falling on wet snow)

Related but maybe not within scope:
-Flat or sloped surface (due to wind or terrain for instance) (This may not qualify as a "floor")
-Iced surface on the fallen snow (wet snow followed by drop of temperature) (This may not qualify as "freshly fallen")
-Snow compacted by for instance a skier (not qualifying as "freshly fallen")

-

How can information (Shannon) entropy decrease ?

Ghideon replied to studiot's topic in Computer Science

A note on the initial question @studiot; How can Shannon entropy decrease. It’s been a while since I encountered this so I did not think of it until now; it's a practical case where Shannon entropy decreases as far as I can tell. The Swedish alphabet has 29 letters*; the English letters a-z plus "å", "ä" and "ö". When using terminal software way back in the days the character encoding was mostly 7-bit ASCII, which lacks the Swedish characters åäö. Sometimes the solution** was to simply 'remove the dots' so that “å”, “ä”, “ö” became “a”, “a”, “o”. This results in fewer symbols and increased probability of “a” and “o”. The result is a decreased Shannon entropy for the text entered into the program compared to the unchanged Swedish original. Note: I have not (yet) provided a formal mathematical proof so there’s room for error in my example. *) I’m assuming case insensitivity in this discussion **) another solution was to use the characters “{“, “}” and “|” from 7-bit ASCII as replacements for the Swedish characters. -
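The 'remove the dots' case can be checked numerically. Below is a minimal sketch, assuming entropy is estimated from the symbol frequencies of one short sample text; the names `shannon_entropy` and `REMOVE_DOTS` and the sample string are made up for illustration and are not from the thread.

```python
import math
from collections import Counter


def shannon_entropy(text: str) -> float:
    """Entropy in bits per symbol, estimated from symbol frequencies in `text`."""
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


# Hypothetical helper: the 'remove the dots' substitution described in the post.
REMOVE_DOTS = str.maketrans("åäö", "aao")

# Illustrative sample only; it contains both "å" and "a".
original = "får far får"
without_dots = original.translate(REMOVE_DOTS)

print(without_dots)
print(shannon_entropy(original), shannon_entropy(without_dots))
```

Merging two symbols that both occur in the text can only lower (or keep) the estimated entropy, which is consistent with the claim in the post. This is a frequency estimate on one sample, not a formal proof.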

How can information (Shannon) entropy decrease ?

Ghideon replied to studiot's topic in Computer Science

Ok. I had hoped for something more helpful to answer the question I asked. I tried to sort out if I disagreed with @studiot or just misunderstood some point of view; I did not know this was an exam that required such a level of rigor. "List" was just an example that studiot posted, we could use any structure. Anyway, since you do not like the generalisations I tried, here is a practical example instead, based on Studiot's bookcase. 1: Studiot says: “Can you sort the titles in my bookcase in alphabetical order and hand me the list of titles?” Me: “Yes” 2: Studiot: “Can you group the titles in my bookcase by color?” Me: “Yes, under the assumption that I may use personal preferences to decide where to draw the lines between different colors” 3: Studiot: “Can you sort the titles in my bookcase in the order I bought them?” Me: “No, I need additional information*” I was curious about the differences between 1, 2 and 3 above and if and how it applied to the initial coin example. One of the aspects that got me curious about the coin example was that it seemed open for interpretation whether it is most similar to 1, 2 or 3. I wanted to sort out if that was due to my lack of knowledge, misunderstandings or other. Thanks for the info but I already know how exams work. *) Assuming, for this example, that the date of purchase is not stored in Studiot's bookcase. -

How can information (Shannon) entropy decrease ?

Ghideon replied to studiot's topic in Computer Science

I did not want to encode information, I tried to generalise @studiot's example so we could compare our points of view. You stated I should define encoding: My question is: why? -

How can information (Shannon) entropy decrease ?

Ghideon replied to studiot's topic in Computer Science

Why is encoding important in this part of the discussion? In the bookcase example, does it matter how the titles are encoded? And in the coin example @studiot used "0101" (0=no, 1=yes); Shannon entropy is as far as I know unaffected if we used N=no, Y=yes instead, resulting in "NYNY". ? -
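The relabelling question can be tried directly. A minimal sketch, assuming entropy is estimated from symbol frequencies in the string; the function name `shannon_entropy` is made up for illustration.

```python
import math
from collections import Counter


def shannon_entropy(symbols: str) -> float:
    """Entropy in bits per symbol, estimated from symbol frequencies."""
    counts = Counter(symbols)
    total = len(symbols)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


answers_01 = "0101"
# Relabel 0 -> N and 1 -> Y; a one-to-one renaming of the symbols.
answers_ny = answers_01.translate(str.maketrans("01", "NY"))

print(answers_ny)
print(shannon_entropy(answers_01), shannon_entropy(answers_ny))
```

Both strings have two symbols with probability 1/2 each, so the estimate is the same; the formula only sees the probabilities, not the labels.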

I’m currently on a skiing trip and these natural sculptures of snow-covered trees make me think of pareidolia* and what triggers the phenomenon. How come I could easily spot a “yeti” but no “elephants”? Is pareidolia affected by the context? I do not know but my curiosity is triggered… By the way, here in the middle, is where I see the "yeti" *) Pareidolia is the tendency for perception to impose a meaningful interpretation on a nebulous stimulus, usually visual, so that one sees an object, pattern, or meaning where there is none. https://en.wikipedia.org/wiki/Pareidolia

-

How can information (Shannon) entropy decrease ?

Ghideon replied to studiot's topic in Computer Science

Thanks for your thoughts and comments. Maybe our example can be expressed mathematically? At least just to find where we share an opinion or disagree? Let I be information. J is some information that can be deduced from I. Some function f exists that produces J given I as input: f: I→J. Information K is required to create the function f. This could be a formula, an algorithm or some optimisation. In the bookcase example I is the book shelf and J is the list of books. Function f is intuitively easy, we create a list by looking at the books. Does this make sense? If so, in the bookcase example we have: J∈I and K∈I. No additional information is needed to create a list, we look at the books (maybe K=empty is an equally valid way to get to the same result). In any case the Shannon entropy does not change for J or I as far as I can tell; the entropy is calculated for a fixed set of books and/or a list. My curiosity is about non-trivial f and K. For instance in the initial example with the coin, and whether J∈I and K∈I hold in this case. And if there is any difference, how does the entropy of K relate to the entropy of I and J, if such a relation exists? I consider the function that produces the questions in the initial coin example to be "non-trivial" in this context. Is the thermodynamic analogy open vs closed system? Thanks! Something new to add to my reading list. -

How to create back propogation in a robot algorithm

Ghideon replied to Jalopy's topic in Computer Science

Back propagation* is used in machine learning, for instance in neural networks. Are you claiming that none of these have been applied to robotics? The proposed idea does not make much sense, sorry. *) See for instance https://en.wikipedia.org/wiki/Backpropagation -

How can information (Shannon) entropy decrease ?

Ghideon replied to studiot's topic in Computer Science

(Bold by me) Thanks for your input! Just to be sure, in this context an "ensemble" is the concept defined in mathematical physics*? I may need to clarify what I tried to communicate by using "globally". I'll try an analogy since I'm not fluent in the correct physics / mathematics vocabulary. Assume I looked at Studiot's example from an economic point of view. I could choose to count the costs for a computer storing the information about the grid, coin and questions. Or I could extend the scope and include the costs for the developer that interpreted the task of locating the coin, designed questions and did programming. This extension of the scope is what I meant by "globally". (Note: this line of thought is not important; just a curious observation; not necessary to further investigate in case my description is confusing.) As far as I can tell you are correct. If we would assume the opposite, "all paths of execution of a program are equally probable", it is easy to contradict by a simple example program. I am not sure. For instance when decrypting an encrypted message or expanding some compressed file, does that affect the situation? *) Reference: https://en.wikipedia.org/wiki/Ensemble_(mathematical_physics). Side note: "ensemble" occurs in machine learning, an area more familiar to me than thermodynamics. -
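One possible "simple example program" of the kind mentioned above is sketched here. It assumes inputs drawn uniformly from four values; the function `path_taken` and the path labels are invented for illustration.

```python
from collections import Counter
from fractions import Fraction


def path_taken(x: int) -> str:
    """A program with two execution paths, selected by the input."""
    if x == 0:
        return "rare"
    return "common"


# Assumption: the inputs 0, 1, 2, 3 are equally likely.
inputs = range(4)
counts = Counter(path_taken(x) for x in inputs)
probabilities = {path: Fraction(n, len(inputs)) for path, n in counts.items()}

print(probabilities)
```

Even with equally likely inputs, one path is taken a quarter of the time and the other three quarters of the time, so the paths are not equally probable.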

How can information (Shannon) entropy decrease ?

Ghideon replied to studiot's topic in Computer Science

I’m used to analysing digital processing of information but I’ve not thought about computations that take place as part of designing an algorithm and/or programming a computer. The developer is interpreting and translating information into programs, which is a physical process affecting entropy? In studiot's example the questions must come from somewhere and some computation is required to deduce the next question. This may not be part of the example but I find it interesting; should one isolate the information about the grid and coin or try to look “globally” and include the information, computations etc that may be required to construct the correct questions? A concrete example; the questions about columns can stop once the coin is located. This is a reasonable algorithm but not the only possible one; a computer (or human) could continue to ask until the last column; it's less efficient but would work to locate the coin. Do the decision and computations behind that decision affect entropy? I don't have enough knowledge about physics to have an opinion. I agree, at least to some extent (it may depend on how I look at "all the information"). By examination we can extract / deduce a lot of information from the questions and answers but it is limited. The following may be rather obvious but I still find them interesting. 1: Given the first question “Is it in the first column?” and an assumption that the question is based on known facts we may say that the questioner had to know about the structure of the cells. Otherwise the initial question(s) would be formulated to deduce the organization of the cells? For instance the first question could be "Are the cells organised in a grid?" Or "Can I assign integers 1,2,...,n to the cells and ask if they contain a coin?" 2: There is no reason to continue to ask about the columns once the coin is located in one of the columns. This means that the number of columns can’t be deduced from the questions. 
The reasoning in 1 & 2 is as far as I can tell trivial for a human being but not trivial to program. (I'm travelling at the moment; may not be able to respond promptly) -

A complete answer would be very long and depends on local jurisdictions. In general: Government procurement or public procurement is the procurement of goods, services and works on behalf of a public authority, such as a government agency. To prevent fraud, waste, corruption, or local protectionism, the laws of most countries regulate government procurement to some extent. (paraphrased from wikipedia) Local policy over here: Public procurement must be efficient and legally certain and make use of market competition. It must also promote innovative solutions and take environmental and social considerations into account. (https://www.government.se/government-policy/public-procurement/) I note that this for some reason is posted in engineering so I'll avoid starting a political discussion.

-

How can information (Shannon) entropy decrease ?

Ghideon replied to studiot's topic in Computer Science

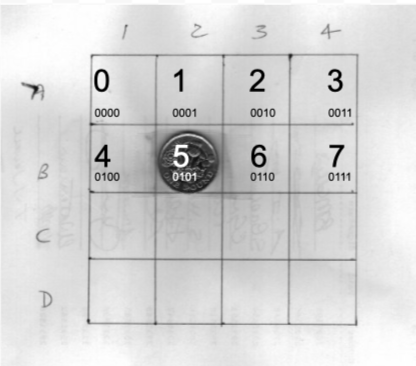

Edit: I x-posted with @studiot, I may have to edit my response after reading the post above. In this post I’ll separate my thoughts into different sections to clarify.

Binary encoding of the location vs the questions
Studiot's questions lead to the string 0101. The same string could also be found by square by square questions if the squares are numbered 0-15. "Is the coin in square 1,A?", "Is the coin in square 2,A?" and so on. The sixth square has number 5 decimal = 0101 binary. This way of doing it does not encode the yes/no answers into 1/0 as Studiot initially required but it happens to result in the same string. This was one of the things that confused me initially. Illustration:

Zero entropy
@joigus's calculations result in entropy=0. We also initially have the information that the coin is in the grid; there is no option “not in the grid”. Confirming that the coin is in the grid does not add information as far as I can tell; so entropy=0. I think we could claim that entropy=0 for any question since no question can change the information; the coin is in the grid and hence it will be found. Note that in this case we cannot answer where the coin is from the end result alone; zero information entropy does not allow for storage of a grid identifier.

Different paths and questions resulting in 0101
To arrive at the string 0101 while using binary search I think of something like this*:
1. Is the coin in the lower half of the grid? No (0)
2. Is the coin in the top left quadrant of the grid? Yes (1)
3. Is the coin in the first row of the top left quadrant? No (0)
4. Is the coin in the lower right corner of the top left quadrant? Yes (1)
Illustration, red entries correspond to "no"=0 and green means "yes"=1

The resulting string 0101 translates into a grid position. But it has a different meaning than the 0101 that results from studiot's initial questions. Just as in studiot's case, we need some additional information to be able to interpret 0101 into a grid square. As far as I can tell the questions in this binary search example follow the same pattern of decreasing entropy if we apply joigus's calculations. But the numbers will be different since a different number of options may be rejected.

*) Note that I deliberately construct the questions so that they result in the correct yes/no sequence. The approach though is general and could find the coin in any position of the grid. -
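The square numbering can be sketched in code. This assumes the squares are numbered 0-15 row by row (1,A = 0, 2,A = 1, ... as in the post) and uses a plain halving search over those numbers, which is a different set of questions than the quadrant questions listed above; `square_number` and `halving_answers` are illustrative names only.

```python
ROWS = "ABCD"


def square_number(column: int, row: str) -> int:
    """Number the 4 x 4 squares 0-15, row by row: 1,A -> 0, 2,A -> 1, ..."""
    return ROWS.index(row) * 4 + (column - 1)


def halving_answers(target: int, size: int = 16) -> str:
    """Ask 'is the number in the upper half of the remaining range?' until one square is left.

    'yes' is recorded as '1' and 'no' as '0'.
    """
    low, high = 0, size
    answers = ""
    while high - low > 1:
        mid = (low + high) // 2
        if target >= mid:
            answers += "1"
            low = mid
        else:
            answers += "0"
            high = mid
    return answers


n = square_number(2, "B")
print(n, format(n, "04b"), halving_answers(n))
```

With this numbering the yes/no answers of the halving search are exactly the binary digits of the square number, so square 2,B again gives 0101, but with yet another meaning for each digit.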

How can information (Shannon) entropy decrease ?

Ghideon replied to studiot's topic in Computer Science

Yes! +1 I may have misinterpreted the translation from questions/answers to binary string. To clarify: if the coin is in square 1A, what is the binary string for that position? My interpretation (that may be wrong!) is that the four answers in your initial example (square 2B) "no", "yes", "no", "yes" are translated to / encoded as "0" "1" "0" "1". This interpretation means that a coin in square 1A is found by: Is it in the first column? - Yes. Is it in the first row? - Yes. The resulting string is 11. As you see I (try to) encode the answers to the questions and not necessarily the number of the square. The nuances of your example make this discussion more interesting in my opinion; "information" and the entropy of that information may possibly have more than one interpretation. (Note 1: I may be overthinking this; if so I blame my day job / current profession) (Note 2: I have some other ideas but I'll post one line of thought at a time; otherwise there may be too much different stuff in each post) -
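This interpretation of the encoding can be written down as a small sketch. It assumes the questions go column by column and then row by row, stopping at the first "yes", and that a question is always asked until a "yes" is given (even for the last column or row); `encode_answers` and `encode_square` are illustrative names.

```python
ROWS = "ABCD"


def encode_answers(column: int, row: int) -> str:
    """Encode the answers for a coin at (column, row), both 1-based.

    Each 'no' becomes '0' and each 'yes' becomes '1'; questions along an
    axis stop at the first 'yes'.
    """
    def axis(position: int) -> str:
        return "0" * (position - 1) + "1"

    return axis(column) + axis(row)


def encode_square(name: str) -> str:
    """Encode a square written like '2B' (column number, row letter)."""
    column = int(name[0])
    row = ROWS.index(name[1]) + 1
    return encode_answers(column, row)


for square in ("1A", "2B", "4D"):
    print(square, encode_square(square))
```

Under these assumptions the strings have different lengths for different squares, which is one way to see that the answer string is not the same thing as the square number.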

How can information (Shannon) entropy decrease ?

Ghideon replied to studiot's topic in Computer Science

I've read the example again and noted something I find interesting. Initially it is known per the definition of the example that there is a 4 x 4 square board. In the final string 0101 this information is not present as far as I can tell, it could be column 2 & row 2 from any number of squares? This is not an error, I'm just curiously noting that the entropy of the string '0101' itself seems to differ from the result one gets from calculating the entropy step by step knowing the size of the board. A related note, @joigus as far as I can tell correctly determines that once the coin is found there is no option left; the search for the coin has only one outcome "coin found" and hence the entropy is zero. The string 0101 though, contains more information because it tells not only that the search terminated* it also tells where the coin is. Comments and corrections are welcome. *) '1' occurs twice in the string. -

How can information (Shannon) entropy decrease ?

Ghideon replied to studiot's topic in Computer Science

I agree that the result of computation is known. How do you retrieve the known result again later; does that require (some small) cost? Note: I'm not questioning your statement, just curious to understand it correctly for further reading. edit: Here are two papers that may be of interest for those following this thread: 1: Critical Remarks on Landauer’s principle of erasure–dissipation https://arxiv.org/pdf/1412.2166.pdf This paper discusses examples that as far as I can tell are related to @studiot's example of RAM. 2: Thermodynamic and Logical Reversibilities Revisited https://arxiv.org/pdf/1311.1886.pdf The second paper investigates erasure of memory: (I have not yet read both papers in detail) -

How can information (Shannon) entropy decrease ?

Ghideon replied to studiot's topic in Computer Science

Initial note: I do not have enough knowledge yet to provide an answer but hopefully enough to get a discussion going. As far as I know entropy is a property of a random variable's distribution; a measure of Shannon entropy is typically a snapshot value at a specific point in time, it is not dynamic. It is quite possible to create time-variant entropy by having a distribution that evolves with time. In this case entropy can increase or decrease depending on how the distribution is parameterised by time (t). I would have to read some more before providing an opinion on decreasing Shannon entropy and its connection to physical devices and thermodynamics. An example of what I mean: (source: https://en.wikipedia.org/wiki/Entropy_(information_theory)) In the example above some actions or computations are required to find the low entropy formula. How is thermodynamic entropy affected by that? This is a part I am not sure about yet. This wikipedia page and its references may be a starting point for adding more aspects to the discussion: https://en.wikipedia.org/wiki/Entropy_in_thermodynamics_and_information_theory
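A distribution parameterised by time can be sketched with a biased coin. The time dependence `p_heads` below is a made-up assumption chosen only so that the coin starts fair and drifts towards certainty; `binary_entropy` is the standard entropy of a two-outcome distribution.

```python
import math


def binary_entropy(p: float) -> float:
    """Shannon entropy in bits of a coin that shows heads with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def p_heads(t: float) -> float:
    """Hypothetical time dependence: fair coin at t=0, approaching p=1 as t grows."""
    return 0.5 + 0.5 * (1 - math.exp(-t))


for t in (0, 1, 2, 3):
    print(t, binary_entropy(p_heads(t)))
```

Each printed value is a snapshot of the entropy at one time t; with this choice of p(t) the snapshots decrease over time, and a different p(t) could just as well make them increase.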