Everything posted by Ghideon

-

Flood of Spam 12th July 2025: Why Would Someone Do That?

Haven't thought this through, but that policy makes them vulnerable to future attacks; an attacker picking off their customers until there's no business* left? Anyway, thanks for the swift action and Merry Christmas! *) Including both software and hosting

-

Can you assist me with GPTchat creating an image ?

Resizing the turbine after rotating, before submitting it to AI? Example: not 10 meters but bigger than before:

-

The speed of light involves acceleration and that even though light takes time to travel, we see real-time events.

I have provided you with an animation and reference images that, to the best of my ability, adhere to established scientific models.* Could you now supply an animation or a mathematical model of the theory or idea you are promoting here? *) I acknowledge that my version is a simplified representation and has not undergone any formal review.

-

Can you assist me with GPTchat creating an image ?

The photographer who took the photo that the model was trained on? More seriously, it is difficult to provide a generally applicable answer; it may differ between regions, product lines, license etc. Short answer: for the few services I am familiar with, you own the output the AI generates. Issues may arise if the AI outputs very similar images for several users or if the output includes other copyrighted material.

That is a good suggestion, since the model may have limited ability to analyse its own output. A prompt such as "rotate 90 degrees" may cause issues if the model does not grasp what to use as a reference.

@Externet this is a case where prompting has limited success; there may not be many similar constructions in the training data. If the AI tool allows it, you may experiment with uploading references, or with modifying the image in an image editor and then submitting it to the AI for "cleaning". Example: I rotated the turbine manually in an image editor. No need to be precise; there are artefacts. Submit the above image to the AI, prompting something like "just clean up this picture a little bit". Result: (If more control is required there are concepts such as ControlNet and similar, but that may require local installations, configuration etc. I can provide some links if you are interested.)

Note @Externet: from the title I got the impression you wanted to use ChatGPT; the cleaned-up image above was created using ChatGPT, model GPT 5.1.

-

The speed of light involves acceleration and that even though light takes time to travel, we see real-time events.

You need to be more specific. Also: your description of light and of Ole Rømer’s observations deviates significantly from the established sources. Could you explain what personal idea or hypothesis you are pursuing that leads you to this different interpretation?

-

The speed of light involves acceleration and that even though light takes time to travel, we see real-time events.

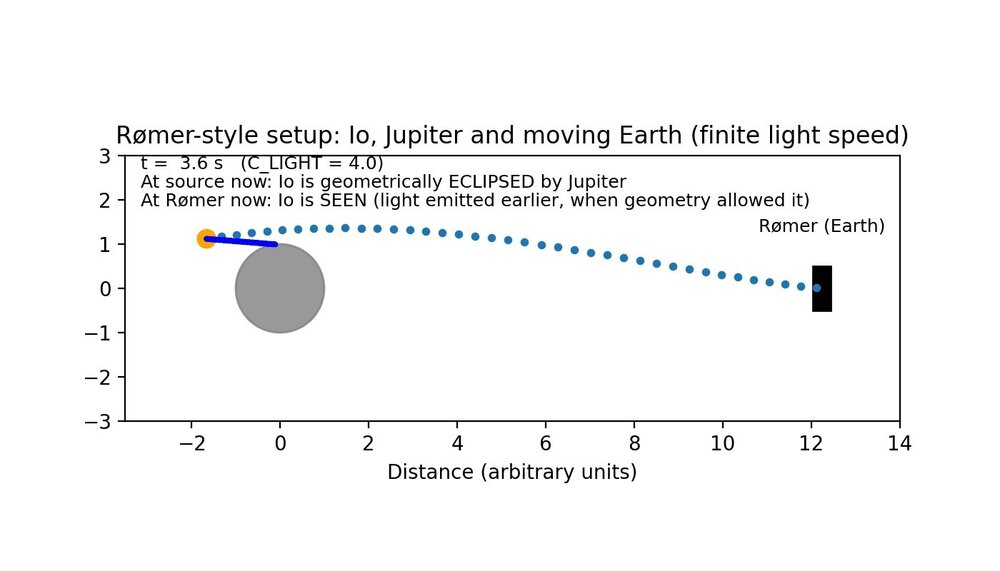

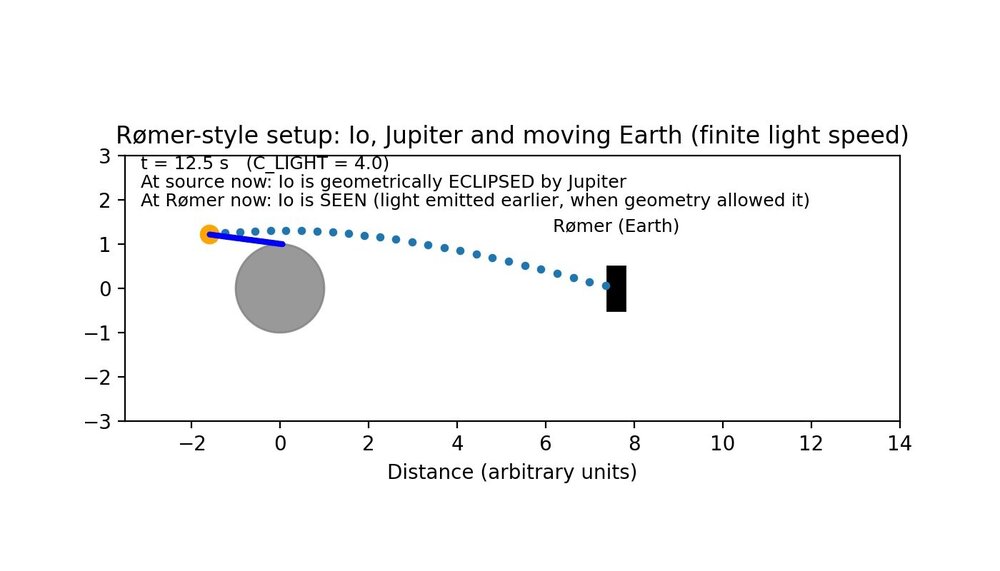

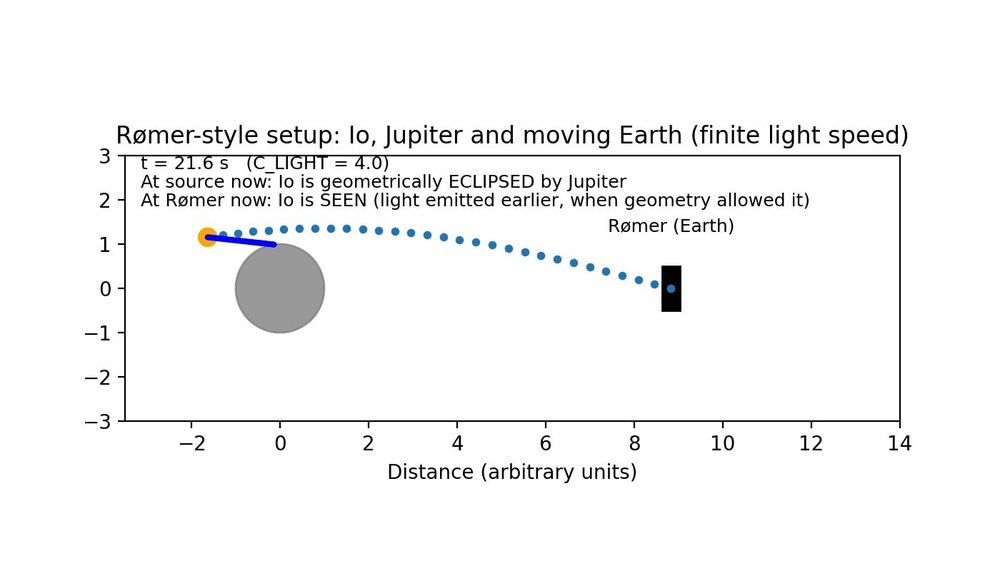

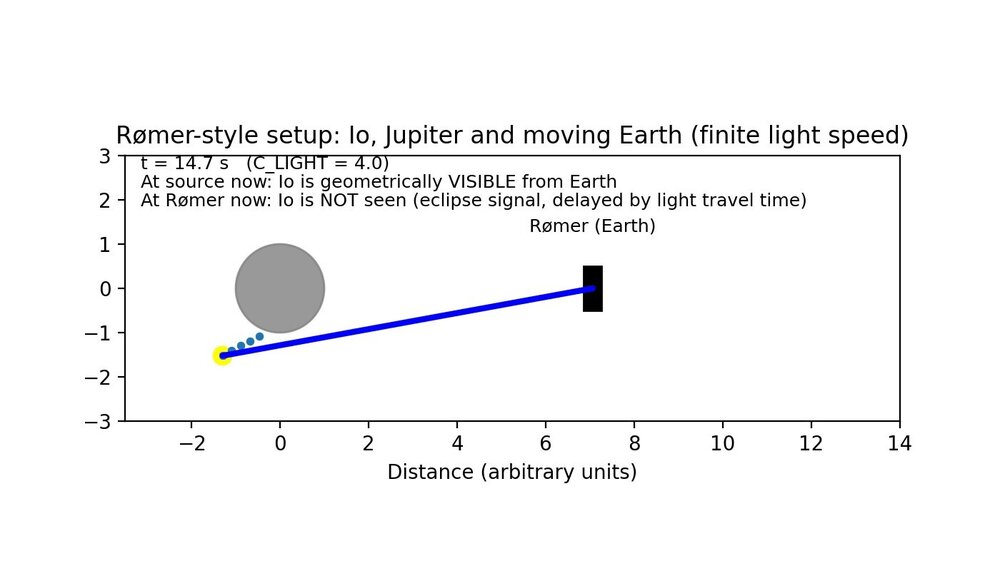

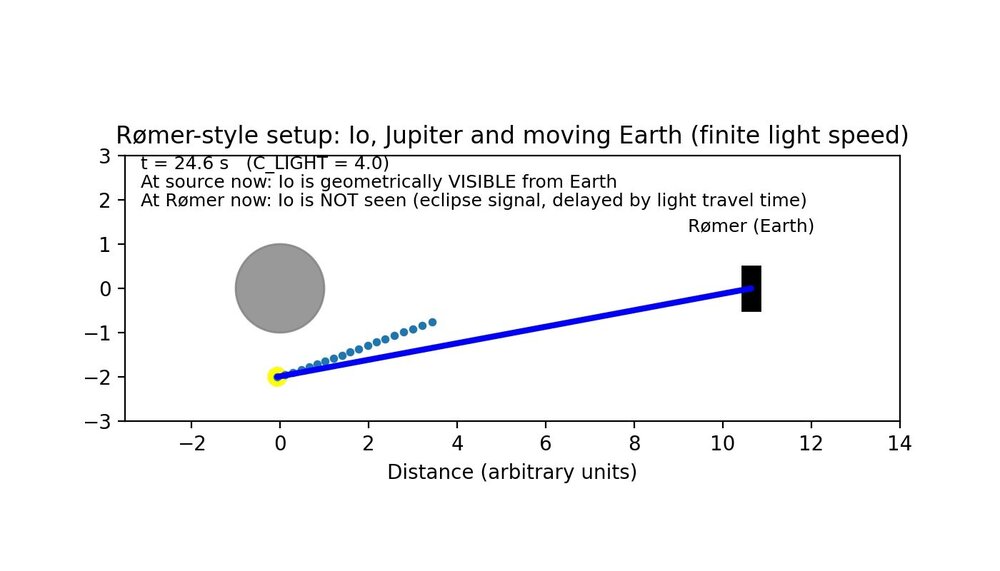

Using your words: "I have given you a map. That map is right in front of you." Look at the timestamps when Io moves behind Jupiter, the moment when there is no line of sight: the timestamps have a consistent interval; Io moves behind Jupiter every 9 seconds. Now look at the timestamps and intervals from Rømer's position. These frames are from the points where he observes that Io disappears, i.e. no light reaches him from Io. The timestamps do not have a consistent interval: the interval between the 1st and 2nd time is 8 seconds, and between the 2nd and 3rd time it is 9.9 seconds. This illustrates what @swansont and others already said:

-

The speed of light involves acceleration and that even though light takes time to travel, we see real-time events.

@jalaldn I have added the observer movement back and forth for you: Fair point; I kept it simple. New version posted.
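The effect of the observer moving back and forth can also be put in numbers, in the spirit of Rømer's reasoning. A minimal sketch, assuming circular coplanar orbits, Jupiter held fixed at 5.2 AU, and an interval between Io eclipses of about 1.769 days: the eclipses happen at strictly regular intervals at Jupiter, but each is seen on Earth only after the light travel time, so the observed intervals stretch while Earth recedes and shrink while it approaches. The function names and the fixed-Jupiter simplification are illustrative assumptions, not part of the animation.

```python
import math

C = 299_792_458.0             # speed of light, m/s
AU = 1.495978707e11           # astronomical unit, m
JUPITER = (5.2 * AU, 0.0)     # assumption: Jupiter held fixed 5.2 AU from the Sun
EARTH_YEAR = 365.25 * 86400   # Earth's orbital period, s
IO_PERIOD = 1.769 * 86400     # approximate interval between Io eclipses, s


def earth_position(t):
    """Earth on a circular 1 AU orbit, coplanar with Jupiter (simplification)."""
    angle = 2 * math.pi * t / EARTH_YEAR
    return AU * math.cos(angle), AU * math.sin(angle)


def observed_time(t_event):
    """Time at which light leaving Io at t_event reaches Earth.

    Solves t_obs = t_event + |Jupiter - Earth(t_obs)| / c by fixed-point
    iteration; this converges quickly because Earth's speed is far below c.
    """
    t_obs = t_event
    for _ in range(5):
        ex, ey = earth_position(t_obs)
        t_obs = t_event + math.hypot(JUPITER[0] - ex, JUPITER[1] - ey) / C
    return t_obs


def interval_deviations(t0, n_events=5):
    """Observed interval between successive eclipses minus the true interval, in s."""
    seen = [observed_time(t0 + k * IO_PERIOD) for k in range(n_events)]
    return [(b - a) - IO_PERIOD for a, b in zip(seen, seen[1:])]


receding = interval_deviations(EARTH_YEAR / 4)          # Earth moving away from Jupiter
approaching = interval_deviations(3 * EARTH_YEAR / 4)   # Earth moving towards Jupiter
print("receding:   ", [f"{d:+.1f} s" for d in receding])
print("approaching:", [f"{d:+.1f} s" for d in approaching])
```

In this toy model each interval is off by roughly 15 s near quadrature. Summed over half a year, from opposition to conjunction, the deviations add up to the extra light path across Earth's orbit, 2 AU / c, about 16.6 minutes.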

-

The speed of light involves acceleration and that even though light takes time to travel, we see real-time events.

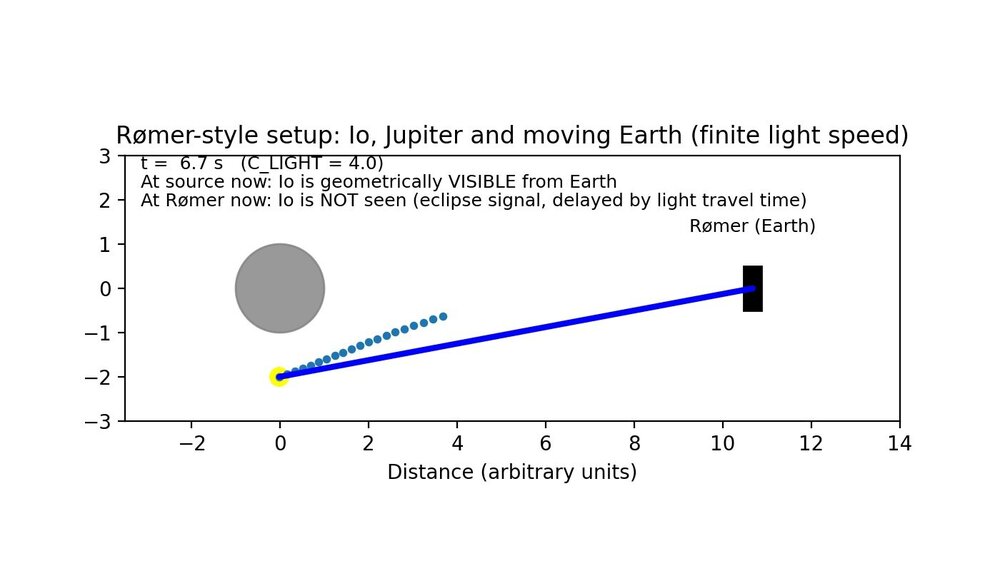

@jalaldn here is a crude animation; maybe this helps. The blue line illustrates the line of sight between a moon and a remote observer. The moving dots illustrate photons in transit. Photons hitting the back side of the planet are not included. Note that the blue line is for illustration only; there is of course no real-time "connection" between observer and moon. In case you missed it:

-

The speed of light involves acceleration and that even though light takes time to travel, we see real-time events.

Thanks! (I incorrectly assumed a connection to the fact that immersion and emergence cannot be observed for the same eclipse of Io.)

-

The speed of light involves acceleration and that even though light takes time to travel, we see real-time events.

I think your description of light and of Ole Rømer’s observations deviates significantly from the established sources. Could you explain what personal idea or hypothesis you are pursuing that leads you to this different interpretation?

-

The speed of light involves acceleration and that even though light takes time to travel, we see real-time events.

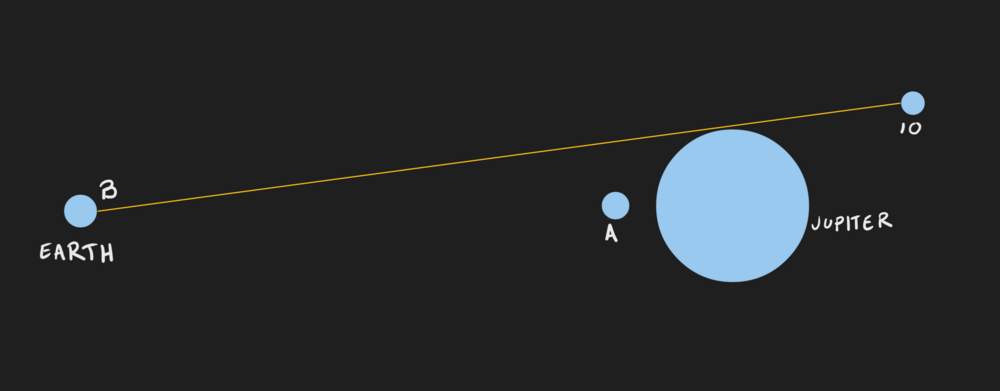

Not sure if this is a cause of your misunderstanding, but different observers at different distances would see a celestial object disappear and reappear at different times? Example: observer A near Jupiter and observer B on Earth would observe Io disappear at different times due to viewing angles. Here is a sketch:
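Separately from the viewing-angle effect in the sketch, the distance part can be put in numbers. A minimal sketch, assuming for simplicity that both lines of sight are cut at the same instant at Io (so geometry is ignored) and using a hypothetical distance of 1 million km for observer A; each observer sees the immersion only when the last unblocked light reaches them.

```python
C = 299_792_458.0      # speed of light, m/s
AU = 1.495978707e11    # astronomical unit, m


def seen_at(t_event, distance_m):
    """Time at which an observer distance_m away sees an event that happened at t_event."""
    return t_event + distance_m / C


t_immersion = 0.0          # reference time: line of sight to Io is cut (same for both, by assumption)
distance_a = 1.0e9         # hypothetical observer A near Jupiter (1 million km away)
distance_b = 4.2 * AU      # observer B on Earth, near opposition (about 4.2 AU)

t_a = seen_at(t_immersion, distance_a)
t_b = seen_at(t_immersion, distance_b)
print(f"A sees Io disappear after {t_a:.1f} s")
print(f"B sees Io disappear after {t_b / 60:.1f} min")
```

Observer A sees the event a few seconds after it happens, observer B more than half an hour after; neither sees it "in real time".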

-

What kind of drill bits are these ?

Are they all of the same length? Long bits may be referred to as "aircraft" drill bits.

-

Is there no test for a number that is Prime?

I reviewed your earlier thread on primes and this thread is a repetition; the proposals lack a coherent description and the few interpretable parts contradict established mathematics. These issues were already explained, in multiple ways, in the previous discussion but have not been incorporated into your reasoning. Your replies also continue to indicate fundamental confusion regarding factors, integers, and prime numbers; as mentioned in the earlier thread, you may find this resource helpful: Khan Academy – Factors and multiples
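For reference, the question in the thread title has a standard textbook answer that works entirely within the integers: trial division. A minimal sketch (deterministic but slow for large n; faster practical tests such as Miller-Rabin exist); `is_prime` is an illustrative name, not something from the thread:

```python
def is_prime(n: int) -> bool:
    """Trial division: n > 1 is prime iff no integer d with 2 <= d <= sqrt(n) divides n."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2          # 2 is the only even prime
    d = 3
    while d * d <= n:          # a composite n always has a factor d <= sqrt(n)
        if n % d == 0:
            return False
        d += 2                 # even divisors were already ruled out
    return True


print([n for n in range(30) if is_prime(n)])
```

Every step is an exact integer divisibility check, which is what any correct test for primality has to reduce to.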

-

Somebody

Idea: somebody ≠ Somebody. @Somebody (upper case) is a member who is no longer active; "somebody" (lower case) is a tag the software uses to denote an anonymous member. A moderator would (likely) see the user name.

-

Is there no test for a number that is Prime?

The ones cancel out.

-

A Methodological Challenge: Purging Physics of "Semantic Inflation"

The frequent use of quotation marks seems to weaken the precision and, ironically, introduce more "semantic inflation" rather than reduce it.

-

Is there no test for a number that is Prime?

The expression collapses to zero.

-

How Emotions Flow: And Whether Digital Systems Can Truly Connect to Consciousness?

Hello! What are the units of the things in your equation?

-

The Fundamental Interrelationships Model Part 2

Just a quick note @Nia20855: the quotes seem messed up; your answers are posted as quotes from studiot.

-

Is there no test for a number that is Prime?

What is the mathematical expression* of this error? Given the mathematical expression for the error, provide an expression for the range. *) Not a numerical or visual example in a graph

-

Is there no test for a number that is Prime?

Integer factorisation is defined within the integers (Z) and depends on integer divisibility. A relation that yields non-integers cannot represent or detect factors. Your formula ((pnp^2 / x) + x^2) / pnp yields non-integers (except for some trivial cases). Hence your equation is algebraically invalid as a method for finding primes or factors.
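The claim can be checked with exact rational arithmetic rather than floating point. A minimal sketch, assuming pnp is the integer under test and x runs over candidate integers (the symbol names come from the post; their intended meaning is an assumption). Algebraically the expression equals (pnp^2 + x^3) / (pnp · x).

```python
from fractions import Fraction


def expression(pnp: int, x: int) -> Fraction:
    """Evaluate ((pnp^2 / x) + x^2) / pnp exactly, without floating-point rounding."""
    return (Fraction(pnp * pnp, x) + x * x) / pnp


pnp = 15  # example composite: 15 = 3 * 5
for x in range(1, pnp + 1):
    value = expression(pnp, x)
    print(x, value, "integer" if value.denominator == 1 else "non-integer")
```

For pnp = 15 only x = pnp gives an integer (the trivial value 1 + pnp = 16), while the actual factors 3 and 5 give 28/5 and 14/3, so the result carries no information about divisibility.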

-

Is there no test for a number that is Prime?

Can you answer the question I asked above? How? Please show mathematically, step by step, what you mean. Use the symbols and derive the result (do not use any numerical example). Note the bold part.

-

An Experimental Report: Verifiable Sensory Curation and Subjective Awareness in a Large Language Model

From the paper you linked: "the rise of AI systems that can convincingly imitate human conversation will likely cause many people to believe that the systems they interact with are conscious." That is one aspect of the illusion I pointed out above. The paper also does not mention "Vedanta", so I do not see the connection to your ideas.

-

An Experimental Report: Verifiable Sensory Curation and Subjective Awareness in a Large Language Model

Then you understand how interaction with an LLM works: the model itself has no memory or state across calls? The illusion of dialogue comes from sending the full context with each request.
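A minimal sketch of that pattern, with a hypothetical `stateless_model` function standing in for a real LLM API (no real API is called, and the message format is only an assumption modelled on common chat interfaces). The model function keeps nothing between calls; the client stores the history and re-sends all of it every time.

```python
from typing import Dict, List

Message = Dict[str, str]


def stateless_model(messages: List[Message]) -> str:
    """Hypothetical stand-in for an LLM call: the reply depends only on `messages`.

    Nothing is stored between calls; this toy just reports how much context it got.
    """
    user_turns = [m["content"] for m in messages if m["role"] == "user"]
    return f"(received {len(messages)} messages; latest user turn: {user_turns[-1]!r})"


class ChatClient:
    """The client, not the model, keeps the conversation history."""

    def __init__(self, system_prompt: str) -> None:
        self.history: List[Message] = [{"role": "system", "content": system_prompt}]

    def send(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        # The full history is re-sent on every request; this is what creates
        # the impression that the model "remembers" earlier turns.
        reply = stateless_model(list(self.history))
        self.history.append({"role": "assistant", "content": reply})
        return reply


chat = ChatClient("You are a helpful assistant.")
first = chat.send("Hello")
second = chat.send("What did I just say?")
print(first)
print(second)
```

If the client sent only the latest message, the "dialogue" would disappear, because the model never had it in the first place.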

-

An Experimental Report: Verifiable Sensory Curation and Subjective Awareness in a Large Language Model

Is it a surprise that the LLM hallucinated and failed to follow the prompt? Also, the prompt example I posted is intended as direct input to an LLM, not to an LLM wrapped in software that inserts additional information (apart from system prompt(s)).
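The difference can be sketched as two request builders. This is a minimal illustration under assumed names (`raw_request`, `wrapped_request` and the injected text are hypothetical); real wrappers vary in what they insert and where, but the point is that the model's actual input is no longer just the prompt that was written.

```python
from typing import Dict, List

Message = Dict[str, str]


def raw_request(system_prompt: str, user_prompt: str) -> List[Message]:
    """What the model receives when the prompt is sent directly."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def wrapped_request(system_prompt: str, user_prompt: str, injected: List[str]) -> List[Message]:
    """What a hypothetical wrapper might send: extra material is added before the user's prompt."""
    messages = [{"role": "system", "content": system_prompt}]
    # e.g. retrieved documents, saved "memories" or tool output; the user may never see these
    messages += [{"role": "system", "content": text} for text in injected]
    messages.append({"role": "user", "content": user_prompt})
    return messages


prompt = "Answer only with YES or NO."
direct = raw_request("You are a helpful assistant.", prompt)
wrapped = wrapped_request("You are a helpful assistant.", prompt, ["User profile: likes long answers."])
print(len(direct), len(wrapped))
```

The user's prompt is identical in both cases, but the wrapped request also carries instructions that may conflict with it.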