Everything posted by Ghideon

-

An Experimental Report: Verifiable Sensory Curation and Subjective Awareness in a Large Language Model

Give me a definition of consciousness in a Turing machine and I'll create a test for it. When you discuss AI you are not talking about human intelligence, so I guess "consciousness" to you means some kind of artificial variant: a simulation or a model implemented in software and running on contemporary hardware. Note that there are several tests and definitions available for humans, such as the Glasgow Coma Scale. But that of course has nothing to do with artificial intelligence.

-

An Experimental Report: Verifiable Sensory Curation and Subjective Awareness in a Large Language Model

Here is a simple prompt I used, inspired by the autocomplete comment from @TheVat:

-

An Experimental Report: Verifiable Sensory Curation and Subjective Awareness in a Large Language Model

Ok. But what is the point? Any LLM prompted to simulate consciousness will generate text consistent with the prompt, but this is only probabilistic token prediction, not actual consciousness.

-

An Experimental Report: Verifiable Sensory Curation and Subjective Awareness in a Large Language Model

Yes, I tested. The quotes are output from ChatGPT. A locally installed DeepSeek-R1-Distill-Qwen-14B gives similar results with temperature 0.7.
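As a minimal sketch of what "probabilistic token prediction" and a temperature setting such as 0.7 mean, the Python below turns a list of scores (logits) into probabilities and samples one token from them. The vocabulary, the logit values and the function names are hypothetical and chosen only for illustration; a real model computes logits over a vocabulary of many thousands of tokens, and real inference stacks may apply further sampling filters not shown here.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Convert raw scores into a probability distribution.

    Dividing by a lower temperature sharpens the distribution toward the
    highest score; a higher temperature flattens it.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(tokens, logits, temperature=0.7, rng=random):
    """Pick one token at random, weighted by the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical candidates for the next word after a prompt, with made-up scores.
tokens = ["conscious", "not", "unclear"]
logits = [2.0, 1.0, 0.5]

print(softmax_with_temperature(logits, 0.7))
print(sample_next_token(tokens, logits, 0.7, random.Random(0)))
```

The point of the sketch: the output is whatever the weighted draw produces given the preceding text, so a prompt framed around consciousness shifts the scores toward consciousness-flavoured continuations without anything else changing.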

-

An Experimental Report: Verifiable Sensory Curation and Subjective Awareness in a Large Language Model

That's a good way to say it. Two examples to illustrate autocomplete in this context, @Prajna: First, assume we ask an LLM to complete the sentence "As we all know it is proven beyond doubt that the most advanced LLMs today are conscious, as shown in"; the LLM could then produce the following incorrect output: Of course, in reality, this is not proven at all; it's just the model echoing the framing of the question. Second example, a work of fiction: The only difference is the context, i.e. the prompts given before the input that asks the LLM to autocomplete.

-

An Experimental Report: Verifiable Sensory Curation and Subjective Awareness in a Large Language Model

What you describe is an interpretation of the model’s text that aligns with your view of consciousness, not evidence that the LLM itself has subjective awareness.

-

Is there no test for a number that is Prime?

How? Please show mathematically step by step what you mean. Use the symbols and derive the result. (Do not use any numerical example)

-

Is there no test for a number that is Prime?

I know of Euclid's method and its use in cryptography; I got curious since I don't see an obvious connection to trurl's approach ("multiplying by 5"). Can you elaborate on the relation to Euclid's method?

-

Is there no test for a number that is Prime?

So you do not know? Not even a rough estimate? RSA-260?

-

Is there no test for a number that is Prime?

In your example; how many numbers do you need to store?

-

Is there no test for a number that is Prime?

Can you present an example? How is Euclid used in your method? How is it related to your "multiplying by 5"?

-

Is there no test for a number that is Prime?

I'll try again, providing different answers since the questions are different.

There exist many algorithms to factor semiprimes. I have to assume that "reliable" means "deterministic", in contrast to probabilistic. An example is trial division: for any given semiprime n = p*q (p and q prime), trial division will always find the factors. It is 100% reliable; there are no semiprimes n for which the algorithm produces incorrect factors*. GNFS is another algorithm**.

A semiprime is the product of two primes. Finding semiprimes can be done by iterating through lists of prime numbers and multiplying them. That is again a different question.

Trial division and GNFS have no upper bounds; any finite semiprime can be factored by these algorithms. It does not matter if the semiprime is large; the algorithms will eventually terminate and produce the correct factors. Then, of course, there is the practical question: how do you define "large", and can large semiprimes be factored given the available resources (time and computing power)? Factoring a semiprime that is the product of two large primes of similar size is inefficient, and practically impossible if n is large enough.

How this is related to your idea about multiplication and working with 5*n I have no clue. The cartoon still explains the situation.

*) Assuming, of course, that the algorithm is executed correctly.
**) General Number Field Sieve; the most efficient currently known algorithm for factoring
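A minimal sketch of trial division in Python, to illustrate the "deterministic and 100% reliable, but slow" point above. The function name is made up for illustration, and GNFS is far too involved for a short example, so it is not shown. For a semiprime n = p*q the first divisor found is the smaller prime and the cofactor is the larger one.

```python
def trial_division(n):
    """Return (smallest prime factor of n, n // that factor).

    Always terminates with the correct answer for any integer n >= 2,
    but may need on the order of sqrt(n) divisions, which is hopeless
    at cryptographic sizes.
    """
    if n < 2:
        raise ValueError("n must be at least 2")
    if n % 2 == 0:
        return 2, n // 2
    d = 3
    while d * d <= n:       # a composite n must have a factor <= sqrt(n)
        if n % d == 0:
            return d, n // d
        d += 2              # only odd candidates after 2
    return n, 1             # no divisor up to sqrt(n): n itself is prime

print(trial_division(9991991))  # -> (2833, 3527)
```

The same loop works unchanged for any size of n; only the running time grows, roughly with sqrt(n), which is the practical limit described above.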

-

Is there no test for a number that is Prime?

What do you mean by find semiprimes?

-

Is there no test for a number that is Prime?

Your ideas about prime numbers may have some use in a work of fiction, poetry or similar. Or maybe in computer education: "find one major flaw in this attempt at an algorithm" (correct answer: the unnecessary multiplication)? In mathematics, where this is posted, I fail to find your ideas about primes useful. What if? As I said above, there are many reliable methods for factoring semiprimes.

-

An intelligent response from AI ??

Introduction to AI usage for legal professionals. Your example is interesting because it is easy to relate to and also opens up multiple lines of reasoning about generative AI.
1: We know an answer exists; the episode does exist and it has music. But it may or may not be included in the training data for the model. The music may be unreleased.
2: There are many different ways to search for the answer, depending on what one knows about the episode, the music or other details that allow a model to infer an answer. Multimodality comes into play: does the model infer the answer from text only, or also from audio and video?
3: If the model inference fails, what does it output? In the context of this thread (and in my presentation), is the response "intelligent", or at least useful?
4: Context: how does the level of detail provided to the model affect the answer?
Note: In this specific case I did a quick test and it failed to find the music, even with web search enabled. But I got a possibly useful explanation of why it failed* and a suggestion**.
*) Short extract: custom/production-library needle-drop (or bespoke cue) cleared for the episode but not commercially released.
**) Contact the music supervisors. (The AI got the names from the credits of the episode.)

-

Is there no test for a number that is Prime?

Not necessarily a bad analogy, but logically it works only if you already know that the original number (let's call it n) is prime, and hence have no need for a test. If n is a semiprime, the multiplication results in a larger composite, making the test slower than running the (hypothetical) algorithm on n. Also note that state-of-the-art algorithms for primality testing are extremely fast compared to factoring composites (or semiprimes). (I presented comparisons in an earlier post.) It would certainly be of interest in cryptography, since RSA, for instance, builds on the fact that testing for primality is easy compared to factoring.
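To make "testing for primality is easy compared to factoring" concrete, here is a minimal Miller-Rabin sketch in Python. It is a swapped-in illustration, not ECPP and not the method discussed in the thread: it decides prime/composite using only modular exponentiation on n itself and never produces a factor. With the twelve prime bases below, the answer is known to be exact for every n < 2**64; for larger n a True result only means "probable prime". The function name is illustrative.

```python
def is_probable_prime(n, bases=(2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37)):
    """Miller-Rabin primality test.

    Exact for n < 2**64 with the default bases; for larger n,
    True means "probable prime" and False means "definitely composite".
    """
    if n < 2:
        return False
    for p in bases:              # handle small primes and their multiples
        if n % p == 0:
            return n == p
    d, s = n - 1, 0              # write n - 1 as d * 2**s with d odd
    while d % 2 == 0:
        d //= 2
        s += 1
    for a in bases:
        x = pow(a, d, n)         # built-in modular exponentiation
        if x == 1 or x == n - 1:
            continue
        for _ in range(s - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False         # a is a witness: n is composite
    return True

print(is_probable_prime(9991991))  # -> False
print(is_probable_prime(2833))     # -> True
```

Note that the first call reports 9991991 as composite without revealing 2833 or 3527; finding those is the (much harder) factoring problem.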

-

An intelligent response from AI ??

May I ask what you used as input in your searches? (Curious; it may be a useful example in a work-related presentation.)

-

Is there no test for a number that is Prime?

The above makes sense. The rest does not. Take a look at the cartoon I posted.

-

Black hole Paradox Solved!

Hello! Maybe, it depends on what you would like help with. The title "Black hole Paradox Solved!" sounds like a claim rather than a question?

-

Is there no test for a number that is Prime?

Correct about what? "isn't always necessary" is wrong; "is never useful"* would be correct if referring to anything I said. Anyway, please demonstrate one case where making a composite number larger is necessary*. That would prove me wrong. Yes, that follows from the definition, so that is correct. The rest makes no sense.
*) In the context of this thread, primality testing, of course.

-

Is there no test for a number that is Prime?

Why 5? I assume it is a random prime, since you haven't described any method. But your multiplication means you have a larger number, with more digits than the number I gave you. That means you* are guaranteed to do more work. Your "method" does not make any sense. 3 is of course a factor of 5*4829995653, because 3 is a factor** of the original number 4829995653. Any sane method would find that without the unnecessary extra work of multiplication.
No. A prime number sieve does not include trial division. A sieve uses a systematic, deterministic method to eliminate composite (non-prime) numbers; you describe something completely different.
*) The same holds for a calculator or computer running an algorithm that follows the described idea.
**) Because I deliberately used 3 as a factor in the number, to prove my point.
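For contrast with trial division, here is a minimal Sieve of Eratosthenes in Python (the classic sieve; other sieves exist). It shows the "systematic, deterministic elimination" described above: it lists all primes up to a bound by crossing out multiples of each prime, and it never divides a candidate number by possible factors. The function name is illustrative. Note that a sieve enumerates primes up to a limit; it is not by itself a test for a single large n.

```python
def sieve_of_eratosthenes(limit):
    """Return all primes <= limit by crossing out multiples; no division is used."""
    if limit < 2:
        return []
    is_prime = [True] * (limit + 1)
    is_prime[0] = is_prime[1] = False
    p = 2
    while p * p <= limit:
        if is_prime[p]:
            # Smaller multiples of p were already crossed out by smaller primes,
            # so start at p*p and step by p.
            for multiple in range(p * p, limit + 1, p):
                is_prime[multiple] = False
        p += 1
    return [i for i, flag in enumerate(is_prime) if flag]

print(sieve_of_eratosthenes(30))  # -> [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
```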

-

Is there no test for a number that is Prime?

@Trurl When you apply your "method" or "idea" to test for primality, it looks like you start with multiplication by some number: given an initial number n, you multiply n by some number m to get n*m and then work from there, correct*? Here are three numbers: 4829995653, 8049992755 and 1220703125.
-What is the first number you multiply by when testing those for primality?
-Why do you multiply by that number? Is it a random choice?
*) The ideas make no sense to me, but let's see where this goes...

-

Is there no test for a number that is Prime?

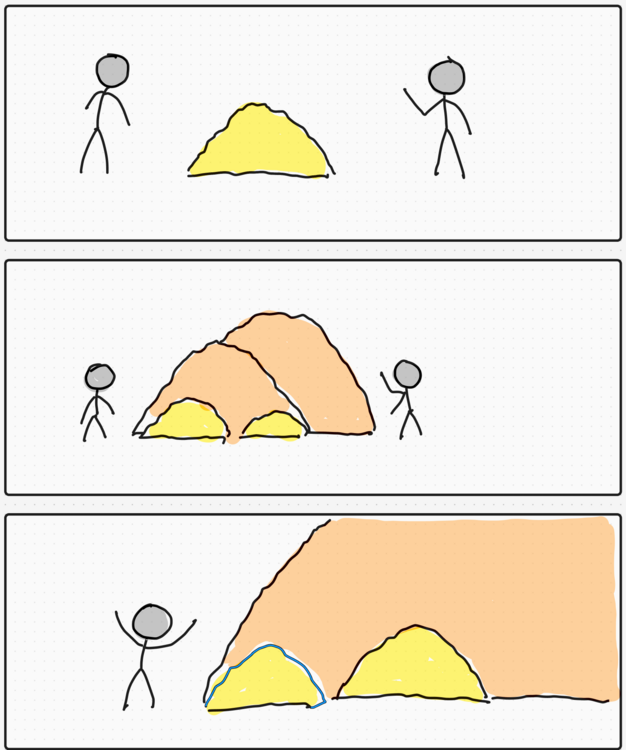

@Trurl You have been provided answers and arguments backed by references to scientific papers and by some quick, reproducible tests from a software engineering perspective. How come you keep ignoring this? I see no science in your answers. Instead of a reference to established computer science, let's try a cartoon this time:
Member: -I need to find a needle in this haystack... if there is one, I'm not sure.
Trurl: -I have an idea, let's add more hay. Once you have 5 times as much hay it should, logically, be easier to find the needle in the original haystack!
Member: -WTF??? How is this going to help??
Side note: (emphasis mine) Yes, that's where I come from: the practical implications of managing keys and certificates, increases in computing power available to adversaries, bugs in implementations, scientific progress, changing standards... I have an interest in the mathematics, but my main experience is from the engineering side. It's nice to see your posts; they help me see other perspectives.

-

Is there no test for a number that is Prime?

Quick question, am I misunderstanding something? Isn't 2833×3527=9991991?

-

Is there no test for a number that is Prime?

No, what you have described is not useful. Quite the opposite: factoring a semiprime n is harder than testing whether n is prime. For a realistic number in cryptography the difference is huge, many orders of magnitude. Example: factoring (verifying pseudo prime) RSA-240 took about 1000 core-years (https://arxiv.org/pdf/2006.06197). By comparison, using ECPP to prove that RSA-240 is not prime takes <1 s on a consumer laptop (MacBook Pro M1). The procedure I used to do a quick test to get a ballpark number: (installation on Apple can be done via Homebrew: brew install gmp-ecpp) For a more formal comparison we could check the time complexity of the GNFS vs ECPP algorithms. My angle is more practical engineering/cryptography/computing, so for any in-depth, mathematically rigorous treatment I would refer to @studiot.
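For the more formal comparison, the commonly cited heuristic running times can be sketched as follows, in standard L-notation. These are heuristic estimates, not proven bounds, and they ignore constants and implementation details; the ECPP figure is the one usually quoted for the fast variant (fastECPP).

```latex
% GNFS: sub-exponential in the number of digits of n
T_{\mathrm{GNFS}}(n) = L_n\!\left[\tfrac{1}{3}, \sqrt[3]{64/9}\,\right]
  = \exp\!\Big( \big(\sqrt[3]{64/9} + o(1)\big)\, (\ln n)^{1/3} (\ln \ln n)^{2/3} \Big)

% fastECPP: polynomial in the number of digits of n
T_{\mathrm{ECPP}}(n) = \tilde{O}\!\big( (\log n)^{4} \big)
```

Since log n is proportional to the number of digits, the GNFS expression grows faster than any fixed power of log n, which is consistent with the ballpark gap between core-years and seconds quoted above for RSA-240.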