Everything posted by Ghideon

-

Worldbuilding for a fantasy novel

Solar activity. According to Wikipedia, attempts to correlate weather and solar activity have had limited success, but it may still be useful as a starting point in a work of fiction. https://en.wikipedia.org/wiki/Solar_cycle You could change the 11 years to four years and make it have more impact on the planet. There are papers discussing a connection between the 11-year cycle and, for instance, tree rings. So in my opinion it would not be too unscientific to have a fictional solar system where harsh winters occur due to the solar cycle. https://en.wikipedia.org/wiki/Solar_cycle#cite_note-Luthardt2017-10

-

A mass can be be lifted with force less than its weight

You are correct. I did not intend to argue that the force never exceeds the static value, sorry if my post was not clear on that.

-

A mass can be be lifted with force less than its weight

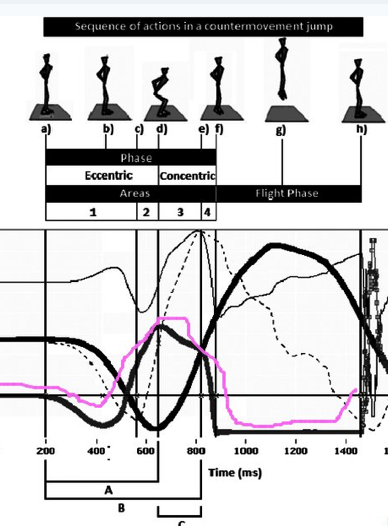

My point is: when during the attempt did they record the dip? I did as well, thanks for your comment that made me look again. I now draw the conclusion that the scale reading drops below the weight a second time, possibly matching @studiot's observation(?). The first time is approximately from a to c, while bending the knees to prepare, as mentioned before. The second time is near point f, just before leaving the scale on the way up. Assume the individual would not actually jump but instead reduce their force just enough that they end up standing on tiptoe. Then a flat segment would show the static value (the mass of the individual) at some point slightly later than f. I tried this myself, and unfortunately my digital bathroom scale locks the value once a static value is reached. My scale is not affected by movement once it has performed a measurement; I need to step off and wait for it to "reset". This has no impact on others' observations; I'm just noting that bathroom scales seem to be constructed to behave rather differently under the dynamic conditions described in this topic.

-

A mass can be be lifted with force less than its weight

Please have a look at my attempt at explaining the researchers' recording. My interpretation is that the scale reading is low while the body is moving down ("A" in picture). The individual in the measurement bends their knees fast, and that results in a low force pressing on the scale.

-

A mass can be be lifted with force less than its weight

@studiot Thanks for adding science to the thread. Letters a-h are from your image:

a: standing with straight legs without movement. Reading of scale is constant since the body is at rest.

b: bending knees. While doing this the centre of mass of the body is initially accelerating down; any force from the scale acting on the body is less than the weight of the body. The scale is reading less than the mass of the body.

b-c: tightening of leg muscles stops the downward motion to have the body stationary with bent legs. While the body is decelerating the scale reads more than the mass m.

c: starting the upwards push. There is no flat segment of the curve, so the body is not at rest with bent legs for any extended amount of time.

d: beginning to push/straighten legs to jump.

e: (approximately) heels leave the scale since legs are straight, pushing with calf muscles; force is lower than while using upper leg muscles.

f: the body is airborne.

h: landing.

There is a brief moment e-f where the scale would read less than the body mass while the body is standing on toes. The body is not at rest relative to the scale during that period of time. I am not an expert, my interpretation may be completely incorrect.
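The phases above can be put into numbers with Newton's second law: if the centre of mass accelerates upward with acceleration a, the scale pushes with N = m(g + a), and a scale calibrated at rest displays N/g. The sketch below is only an illustration of that relation. The 70 kg mass and the acceleration values per phase are made-up assumptions, not values read from the recorded curve.

```python
G = 9.81  # m/s^2, standard gravity


def scale_force(mass_kg: float, accel_up: float) -> float:
    """Force in newtons from the scale on the body.

    accel_up is the acceleration of the centre of mass,
    positive upward, negative downward.
    """
    return mass_kg * (G + accel_up)


def scale_display_kg(mass_kg: float, accel_up: float) -> float:
    """What a scale calibrated for a body at rest would show, in kg."""
    return scale_force(mass_kg, accel_up) / G


# Hypothetical numbers, chosen only to show the sign of the effect.
mass = 70.0
phases = [
    ("a: at rest", 0.0),
    ("b: knees bending, accelerating down", -3.0),
    ("b-c: downward motion being stopped", 4.0),
    ("d: pushing up to jump", 8.0),
    ("f: airborne (free fall)", -G),
]

for label, accel in phases:
    print(f"{label:40s} {scale_display_kg(mass, accel):6.1f} kg")
```

The reading is below the body mass whenever the centre of mass accelerates downward and above it whenever it accelerates upward; it equals the body mass only when the acceleration is zero.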

-

Applicability of Newton’s laws (split from A mass can be be lifted with force less than its weight)

In the context of this thread I believe that Newtonian mechanics, and specifically F=mg, will predict what happens when a mass m is put on a typical household scale. Flesh or wood or bones or quicksilver or gravestone or whatever will not have an effect*; Newtonian physics is applicable in this case. OP seems to argue that Newtonian physics fails to predict the force if the mass m consists of flesh. I fail to find any evidence in this thread or in any mainstream physics supporting that opinion. (Disclaimer: Of course not counting engineering limitations, using the scale outside of its limits, not keeping the mass stable or other out-of-context reasons.)
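A minimal sketch of that point, assuming a mass at rest on an ideal scale: the function computing the force takes only the mass as input, so there is nowhere for the material to enter. The 10 kg value and the list of materials are just illustrations.

```python
G = 9.81  # m/s^2, standard gravity


def static_scale_force(mass_kg: float) -> float:
    """Force in newtons on an ideal scale from a mass at rest (F = m*g).

    Note that the material is not a parameter.
    """
    return mass_kg * G


MASS_KG = 10.0  # hypothetical mass, same for every material
for material in ("flesh", "wood", "bone", "quicksilver", "gravestone"):
    print(f"{material:12s} {static_scale_force(MASS_KG):.1f} N")
```

Every line of the output shows the same force.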

-

A mass can be be lifted with force less than its weight

You might want to use the quote function. The above looks like support for awaterpon's claims; was that the intention? Can you elaborate: how does what you describe lead to awaterpon's claims about flesh (and bones) and physics?

-

A mass can be be lifted with force less than its weight

What are the properties of flesh (and bones), according to your idea, that make the difference?

-

A mass can be be lifted with force less than its weight

Let's try something else. What objects can replace the word "human" in your definition and still give exactly the same results as you claim? Can "human" be replaced by "dog", "humanoid robot" or something else?

-

Is the Fleming's left hand rule valid?

It seems like I do not share that view. I was curious about the posted idea and how someone would draw such conclusions about electromagnetism and as expected this thread contains no support for new scientific progress. But it was a good excuse to return to some books I have not touched for a while. (edit: I just found out that Bill Hayt and John Buck's Engineering Electromagnetics is updated and still in print)

-

Is the Fleming's left hand rule valid?

You are wrong, and you have stopped saying anything about physics, so it's just the relocation left. Which island have you selected? I asked for something supporting your claims or clarifying possible misunderstandings. The formulas and laws of physics in my books on electromechanical engineering seem to disagree with your statements and pictures.

-

Is the Fleming's left hand rule valid?

What theoretical model and experimental results would you like me to present as motivation? It seems reasonable to assume some motivation is required (those invited to propose nominees are sent confidential nomination forms* so full insight is not available to me at this point). Also note that the rules for the Nobel Prize in Physics require that the significance of achievements being recognised has been "tested by time"; "deserving a Nobel prize" means my trip will take place approximately 2041 or later. *)https://en.wikipedia.org/wiki/Nobel_Prize_in_Physics#Nomination_and_selection

-

Is the Fleming's left hand rule valid?

Your claims and explanations seem to be in disagreement with current mainstream physics, so a supporting reference would have been helpful. That would require (a lot) more evidence than presented so far.

-

A mass can be be lifted with force less than its weight

I would like to see a rigorous definition of "alternative weight" and "alternative mass".

-

Is the Fleming's left hand rule valid?

Can you provide a reference?

-

A mass can be be lifted with force less than its weight

Let's try another point: "effortless" walking is a psychological effect, not physics, in this case. An experience of "effortless" walking is not an indicator that mass is magically reduced. An example of your flawed logic: Assume a well-trained human A runs "effortlessly" at the same speed as a not so well-trained human B. A and B have the same mass. The fact that B struggles to keep up with A does not mean that B has some unspecified "alternative mass"; A and B have the same mass. B's struggle is more likely due to being less fit than A. Another example: After 10 km of running I do not run effortlessly anymore. How much "alternative weight" have I gained according to your idea? Have you investigated the possibility that humans experience "less effort" because humans benefit from not having to feel like walking requires a lot of effort?

-

Today I Learned

Today I learned about "tall poppy syndrome". According to Wikipedia*: Thanks @beecee Maybe related to (but not the same as) Law of Jante** ? I need to do some reading. *) https://en.wikipedia.org/wiki/Tall_poppy_syndrome **) https://en.wikipedia.org/wiki/Law_of_Jante

-

A mass can be be lifted with force less than its weight

That got me thinking; maybe this helps OP: In the video below the lecturer finds the force exerted on the Achilles tendon when a person is standing on tiptoe. The video will guide you through a simple model of the foot and lower leg and apply basic mathematics and physics. Talking of Archimedes; maybe OP can try standing on tiptoe partially submerged in water and report back....
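For readers who want numbers, here is a rough sketch of a common textbook lever model of the foot on tiptoe. It is not necessarily the model used in the video. All forces are treated as vertical, the foot is in static equilibrium, and the lever arms (12 cm from ankle joint to ball of foot, 5 cm from ankle joint to heel) and the 70 kg mass are assumed values for illustration only.

```python
G = 9.81  # m/s^2, standard gravity


def tiptoe_forces(mass_kg, ankle_to_ball_m, ankle_to_heel_m, feet=2):
    """Static lever model of one foot on tiptoe, all forces vertical.

    Returns (ground_force, tendon_force, tibia_force) in newtons.
    Torque balance about the ankle joint:
        tendon_force * ankle_to_heel_m = ground_force * ankle_to_ball_m
    Vertical force balance on the foot:
        tibia_force = tendon_force + ground_force
    """
    ground_force = mass_kg * G / feet  # the floor carries the weight
    tendon_force = ground_force * ankle_to_ball_m / ankle_to_heel_m
    tibia_force = tendon_force + ground_force
    return ground_force, tendon_force, tibia_force


# Hypothetical person standing on one foot; lever arms are assumptions.
ground, tendon, tibia = tiptoe_forces(70.0, 0.12, 0.05, feet=1)
print(f"ground: {ground:.0f} N, tendon: {tendon:.0f} N, tibia: {tibia:.0f} N")
```

In this model the tendon force is larger than the body weight, while the force on the floor (or on a scale) is still exactly the weight. Standing on tiptoe changes the internal forces, not what the scale has to support.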

-

Extended Field Theory

My main problem with this specific debate is the incoherent questions, unsupported claims and undefined concepts... ... add conspiracy tales to the list of issues.

-

Extended Field Theory

I am curious about ideas about physics; that's why I entered this discussion. And a discussion requires some common ground, for instance a common set of definitions or some current knowledge as a starting point. That seems to be out of reach in this case. (Don't try to tell me how I will argue regarding current ideas. Of course the current mainstream is not "final"; there will be progress. I'm currently working in computer science; progress, evaluating new good ideas and adaptation to scientific progress are unavoidable.)

-

A mass can be be lifted with force less than its weight

That seems like an attempt at describing something*. It is not a definition. *) It looks like a description of a phenomenon that simply does not exist.

-

A mass can be be lifted with force less than its weight

How is “alternative mass” defined?

-

Extended Field Theory

"Radiant matter" as described above is not connected to the magnetic monopoles in the 2009 experiment* and will not be part of an explanation of the possible violation of LU mentioned in this thread. Does that answer your "what if" question? Nothing moves faster than the speed of light in vacuum, so I guess Tesla was wrong in this case. Or maybe the book is not drawing correct conclusions from Tesla's work. Or it is a work of fiction; it does not describe something that can be reproduced. *)https://www.eurekalert.org/pub_releases/2009-09/haog-mmd090209.php

-

A mass can be be lifted with force less than its weight

If I understand your claim correctly: 1: A person with a mass of 57 kg could stand on their toes on a surface that would collapse under a force greater than 80 N. 2: It follows that when I carry a person with a mass of 57 kg and the person is standing on tiptoe on my shoulders, then I would be using 80 N of force to support that person's weight and not 570 N*. As the above is obviously not how physics works, can you provide a picture showing what you mean so misunderstandings can be sorted out? *) Approximating g=10 in this discussion
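The arithmetic behind those numbers, as a small sketch using the same approximation g = 10 m/s^2. The function names are mine; the 57 kg and 80 N values are the ones from the claim as I understand it.

```python
G_APPROX = 10.0  # m/s^2, the approximation used in this discussion


def weight_newtons(mass_kg: float, g: float = G_APPROX) -> float:
    """Force needed to support a mass at rest, W = m*g."""
    return mass_kg * g


def supported_mass_kg(force_n: float, g: float = G_APPROX) -> float:
    """Mass that a given force can hold at rest, m = F/g."""
    return force_n / g


mass = 57.0           # kg, mass of the person in the claim
claimed_force = 80.0  # N, force stated in the claim

print(f"Force needed to support {mass:.0f} kg: {weight_newtons(mass):.0f} N")
print(f"Mass that {claimed_force:.0f} N can support: {supported_mass_kg(claimed_force):.0f} kg")
```

With these numbers, 80 N holds up about 8 kg at rest, while a 57 kg person needs 570 N regardless of whether they stand flat-footed or on tiptoe.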

-

Falling into a black hole "paradox"

Ok, thanks for the reply! I'll read through the rest of the posts to see where my understanding is wrong. Hovering is not what I meant, but watching the feet continuously, including the brief moment when the feet have passed the event horizon and the eyes have not.