Everything posted by CharonY

-

US Constitution Article 1, Sections 9 and 10 removed from government website

Because we are in the midst of a dumb and dumber version of 1984?

-

Why infants and children died at a horrific rate in the Middle Ages?

Maybe, but I am not entirely sure. The issue here is whether there are populations with sufficiently different famine rates that we could spot potential selection. And obviously finding mechanistic evidence is going to be tricky, as metabolic or other adaptations to famine are going to be more diffuse. One of the elements that folks have focused on is our propensity for obesity (i.e. storing fat). There is the hypothesis that most of humanity is hunger-adapted in the first place. Now, there are populations who appear to struggle more with obesity, and the idea was that populations with very high rates of obesity might carry genomic signatures of extreme adaptation to famine. However, these arguments were made when there was a rush for the human genome and a desire to look at things through an overly genetic lens, with the expectation that high-throughput GWAS would yield terrific insights (the "thrifty genotype" was one such example). I have not followed up on that, but I am aware that a couple of areas of inquiry were pretty much just-so stories with little supporting evidence. And I think folks have become a bit more skeptical and more aware that genetic information has to be contextualized.

-

Why infants and children died at a horrific rate in the Middle Ages?

It depends a bit on the specific question. For example, do we see evidence of genomic adaptation to certain conditions? If so, then I would say yes. The issue is of course that we cannot say for certain, as we cannot really replay the past. Sethoflagos mentioned malaria, and the evidence we have is that a) alleles for sickle cell are more common in areas where malaria is found and b) there is a plausible mechanism for resistance against this pathogen: heterozygous carriers are less likely to die from malaria (but homozygous folks, who therefore have sickle cell anemia, are at increased risk). Now, sickle cell is a very popular example for teaching human genetics, for a number of reasons and from several perspectives, but there are many other variants associated with malaria. These include other HBB variants that are not associated with sickle cell disease, mutations that cause other blood disorders (e.g. alpha thalassemia, which is caused by dysregulation of HBA, IIRC), but also mutations in a chemokine receptor resulting in the absence of the Duffy antigen, which Plasmodium vivax (one of the parasites causing malaria) uses to enter cells. I am sure there are more that I cannot remember anymore.

For other diseases it is a bit trickier, as few have exerted such long, persistent and very strong selective pressure on human populations. For example, there is the CCR5-Delta32 deletion mutation, which confers resistance to HIV. It arose very recently and has reached very high frequencies in Europe, suggesting that it is under positive selection. However, despite the nice narrative regarding HIV resistance, HIV has not been around long enough to put enough pressure on the population to reach the measured frequencies. I.e. it can be tricky to figure out the true origin of genomic signatures. But there is also a more direct story.
We carry quite a few viral sequences in our genome, and while there can be many different reasons for their presence, one hypothesis is that some might have protective properties, for example by competing with viral copies, inhibiting synthesis of the "correct" product or otherwise interfering with viral functions.
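Both the sickle cell and the CCR5 arguments are about how fast, and how far, selection can move an allele frequency. A minimal Python sketch of the standard one-locus selection model makes that concrete, assuming random mating, an infinitely large population and no drift or migration. The function names and all fitness values below are made up for illustration and are not estimates for malaria or HIV. With heterozygote advantage (genotype fitnesses 1 - s, 1, 1 - t) the protective allele settles at s / (s + t) instead of spreading to fixation; with plain positive selection, even a sizeable advantage needs many generations to take a rare allele to common.

```python
def next_freq(q: float, w_aa: float, w_ab: float, w_bb: float) -> float:
    """One generation of selection at a single locus with alleles A and B.

    q is the frequency of allele B; w_aa, w_ab, w_bb are the relative fitnesses
    of the AA, AB and BB genotypes (random mating, infinite population, no drift).
    """
    p = 1.0 - q
    w_bar = p * p * w_aa + 2 * p * q * w_ab + q * q * w_bb  # mean fitness
    return (p * q * w_ab + q * q * w_bb) / w_bar


def simulate(q0: float, w_aa: float, w_ab: float, w_bb: float, generations: int) -> float:
    """Apply next_freq for a fixed number of generations and return the final q."""
    q = q0
    for _ in range(generations):
        q = next_freq(q, w_aa, w_ab, w_bb)
    return q


def heterozygote_equilibrium(s: float, t: float) -> float:
    """Stable frequency of B when fitnesses are AA = 1 - s, AB = 1, BB = 1 - t."""
    return s / (s + t)


def generations_to_reach(q0, target, w_aa, w_ab, w_bb, max_generations=100_000):
    """Count generations until q reaches target; None if it never does within the cap."""
    q = q0
    for gen in range(1, max_generations + 1):
        q = next_freq(q, w_aa, w_ab, w_bb)
        if q >= target:
            return gen
    return None


if __name__ == "__main__":
    # Sickle-cell-style heterozygote advantage with hypothetical costs:
    # s = 0.1 (malaria cost to AA), t = 0.8 (anemia cost to BB).
    # Starting low or high, q settles near s / (s + t), about 0.111.
    s, t = 0.1, 0.8
    print(heterozygote_equilibrium(s, t))
    print(simulate(0.01, 1 - s, 1.0, 1 - t, 500))
    print(simulate(0.5, 1 - s, 1.0, 1 - t, 500))

    # Positive selection with a hypothetical 5% advantage per copy of B:
    # going from 0.1% to 10% takes on the order of 100 generations.
    print(generations_to_reach(0.001, 0.10, 1.0, 1.05, 1.10))

    # Even a very strong hypothetical advantage barely moves a rare allele in 2 generations.
    print(simulate(0.001, 1.0, 1.5, 2.0, 2))
```

The last two lines carry the CCR5 point: HIV has only been a widespread human pathogen for a few decades, i.e. one or two human generations, which in this simple model is far too little time for selection alone to produce common allele frequencies.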

-

Secrets of the world

Moderator Note: Nonsense like this does not belong on a science forum, and in fact this gets it locked (and trashed).

-

Why infants and children died at a horrific rate in the Middle Ages?

It is one of the examples I love to show in class. Also, you will occasionally come across Y. pestis in soil samples (probably also one of the reasons why you find it in ground squirrels, prairie dogs and other ground dwellers).

-

How can we inhabit Mars ?

Honestly, that just sounds like wishful thinking, something that is quite popular among the techbro crowd: real issues will somehow be magically fixed by technological advances, while at the same time very current and actual challenges (ranging from undervaccination to rising antibiotic resistance) are ignored. That is not to say that those feats are impossible, but there are no guarantees, either.

-

What is this CRISPR-Cas9? I’m reading this quote right?

That is a very unlikely approach. For the most part, we need our genes, and just deleting one (or even knocking it down) is normally not good news. It would also not use the actual benefit of CRISPR/Cas9, which is targeted editing (rather than a full knockout or just a knockdown). What it can be used for is to introduce functional alleles of genes to counter whatever genetic issues there might be. Another idea is to directly edit stem cells to replace the harmful variant with a non-harmful one, but that can be a bit tricky. Usually you only get part of the reproducing cells (typically using a lentiviral vector), so it is often better to introduce something that can withstand the genetic issue. This is the case for the sickle cell disease treatment where a modified hemoglobin is introduced.

-

How can we inhabit Mars ?

Also, we don't really have a good idea of human developmental changes at lower gravity, or of the long-term impact on health. What we know comes mostly from a few individuals, few of whom stayed longer than a year. There are also changes in the immune system (which are likely only partially related to gravity) and potentially other rewiring going on. But at this point much of it is just speculative. It is possible that the main problem would be readjusting to higher g, but there is also the risk that our bodies will experience issues that are not easy to adapt to.

-

Why infants and children died at a horrific rate in the Middle Ages?

Oh, there are absolutely cases where certain exposures in farming contexts can provide some form of immunity, especially to zoonotic diseases (though conversely, agricultural workers also have a somewhat higher risk of getting sick). But I think TheVat might have been referring to the so-called hygiene hypothesis (not to be confused with Semmelweis' hand hygiene concept). The idea there is that childhood exposure boosts the immune system and provides overall better immune responses later in life. This is somewhat less well grounded. There is some potential link to things like autoimmune disease and allergies (especially asthma), but regarding net infections the jury is very much still out (at least to my knowledge). The main potential mechanism is low-level exposure to certain pathogens, which then provides immunity and/or cross-immunity. But for the most part it seems that, with few specific exceptions, there really is no broad immunity to be gained from agricultural lifestyles, and especially in poorer countries the above balance (protection against vs acquiring zoonotic diseases) seems to point to a higher, rather than a lower, incidence of zoonotic infections.

-

Why infants and children died at a horrific rate in the Middle Ages?

I think that is somewhat more speculative, or at least I have not seen any particularly convincing data to this effect. What is known, however, is that many urban areas (but also some rural ones, later during industrialization) were heavily exposed to e.g. air, heavy metal and other pollution. These factors are known to adversely affect child development.

-

Why infants and children died at a horrific rate in the Middle Ages?

And just to reinforce a point made earlier: besides antibiotics, vaccines are probably the single largest contribution to population health. Treatments may or may not work, but they certainly do not prevent the spread of infectious diseases, and even if treated, infections can still lead to significant health burdens. Vaccines, on the other hand, lower the overall risk of adverse health outcomes. Even just considering the last 50 years, during which infant mortality has been cut down massively, vaccines have saved the lives of on the order of 140 million children (each gaining an average of 66 life years).
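To put the two figures from that post together, here is a back-of-the-envelope multiplication as a minimal Python sketch. It uses only the rounded numbers quoted in the post, so the total is equally rough; the constant and function names are just illustrative.

```python
# Rounded figures as quoted in the post (order-of-magnitude estimates, not new data).
CHILDREN_SAVED = 140_000_000   # lives saved by vaccines over roughly the last 50 years
LIFE_YEARS_PER_LIFE = 66       # average life years gained per life saved


def total_life_years(lives: int, years_per_life: float) -> float:
    """Total life years gained = lives saved x average life years gained per life."""
    return lives * years_per_life


total = total_life_years(CHILDREN_SAVED, LIFE_YEARS_PER_LIFE)
print(f"{total / 1e9:.2f} billion life years")  # prints "9.24 billion life years"
```

So the quoted figures amount to roughly nine billion life years gained.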

-

Why infants and children died at a horrific rate in the Middle Ages?

Absolutely. I forget where I read it, but I believe that in the 1800s child mortality at birth was higher in urban centres (in rural areas, midwives were doing the work), and the hygiene measures following Semmelweis' findings cut those deaths down markedly.

-

Why infants and children died at a horrific rate in the Middle Ages?

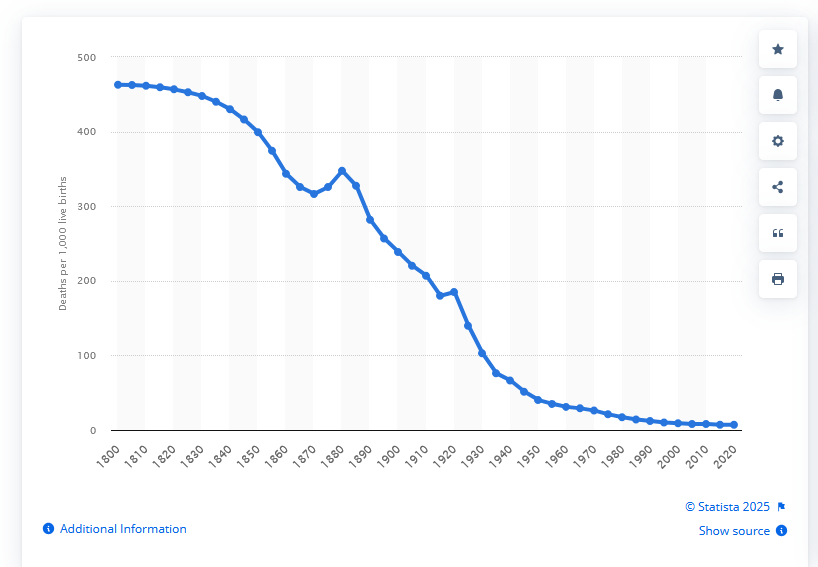

Essentially, you can extend the graphs from 1800 all the way back to the Middle Ages, as seen in the second link. In the US, for example, child mortality hovered around 45% through the first half of the 1800s. So rather than asking why child mortality was so high in the Middle Ages, one could argue that our "normal" child mortality is around 50% and things only started to change around 200 years ago. I.e. modern times are the anomaly in our history (though the US is trying really hard to reverse that).

-

An appeal to help advance the research on gut microbiome/fecal microbiota transplantation in the US.

No, let me try to clarify this:

- Already approved use -> normal usage, no additional paperwork.
- Off-label use -> no "formal" paperwork, but the physician needs to demonstrate that they have at least informed consent from the patient.
- Obtaining approval for a not-yet-approved use -> Investigational New Drug (IND) application, typically an Investigator IND if the physician is administering and monitoring the treatment.
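Since these three cases form a small decision table, here is a minimal Python sketch restating them. The enum and function names are hypothetical, and the logic is a deliberate simplification of the three cases above, not a model of the actual FDA rules.

```python
from enum import Enum


class UseCategory(Enum):
    """Hypothetical labels for the three situations listed above."""
    APPROVED = "already approved use"
    OFF_LABEL = "off-label use"
    SEEKING_APPROVAL = "obtaining approval for a not-yet-approved use"


def paperwork_needed(category: UseCategory, physician_runs_treatment: bool = True) -> str:
    """Map each category to the simplified requirement described above."""
    if category is UseCategory.APPROVED:
        return "none beyond normal usage"
    if category is UseCategory.OFF_LABEL:
        # No formal filing, but the physician must be able to show informed consent.
        return "no formal paperwork; documented informed consent"
    # Getting a new use approved goes through an Investigational New Drug application.
    if physician_runs_treatment:
        return "Investigator IND"
    # The summary above does not say which kind of IND applies otherwise.
    return "IND"


for cat in UseCategory:
    print(f"{cat.value}: {paperwork_needed(cat)}")
```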

-

An appeal to help advance the research on gut microbiome/fecal microbiota transplantation in the US.

Sorry, I wasn't clear. It was just to re-affirm that obtaining FDA (or equivalent) approval for a new use would require an IND, whereas the other pathway (i.e. without formal approval) would fall under typical off-label use. I.e. the physician has to be able to defend the use, but does not require formal approval.

-

Death Map United States.

I am sure the food culture plays a role, but it seems France is extra-different. Italy is only a bit lower than the UK (~28% for the UK vs ~20% for Italy, or something around that), and Spain a bit lower than that (maybe 19%). Greece is on the high end with 33% (similar to much of the Balkans/Eastern Europe). The Netherlands, which is similar to Germany in many regards, is below Spain. And then there is France, with around 10%.

-

Death Map United States.

Overweight is a general problem, but with regard to obesity the UK is doing a bit better, especially compared to the US, but also (slightly surprisingly to me, tbh) somewhat in European comparison. I believe France has always done well in that regard.

-

An appeal to help advance the research on gut microbiome/fecal microbiota transplantation in the US.

In addition, there is a rectal treatment (Rebyota). Both are human microbiome-derived. An IND would be required to get other uses approved, but otherwise it should not be different from "regular" off-label use. So using FDA-approved treatments is obviously the easiest option, due to the standardization and available safety information.

Now if you want to use stool banks, the issue is more complicated, as they would fall under biologic drug regulations, which differ between jurisdictions. At minimum there must be documentation of the screening procedure and outcomes, but there is a bit of a regulatory gap at the FDA (or there was a decade or so ago), which basically allowed FDA enforcement discretion. It really only covered Clostridium difficile treatment, as they considered that more of an emergency situation and the risk/benefit was rather obvious there. But for other uses, and given the relative paucity of trial data, I would assume an IND or equivalent pathway would be necessary (AFAIK the FDA and similar agencies have only issued statements regarding C. difficile and have not mentioned other uses, but I may be wrong). I am vaguely aware of the struggle of OpenBiome and the fight over regulations (though I think they have submitted INDs by now).

In Europe the legal framework is flexible, meaning that member states have significant discretion in how they regulate FMT. From what I have heard, it is a bit of a mess how FMTs are classified, with differing monitoring criteria (e.g. donor selection vs microbial composition). From second-hand info, it seems that at least in some countries it would be easier to conduct research studies on FMTs, but we never really got into details.

-

A number of people say Trump is not listening to the courts?

Well, the check there is the Senate, which has to consent to the appointment. The founding fathers seem to have envisioned a system where the executive, legislative and judicial branches all participated in governance. They did not expect that folks would simply cede their powers so willingly.

-

Death Map United States.

That is true in most parts of the world, but I believe that a lack of calories is limited to a relatively small subset of people in Western countries (typically elderly and homeless folks). For most others, it is more about getting enough nutrients from the calories consumed.

-

AI's Tools Lying to it. What are the implications?

Somewhat unrelated, but I have started seeing that in Google searches, the AI summary keeps pushing random social media posts (e.g. from Reddit) as part of its answers. It seems we are putting a lot of effort into ways to make us dumber.

-

Death Map United States.

Interesting. I did not know that, but it seems intuitive. Only partially related, but that also reminds me that in rural areas access to grocery stores is often poor, and, perhaps slightly counter-intuitively, obesity rates are generally higher in rural areas.

-

A number of people say Trump is not listening to the courts?

Another element is to look at the points of failure and perhaps consider a redesign.

-

Death Map United States.

That has also been brought up as an issue linked to the rise of dual-income families, where time for household chores, including cooking, is diminished.

-

Death Map United States.

While the US has a poor diet, especially among low-income folks, it is a bit of an overgeneralization to state that these things are absent in Europe. Processed foods have been making inroads for some time, unfortunately, and obesity as well as diabetes rates in many European countries have been climbing. There is still a 10-point gap (30ish vs 40ish) between the high-obesity European countries and the US, but it is wrong to assume that there is nothing to worry about.

And this is why video is a horrible way to spread information but a great vehicle for misinformation. There are many farmers' markets in the US, and regular grocery stores always have a vegetable section with loose vegetables.

Not necessarily, though; it boils down to how much is being bought. In fact, buying directly from farmers can be more expensive as an individual, as you do not have the negotiating power that, say, McDonald's has.