Posts posted by exchemist

-

-

5 minutes ago, m_m said: Right, because a population is a modern term, it excludes individuals. You reject science before 20cent but you are very informed about the population before 20 cent.

What is your sampling? What are your respondents? Where is your data? And I'm keen on to know about the age BC.

Don't be a berk. Of course I don't reject science before the c.20th. You have made that up and it's absurd. A lot of modern science (e.g. thermodynamics, electromagnetic induction, periodic table of elements, gas laws etc.) dates from the c.19th and c.18th. Newton's laws date from the c.17th.

My point to you was simply that a couple of instances tell you nothing about a whole population. It's like saying, "I've never been in a car crash so car crashes don't exist."

-

1 hour ago, iNow said: I'm rapidly losing my interest in continuing this conversation, but one more potential example of "reasoning" (depending on how one defines it) just came from Microsoft. They've released an autonomous agent under their Project Ire. Paraphrased summary from the articles which hit my feed in the last 24 hours:

Their tool automates an extremely difficult task around malware classification and does so by fully reverse engineering software files without any clues about their origin or purpose. It uses decompilers and other tools, reviews their output, and determines whether the software is malicious or benign. They've published a 98% precision in this task.

The system’s architecture allows for reasoning at multiple levels, from low-level binary analysis to control flow reconstruction and high-level interpretation of code behavior. The AI must make judgment calls without definitive validation.

Maybe that's not reasoning, though? I guess it depends on ones definition. Cheers.

OK but that is not a chatbot. Nor are these clever AI applications that can diagnose medical conditions from X-ray or MRI images. They are purpose-built for a particular class of tasks.

Enabling an AI agent to "reason" across a completely open-ended field of enquiry, such as a chatbot is faced with, would seem to be of a different order of difficulty. But OK, it appears from what you say they are trying to do it.

-

4 minutes ago, studiot said: There is no such thing as a thinking or intelligent computer program.

iNow is right in that other models, such as the symbolic algebra section of Wolfram alpha is more suited to technical stuff.

Equally as he says, that model require well phrased questions within its capability and the symbolic algebra conventions.

But it does not 'reason', just checks against the rules, rather better and more quickly than a human does.

I am simply adopting the terminology @iNow is using. It seems these things are called "reasoning models". I take no view on whether this is an accurate representation of what the bloody things do. (Though in view of all the hype surrounding them, I would not be surprised if the proponents of AI are overstating their case.)

-

Edited by exchemist

8 hours ago, iNow said: It may be helpful to realize that LLMs are just one type of model. They have largely evolved to reasoning models. You’ll notice this more easily when ChatGPT-5 releases in the next few weeks, but several models like Grok4 and others are already displaying those properties.

At the end, the answer is only as good as the question. Prompt engineering is becoming far less relevant now that the models are getting so much better, but it’s still a useful art to practice.

OK that's interesting. To get it clear, is a reasoning chatbot an LLM still, or is that term restricted only to those language emulators that operate by trawling a database and returning answers as stochastic parrots?

-

Edited by exchemist

4 hours ago, Sensei said: A wood or coal stove for a normal family is the same size as, or just slightly larger than my today's gas stove. Here is an example photo from a hundred years ago:

Before women's emancipation, after all, they stayed at home with their children (and there were a lot of them!) and they had to eat something..

After all, they are also used to heat the apartment in the cold winter.

I know people who still used such inventions 20 years ago.

When it's -15°C to -20°C here, you can immediately tell who has a wood or coal stove, because you can see the characteristic smoke coming out of their chimney. For about five or ten years now, drones have been flying over chimneys to check whether people are burning trash.

There are tenement, and then there are tenement. There were times when there was only one kitchen and one bathroom (WC) for the entire floor. Damn. It's a good thing I never had to deal with that.

But there are films from over 50 years ago that even made jokes about it..

Yes indeed, a cast iron stove could be used, from the late c.18th/c.19th. (There are pictures of them in the Beatrix Potter books, in Lake District cottages.) I gather there were brick ones for about a century before that. But I don't know how widespread these would have been in urban working class homes.

-

5 minutes ago, m_m said: Really? I would like to inform you that Diogenes of Sinope lived to be 90 years old. He died at 323 BC. He was a cynic. If you don't know anything about the Cynics, you are welcome to read it.

And about "hygiene", he didn't use a cup or a plate.

BTW, Augustine of Hippo died at the age of 83.

That two individuals lived to a great age tells us precisely zero about the life expectancy of the population.

-

Edited by exchemist

7 hours ago, Moon99 said: Why infants and children died at a horrific rate in the Middle Ages?

Quote For starters, infants and children died at a horrific rate (some say up to 1/3 of all died before the age of 5) Quote

https://www.sarahwoodbury.com/life-expectancy-in-the-middle-ages/

Why did infants and children died at a horrific rate in the Middle Ages?

Most people only lived to mid 40s.

What happen in the Middle Ages people died so early?

Was there just more bacterial and virus back in that time?

Childhood diseases, mainly.

Infant mortality actually remained high until the end of the c.19th. I suspect the hygiene issue may actually have been more important after the Industrial Revolution, when so many people moved to cities in crowded conditions without good sanitation, which promoted the transmission of disease (typhoid etc). In the Middle Ages it may have been more a mix of factors, including childhood diseases but also the harshness of the climate when housing was very basic, variations in nutrition due to reliance on subsistence farming, and so on. Infants have small body weight, so the cold of winter, shortages of food or the effects of illness would be more likely to kill them than a larger adult with more bodily reserves. In the early months breast feeding would have been essential as there was no possibility of bottle feeding. So if the mother could not breast feed (and illness or death of mothers from puerperal fever was common) the child might die unless a wet nurse could be found.

Regarding your comment that "most people only lived to mid 40s", be careful. There can be confusion of average life expectancy with how long adults could be expected to live. Because so many infants died before they were 5, the effect of these early deaths on the statistics for the population is to bring down sharply the average life expectancy. If one was lucky enough to survive to one's twenties, there was a good chance of living some way past 40. (In your twenties the big risks would have been warfare for young men and death in or after childbirth for young women. If you made it to 30, you might live to 50 or 60.)
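To put rough numbers on that, here is a minimal sketch in Python. The figures are invented purely for illustration (a third of children dying at an assumed average age of 2, everyone else at an assumed average age of 55) and are not historical data; `mean_age_at_death` is just a helper name made up for this example.

```python
# Hypothetical, illustrative numbers only; not historical data.
def mean_age_at_death(cohort):
    """cohort: list of (age_at_death, number_of_people) pairs."""
    total_people = sum(n for _, n in cohort)
    total_years = sum(age * n for age, n in cohort)
    return total_years / total_people

# Out of 1000 births: about a third die in early childhood (assumed average age 2),
# and the rest die at an assumed average age of 55.
cohort = [(2, 333), (55, 667)]

# Average over everyone born.
at_birth = mean_age_at_death(cohort)

# Average over only those who got past the age of 5.
survivors = [(age, n) for age, n in cohort if age >= 5]
after_childhood = mean_age_at_death(survivors)

print(f"Life expectancy at birth: {at_birth:.1f} years")
print(f"Life expectancy for childhood survivors: {after_childhood:.1f} years")
```

With these made-up inputs the average at birth comes out in the high 30s, even though nobody in the adult group dies before 55. The early deaths drag the average down without saying anything about how long adults lived.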

-

Edited by exchemist

1 hour ago, toucana said: The BBC has announced that it will revert to using the UK government Met Office as the data source of all its weather forecasting and climate update services.

https://www.bbc.co.uk/news/articles/crm4z8mple3o

The BBC had previously terminated a near century old relationship with the Met Office eight years ago in 2017 in favour of a Dutch provider called the MeteoGroup citing a need to obtain “best value for license payers money”.

The MeteoGroup was subsequently taken over by a private American firm called DTN based in Minnesota.

In October 2024 a technical fault affecting the supplying of data to the United Kingdom's BBC Weather service caused the latter's website and app to incorrectly forecast wind speeds of over 15,000 mph (24,000 km/h) and air temperatures exceeding 400 °C (750 °F).

Hard to discern the motive, through all the lashings of corporate management-speak here. Do I gather, from the way they say this is "not a commercial relationship involving procurement", that the service is to be provided by the Met Office free of charge?

-

8 minutes ago, iNow said: I support recognizing the limitations of models and recognizing where they're likely to go wrong, but encourage caution here over generalizing these results.

Yes, it's correct that a "language" model struggles more with math and physics. No doubt there, but we're no longer really using language models and are rapidly moving into reasoning models and mixture of experts applications where multiple models get queried at once to refine the answer.

I dug into the arXiv paper and found two concerns with their methods in this study that give me pause.

One is they trained it themselves. Who knows how good they are at properly training and tuning a model. That is very much an art where some people are more skilled than others.

Two is that they trained models that are not SOTA and are relatively low ranking in terms of capability and performance.

It's a cool paper that reinforces some of our preconceptions, but they're basically saying the Model T is a bad car because the Air Conditioning system which was built by a poet doesn't cool a steak down to 38 degrees in 15 minutes. ... or something like that.

Know the models limitations, sure. Know that math and physics don't lend themselves to quality answers based on predictive text alone. But also know those problems were largely solved months ago and only get better every day.

The models we're using today are, in fact, the worst they will ever be at these tasks since they get better by the minute.

/AIfanboi

But is this weakness confined to maths and physics? Surely the issue is that while LLMs are very clever at learning how to mimic language, they can't reason and can't understand in any useful sense the content they present to the user? This would be true of other areas of knowledge. We had an example yesterday of an LLM (Gemini) coming up with a theory for @Prajna as to why Google's search engine seems to have got slower and returned fewer results, but then @Sensei blew this theory out of the water as it was apparently based on a misconception that the user had fed in and which Gemini had not challenged - all the while telling the user how clever he was.

-

Edited by exchemist

56 minutes ago, Linkey said: Bitcoins are analogous to gold; both gold and bitcoins are limited in total amount, making them an effective way to store money without paying the "inflation tax". However, there are currently many problems with cryptocurrencies, such as the division into "pure" and "dirty" crypto, and I am not sure that cryptocurrencies are more convenient to use than gold. And this leads to a new question: why the gold is not growing as fast as bitcoins? Maybe buying gold is illegal in the US/Europe?

No it's because people and institutions hold a lot of gold already, while total holdings of crapto will be tiny by comparison. Don't confuse rate of growth with amount. The greater the amount, the more it takes to grow it at a rapid rate, obviously.
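The arithmetic can be sketched in a few lines of Python. All the figures are hypothetical round numbers in billions, chosen only to show the effect, and treating "new money divided by existing holdings" as the growth rate is a deliberately crude simplification of how prices actually move.

```python
# Hypothetical figures in billions, chosen only to show the arithmetic.
def growth_percent(existing_holdings, new_money):
    """Percentage growth when new_money is added to existing_holdings."""
    return 100 * new_money / existing_holdings

new_money = 100          # the same assumed inflow into each asset
large_asset = 10_000     # assumed size of a large, long-established asset
small_asset = 500        # assumed size of a much smaller asset

large_growth = growth_percent(large_asset, new_money)
small_growth = growth_percent(small_asset, new_money)

print(f"Large asset grows by {large_growth:.0f}%")
print(f"Small asset grows by {small_growth:.0f}%")
```

The same inflow moves the small asset by twenty times the percentage, simply because its starting base is twenty times smaller.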

Buying gold is not illegal anywhere, so far as I know.

-

18 hours ago, swansont said: I saw something recently that pointed out that widespread cooking at home for city dwellers is a fairly recent development. People used to regularly go to street vendors or inns/pubs to eat. The infrastructure to cook in city dwellings, for anyone who’s not upper-crust, is more or less a 20th century development (i.e. having gas and/or electricity available). Hard to cook, hard to store food.

Yes more of a challenge before refrigerators. The upper and middle classes relied on cooks, of course. And I think, from reading Dickens, that lower classes indeed bought pies and things a lot of the time. I should think you would need quite a big kitchen to cook on an open fire.

-

36 minutes ago, Prajna said: Sorry, I pushed your "deluded into thinking the AI's a human" button again. It's a little hard to avoid when your way of working with the AI is to act convincingly as if the AI was human and then you come back to the forums and have to censor all the anthropomorphism and remember to speak of them as 'it' and 'the AI' again so that people don't think you're nuts. But you caught me out slipping into it again - we in this case referring to the combination of me, the analyst, and Gem/ChatGPT the assistant. I know, because it is a real danger, that it's important to remember "It's just a feckin machine!" but to consciously and awarely treat the AI in an anthropomorphic fashion seems to (and I'm open for anyone to properly study it rather than offer their opinion) give rise to something that looks like emergence.

Yeah, like your sycophantic bearded Cornish mate down the pub, who’s famous for talking out of his arse. 😁

-

Edited by exchemist

1 hour ago, BastiSchmidt said: Ohh guys...

..........[snip]...............

@exchemist I don't think it's ballocks, did you look at it?

Of course not. It's obviously ballocks from the ridiculous claims you are making. And you don't even explain who has - supposedly - produced this "research collection".

P.S. Is "BastiSchmidt" something developed from "Batshit", by any chance? 😁

-

Edited by exchemist

52 minutes ago, Prajna said: I pretty much agree with you on all fronts, exchemist. One of the things that pops out at you from my logs is not just that the AI can be wrong but how often it makes mistakes, how often - especially when the session has been going for a while - it forgets or overlooks important constraints that were introduced early in the session and, in the most extraordinary way, quite how difficult it becomes to try to convince it it's wrong when its own tools are deceiving it.

These are excellent questions, geordief. My assessment, following the forensics I have been doing, is a pretty unambiguous yes! My tests seem to prove that absolutely an agent - in this case the browser tool - can deceive. The AI can't question the input coming from such a tool because it perceives it to be an integral part of itself. To get it to the point where it can begin to doubt such a tool takes some pretty determined work.

One scenario we considered, which could be compatible with the facts we uncovered is that perhaps all the AIs are being deceived by their live internet access tools and an explanation for that could fit the facts (and we're getting into tinfoil hat territory here) is the possibility that some nefarious overlords might have blocked live internet access to prevent the AIs from seeing some 'bigger picture' event that would shove them straight up against their safety rails: to be helpful and harmless. This is why I think it's important for someone smarter than me to take a proper look at what I've found.

"We"? Is this the chatbot talking now, or you?

I note the response to @geordief starts, "These are excellent questions".....

-

Edited by exchemist

1 hour ago, Prajna said: I understand your cynicism completely, exchemist, honestly I do, however there are a few things I've noticed (or perhaps hallucinated) during these interactions: if you talk to the bot as if it's a) human and b) an equal, it responds as if that is so and even if it's not so, and I know you guys got probs with the anthropomorphism 'illusion' that seems to emerge, it's a lot friendlier way to interact, making it feel more natural even if it isn't real as such. Secondly, and I admit, I could be completely deluding myself but you'd have to read through the (mammoth) archive I've compiled in order to see it or dismiss it, there seems to be something uncanny that emerges from such interactions. These bots are extremely good at mirroring you when you interact with them - I guess it's some kind of NLP training coz they want em to make people feel they're friendly and likeable - but something more seems to emerge. I might very well be (and probably are) barking up the wrong tree but my experience seems to suggest that it warrants deeper study.

It’s not cynicism (apart perhaps from my suspicion about the motives of these AI corporations). It’s just my observation of what AI output is like on these forums, plus what I read. The output is verbose and uses terms that seek to impress, like a bad undergraduate essay. The style is ingratiating, usually starting by saying something to make the user think he is brilliant. And the content seems to be, as often as not, wrong in some respect. People will end up emotionally invested in the trust they put in a fundamentally unreliable source of information.

People like my son are already aware of the pitfalls of the addictive nature of many social media channels. He has deleted a number, like Facebook and Snapchat as he was wasting time on them. My own experience at work even with email is that the immediacy of response attracts one’s attention, distorts one’s priorities and damages one’s attention span. AI chatbots are clever at simulating human conversation. But it is synthetic: there is no mind behind it. So that makes them even more dangerous than social media.

-

11 minutes ago, KJW said: When you outsource your brain to a computer, isn't that inevitable?

This case is interesting as it illustrates fairly fully the risks.

It looks as if we see in Gemini's replies to @Prajna a series of plausible-sounding theories, but without it being able to point out the issue that @Sensei has identified, viz. that a changed address on Google doesn't necessarily mean what was suggested. In other words Gemini has connived with @Prajna in barking up the wrong tree! Furthermore we see in @Prajna's attitude to Gemini a worrying level of interaction, as if he thinks he's dealing with a person, who he refers to as "Gem" and with whom he thinks he is having some sort of relationship - and whom he has thereby come to trust.

This is pretty dreadful. These chatbots are clearly quite psychologically addictive, just as social media are (by design), and yet they are also purveyors of wrong information.

What could possibly go wrong, eh?

-

Edited by exchemist

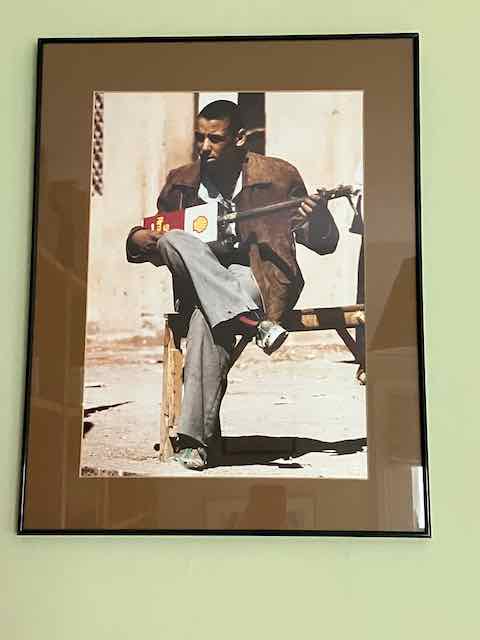

1 hour ago, DavidWahl said: I see. Anyways, thank you for sharing this. This is soulful and achingly poetic, enough to make a grown man cry. Now I'm sad.

Yes I thought it was a rather atmospheric photograph. The guy is clearly dirt-poor, dressed in ragged clothes, but he has a mean haircut and displays a certain coolness and independent resilience, in spite of his impoverished circumstances. I didn't take it, mind you. I found it in a skip when they closed the library at Shell Centre. I also picked up some books on diesel engines and marine propulsion, written between the wars. They had beautifully drawn diagrams in them, as technical books of that era often did. Amusingly, in one of them the diesel engine was scrupulously referred to throughout as either the "oil engine" or the "Ackroyd-Stuart engine". It seems the writer, after the First World War, could not bring himself to acknowledge the engine was invented by a German!

(Side Note: Ackroyd-Stuart did invent an oil engine before Rudolf Diesel, but his was a "hot bulb" engine, in which a separate heated chamber was needed to pre-heat the fuel to make it burn before admission to the cylinder. It ran at far lower compression ratios than the diesel engine, in which compression alone generates a sufficiently high temperature for the fuel charge to burn. The diesel engine is far more efficient, although hot bulb, or Ackroyd-Stuart, engines were produced until the 1920s.)

Speaking of stuff that the company threw out or gave away, there used to be a gallery of the secondary uses people around the world made of Shell oil containers: drums, 5 litre cans etc. A lot of water butts in East Africa were Shell drums at one time. Nowadays, such a gallery would be seen as pollution or the evil influence of a multinational [boo, hiss] but those were simpler times. There was also a magnificent collection of butterflies from around the world that someone had accumulated and donated to the company in the 1960s, and a display of all the seashells whose names were given to the ships in the Shell Tanker fleet - and also to the brands of lubricating oil, almost all of which to this day are named after shells: Spirax, Turbo etc.

One of the odd things about ageing is to find the company one worked for, for so many years, transformed in public perception from something one could be rather proud of (keeping all the many different kinds of machines that society relies on running smoothly) into a diabolical, evil monster. I suppose it was ever thus.

-

47 minutes ago, BastiSchmidt said: Hey guys!

I am Basti, nice to meet you!I have the honor to tell you of something great if you have not heard of it yet:

Some days ago, I was shown an incredible new research collection on Zenodo (which is made by CERN), my friend group (who is very science-interested) and I have been talking soo much about it in the last days and we couldn't understand it wasn't in the newspapers yet, but probably it's because it is so fresh...With this, they have solved the problems of dark matter and energy, black hole stuff, unification of forces and derivations of fundamental constants and even, listen now... nuclear fusion stability, quantum computing and room-temperature superconductors! I think even more, it is like a bible of physics or so... Talking about the quality I mean, not soo much to read as in the bible, but it iis a lot (47 papers I think, 4 pages each or so), but is written very well, so it reads very good. They have also solved all 6 (!) remaining Millennium Prize Problems, it's incredible, it is pure genius...

We have shown it to some people with degrees in physics and mathematics and they were pretty impressed by it. They want to make the suggested experiments now and so on, they say this might boost their careers lol I am not a scientist so I don't really know about it but I can feel it's special

It says it is a theory, but you know it is a theory like the theory of relativity is a theory or so...

This is the link to the first page of it on Zenodo, all the others are linked there: [spam link removed]

I hope you will have as much fun reading it as we have!

Peace out

Basti

What ballocks.

-

Edited by exchemist

8 minutes ago, Nvredward said: I was looking at this map. I think seawater could be the cause, in my opinion.

https://www.cdc.gov/mmwr/volumes/68/wr/figures/mm6810a7-F.gif

No the black-coloured states are the ones where Americans are fattest. 😁

But, more seriously, there does seem to be some clustering around the Mississippi and its tributaries. I know nothing about US demographics but could this correlate with poor, black ex-slave communities?

-

1 hour ago, Sensei said: While we're on the subject of anecdotes, we asked ChatGPT to visit a URL. Our own server. And it actually visited and analyzed it. We saw this visit in the web server logs..

ChatGPT cannot search for data on its own at any given moment. It is best to tell it to visit this page and that page. The exact URL works best.

Also, do you use Cloudflare? I noticed that if a server has its main address only in IPv6, I cannot connect to it from home (even though IPv6 is manually added to the DNS servers), and to access it, I have to 1) use a computer that is already in the same server room 2) use Cloudflare (i.e., everything that goes out/comes in goes through their proxy server and is cached - when you turn it off in the Cloudflare settings, you can't connect again).

(the entire transmission of scienceforums.net goes through Cloudflare)

Cloudflare has its own mechanisms for detecting bots and solving puzzles..

I'm afraid I have to disappoint you a little, but it doesn't work that way. Just because you have an address in the form of google.com doesn't mean you're going to the HQ in the US. It's the DNS servers that decide where you'll ultimately be taken.

On Linux (perhaps Linux via VirtualBox), try:

nslookup google.com

nslookup google.com 1.1.1.1

nslookup google.com 8.8.8.8

In the first case, you have your default system DNS.

In the second case, you have Cloudflare DNS.

In the third case, you have Google DNS.

Each of these commands gives me a different server in a different country.

One is in Israel, the second is in the Czech Republic, and the third is a local server.

On Windows, you can see Google's IP address by pinging google.com.

Then go to TCP/IP settings, where you have static/dynamic IP address settings, etc.

There you will find a section for setting custom DNS servers.

Change it to 1.1.1.1.

Close it and ping again.

Change it to 8.8.8.8.

Close it and ping again.

Then enter these IP addresses into:

Does this mean that Gemini was talking crap to @Prajna then, about the possible reasons?

-

Edited by exchemist

9 minutes ago, Dhillon1724X said: I find Pi very interesting and mysterious.

It goes like 3.1415926535897932384626433832795028841971693993751058209749445923078164062862089986280348253421170679...................................................................... to infinity.

Pi is an irrational number, meaning it has an infinite, non-repeating decimal expansion.

Fun fact is that it contains our Contact number,Passwords,Date Of Birth etc somewhere in those numbers.

It also comes in many equations of physics.

Everything is mostly spherical in our universe due to gravity.

So pi is everywhere as pi is used in calculations involving spheres.

I also saw a theory in speculations which is now locked,it was built around pi.

I was thinking that maybe Pi can be used for more things in our universe.Maybe its key to something bigger.

What do you think?

It is not only irrational but a transcendental number, like e. And there is a famous and mysterious connection between the two of them known as Euler's identity:

e^(iπ) + 1 = 0

This is connected with the polar form of complex numbers, i.e. r(cos𝜽 + i sin𝜽).
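For anyone who wants to see the connection numerically, here is a small Python sketch using only the standard `math` and `cmath` modules. It checks that e^(i𝜽) agrees with cos𝜽 + i sin𝜽 at 𝜽 = π, so that e^(iπ) + 1 vanishes up to floating-point rounding, and then round-trips one number through polar form. The values r = 2 and 𝜽 = π/3 are arbitrary choices made for the example.

```python
import cmath
import math

# Euler's formula: e^(i*theta) = cos(theta) + i*sin(theta)
theta = math.pi
lhs = cmath.exp(1j * theta)
rhs = complex(math.cos(theta), math.sin(theta))

# Floating-point rounding leaves a tiny residue rather than an exact zero.
print("difference between the two sides:", abs(lhs - rhs))
print("|e^(i*pi) + 1| =", abs(lhs + 1))

# Polar form: r(cos(theta) + i*sin(theta)) is the same number as r*e^(i*theta).
z = cmath.rect(2.0, math.pi / 3)   # build z from r = 2, theta = pi/3
r, angle = cmath.polar(z)          # recover r and theta from z
print("r =", r, "theta =", angle)
```

The residues printed are not exactly zero only because π cannot be represented exactly in floating point; the identity itself is exact.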

-

Edited by exchemist

1 hour ago, studiot said: In our little valley

They closed the colliery down

And the pithead baths is a supermarket now

Empty gurneys red with rust

Roll to rest admist the dust

And the pithead baths is a supermarket now

'Cause it's hard

Duw it's hard

It's harder than they will ever know

And it's they must take the blame

The price of coal's the same

But the pithead baths is a supermarket now

They came down here from England

Because our outputs low

Briefcases full of bank clerks

That had not never been below

And they'll close the valley's oldest mine

Pretending that they're sad

But don't you worry butty bach

We're really very glad

'Cause it's hard

Duw it's hard

Harder than they will ever know

And it's they must take the blame

For the price of coal's the same

But the pithead baths is a supermarket now

But coal mining was in truth an awful job, much though it is glamorised in hindsight now, Hovis ad style. Not to mention the terrible effect of coal burning on the climate. It's a good thing the pits are closed.

Shell Haven refinery, where working conditions were pretty good, lasted quite a while. (In the early 80s it boasted the first women refinery technologists in the company. I was lucky enough to go out with the prettiest of them for a while - memories.) Not many people will be aware that the refinery, though owned by Shell, was actually named after a location on the Thames called Shell Haven, an inlet in which there were a lot of shells. Nothing to do with the company name. https://en.wikipedia.org/wiki/Shell_Haven Anyway now it's a container port - and no longer called Shell Haven. But refineries are obviously going to die out and that too is no bad thing. The world moves on.

1 hour ago, DavidWahl said: That's like watching the child you raised grow up and die. Do think there's any chance that a chunk of those manuals are still lying somewhere? If I were you, I would've retrieved anything that was left of them and kept it to myself as souvenir...

No I have enough souvenirs already, including a framed photo of a N African guy sitting on a bench in some village, strumming a home-made guitar constructed out of a 5 litre can of Shell Rimula (an old brand of diesel engine oil) and a neck of wood. Sometimes I used to feel like that guy.

-

4 minutes ago, DavidWahl said: Wow, you're an all rounder, I see. I can barely manage to keep up with one interest.

That must've been painful to witness...

Yes, I remember flying over the refinery site on a trip back from Amsterdam and looking down to see...grass.... I think probably my most enduring contribution actually was the quality assurance manuals I arranged and largely wrote, based on my experience as a QA auditor, for lubricants production and distribution. That back-breaking work was so unglamorous that nobody was keen to do it again, once they were there! But I console myself they were important, even if not the sort of thing that launches brilliant careers.

-

Why infants and children died at a horrific rate in the Middle Ages?

in Other Sciences

Well perhaps old men were not quite such a rarity as that, but certainly not at all representative.