Posts posted by thoughtfuhk

2 minutes ago, studiot said:

Since you wished to preach about the subject, surely you understand what entropy is and the difference between Shannon Entropy, Classical Thermodynamic Entropy and Statistical Mechanical Entropy?

- Shannon entropy does not prevent the measurement of the difference between conscious and unconscious states. (As the authors indicate, the Stirling approximation was used to circumvent the enormous values arising from the EEG results. It is typical in programming to use approximations or compressions of the input space!)

- Reference-A, quote from the paper describing the compression rationale: "However, the estimation of C (the combinations of connections between diverse signals), is not feasible due to the large number of sensors; for example, for 35 sensors, the total possible number of pairwise connections is [math]\binom{144}{2} = 10296[/math], then if we find in the experiment that, say, 2000 pairs are connected, the computation of [math]\binom{10296}{2000}[/math] has too large numbers for numerical manipulations, as they cannot be represented as conventional floating point values in, for instance, MATLAB. To overcome this difficulty, we used the well-known Stirling approximation for large n: [math]\ln(n!) = n\ln(n) - n[/math]".

- Reference-B, Showing that Shannon Entropy (i.e. a compressed measurement) does not prevent comparison of entropy in systems: https://en.wikipedia.org/wiki/Entropy_in_thermodynamics_and_information_theory
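To illustrate the compression point, here is a minimal Python sketch (my own, not the authors' MATLAB code) using the numbers from the quote. The exact binomial coefficient cannot be converted to a double, while working with logarithms stays in a comfortable range. Using Python's `math.lgamma` as the "exact" reference is my own choice for illustration.

```python
import math

def log_binomial_exact(n: int, k: int) -> float:
    """ln C(n, k) via the log-gamma function, avoiding huge intermediate numbers."""
    return math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)

def log_binomial_stirling(n: int, k: int) -> float:
    """ln C(n, k) using ln(m!) ~ m ln(m) - m for each of the three factorials.

    The '-m' terms cancel (-n + k + (n - k) = 0), which leaves
    n ln(n) - k ln(k) - (n - k) ln(n - k). Requires 0 < k < n.
    """
    return n * math.log(n) - k * math.log(k) - (n - k) * math.log(n - k)

n_pairs = math.comb(144, 2)  # 10296 possible pairwise connections, as in the quote
connected = 2000             # the quote's example number of connected pairs

# The exact count is a Python big integer, but it cannot become a double.
try:
    float(math.comb(n_pairs, connected))
except OverflowError:
    print("C(10296, 2000) is too large for a double")

print("lgamma  :", log_binomial_exact(n_pairs, connected))
print("Stirling:", log_binomial_stirling(n_pairs, connected))
```

The two logarithms differ by only a few units out of a total of a few thousand, which is the kind of error one accepts in exchange for numbers that a floating point type can actually hold.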

My question to you is:

- Why do you personally feel (contrary to evidence) that the Shannon entropy measure supposedly prevents the measurement of conscious vs unconscious states in the brain? And don't you realize that it is typical in programming to encode approximations of dense input spaces?

0 -

5 minutes ago, studiot said:

If you wish to mention my post, then address my words.

Don't quote another responder at me.

I apologize.

Those profile pictures looked quite similar for a moment. How do the different types of entropy supposedly undermine the study's validity?

0 -

7 hours ago, Strange said:

It seems unlikely. Your idea (as expressed in the title of this thread) is the almost exact opposite of the paper.

They say: increasing entropy may cause consciousness

You say: consciousness causes increasing entropy

(I say: correlation is not causation.)

You could also learn from the cautious language in that article. They are scientists doing research based on real data, and yet they use terms like "could be", "suggests", "might be", "a good starting point", "needs to be replicated", "hints at".

Compare that to your(*) aggressively confident, assertive and insulting tone. Perhaps you could learn something from the thoughtful approach in the article you link.

(*) Random Guy On The Internet with little support for your ideas beyond a few cherry-picked articles.

1.a) They didn't say that "entropy may cause consciousness". In fact, the word "cause" can't be found in the paper!

1.b) Reference-A, showing what they say, contrary to your claim, Strange: they say that "the maximisation of the configurations" in the brain is what yields higher values of entropy.

1.c) Reference-B, showing what they say, contrary to your claim, Strange: "In our view, consciousness can be considered as an emergent property of the organization of the (embodied) nervous system, especially a consequence of the most probable distribution that maximizes information content of brain functional networks".

1.d) Reference-C, showing what they say, contrary to your claim, Strange: "Hence, the macrostate with higher entropy (see scheme in Fig. 4) we have defined, composed of many microstates (the possible combinations of connections between diverse networks, our C variable defined in Results), can be thought of as an ensemble characterised by the largest number of configurations."

2) You're correct about the overconfident tone seen in the old purpose thread; in fact, I had already updated my article elsewhere to reflect that Alex Wissner-Gross's work was at the hypothesis level. (I even called it a hypothesis several times in the earlier OP, although you seem to have missed that!)

3) Reference-D, showing that I had updated the tone before your advice:

(It looks like duplicate threads were created, but you chose to respond to the one without the word "hypothesis" added.)

7 hours ago, EdEarl said:

I find the cherry picking less problematic than your point that thoughtfunk seems to misunderstand the research.

I find it odd that you simply accepted Strange's words as valid, without thinking about the problem yourself.

Strange is shown to be wrong above.

6 hours ago, studiot said:

It is a great shame that two different branches of Science should employ the same word for quite different meanings, just because the numerical part has the same mathematical form.

Readers should be aware of which type of entropy is intended.

I don't detect the relevance of your quote above.

Could it have stemmed from your haphazard support of Strange's false claim above?

0 -

- Here's a newish article by another author: Scientists Show Human Consciousness Could Be a Side Effect of 'Entropy'

- Paper in article: https://arxiv.org/abs/1606.00821

- My earlier thread regarding human purpose, entropy and artificial general intelligence may be a good way to help explain the paper above. (See the earlier thread)

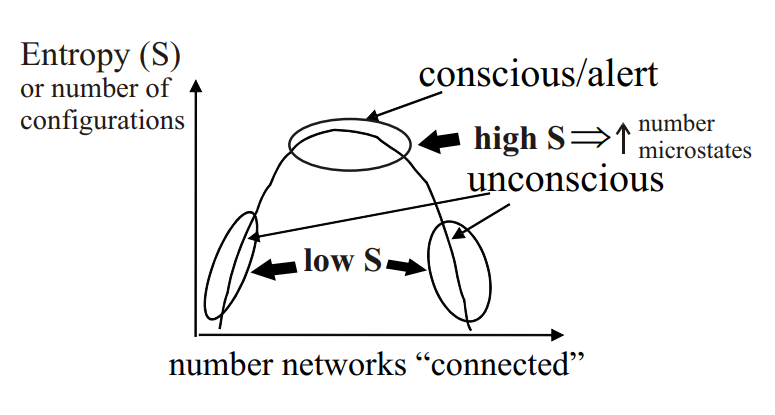

Anyway, the paper from the new article above says that the deeper the sleep, the lower the entropy. Conversely, the more awake the mind is, the higher the information content, the greater the number of neuronal interactions, and the higher the values of entropy. The paper uses the Stirling approximation to compute a measure of entropy over the "macrostate" [math]C[/math] (where [math]N[/math] is the number of possible pairwise connections and [math]p[/math] the number of connected pairs):

[math]S = N \ln\left(\frac{N}{N-p}\right) - p \ln\left(\frac{p}{N-p}\right) \equiv \ln C[/math] (Figure 1: Stirling approximation on human EEG data)

- I think it is reasonable to estimate that "[math]C \in \{X\}[/math]", where "[math]C[/math]" represents an ensemble or macrostate sequence describing some distribution of entropy in human neuronal terms, as underlined by Mateos et al. in the new article/paper above, while "[math]\{X\}[/math]" (with respect to equation 4 of Alex Wissner-Gross's hypothesis/paper from my earlier thread) describes some macrostate partition that reasonably encompasses constrained path capability permitting entropy maximization, as underlined by Dr. Alex Wissner-Gross in his hypothesis.

- Finally, beyond the scope of humans (as indicated by "[math]C[/math]"), one may additionally obtain some measure of "[math]\{X\}[/math]" that may subsume higher degrees of entropy. (i.e. Artificial General Intelligence will likely have more brain power than humans, and hence a higher measure of "[math]\{X\}[/math]" compared to humans.)

- In section 5, or the conclusion, of the newish paper by Mateos et al., they also hope to describe the entropy maximization phenomenon in terms of "non-biological" entities (i.e. apparently Artificial General Intelligence), so perhaps my speculation above, about some additional measure of [math]\{X\}[/math] beyond the human conscious state (where entropy in humans is denoted [math]C[/math]), is reasonable.
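The quoted formula for [math]S[/math] can be evaluated directly. As a hedged sketch (my own illustration, with N = 10296 borrowed from the quote and p scanned over every possible value), the short Python snippet below shows that S peaks when half of the possible connections are present, p = N/2, where it equals N ln 2. This is simply a property of ln C(N, p), i.e. the number of configurations is largest there; I am not claiming the paper reports this specific calculation.

```python
import math

def stirling_entropy(n: int, p: int) -> float:
    """S = N ln(N/(N-p)) - p ln(p/(N-p)), the Stirling form of ln C(N, p).

    n: number of possible pairwise connections; p: number of connected pairs.
    Requires 0 < p < n.
    """
    return n * math.log(n / (n - p)) - p * math.log(p / (n - p))

N = 10296  # possible pairwise connections, as in the quoted example

# Scan every admissible number of connected pairs and find where S is largest.
best_p = max(range(1, N), key=lambda p: stirling_entropy(N, p))
print(best_p, stirling_entropy(N, best_p), N * math.log(2))
```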

0 -

Just now, swansont said:

Moderator Note

What part of "Don't start another thread on this topic" didn't you understand?

I didn't provide the source the last time.

0 -

1.) Reasonably, evolution is optimising ways of contributing to the increase of entropy, as systems very slowly approach equilibrium. (The universe’s predicted end)

a.) Within that process, work or activities done through several ranges of intelligent behaviour are reasonably ways of contributing to the increase of entropy. (See source)

b.) As species got more and more intelligent, reasonably, nature was finding better ways to contribute to increases of entropy. (Intelligent systems can be observed as being biased towards entropy maximization)

c.) Humans are slowly getting smarter, but even if we augment our intellect by CRISPR-like routines or implants, we will reasonably be limited by how many computational units or neurons etc fit in our skulls.

d.) AGI/ASI won’t be subject to the size of the human skull/human cognitive hardware. (The laws of physics/thermodynamics permit intelligence exceeding that of humans in non-biological form)

e.) As AGI/ASI won’t face the limits that humans do, they are a subsequent step (though non-biological), particularly in the regime of contributing to better ways of increasing entropy, compared to humans.

2.) The above is why the purpose of the human species is, reasonably, to create AGI/ASI.

- There are many degrees of freedom or many ways to contribute to entropy increase. This degree sequence is a “configuration space” or “system space”, or total set of possible actions or events, and in particular, there are “paths” along the space that simply describe ways to contribute to entropy maximization.

- These “paths” are activities in nature, over some time scale “[math]\tau[/math]” and beyond.

- As such, as observed in nature, intelligent agents generate particular “paths” (intelligent activities) that prioritize efficiency in entropy maximization, over more general paths that don’t care about or deal with intelligence. In this way, intelligent agents are “biased”, because they occur in a particular region (do particular activities) in the “configuration space” or “system space” or total possible actions in nature.

- Highly intelligent agents aren’t merely biased for the sake of doing distinct things (i.e. cognitive tasks) compared to non-intelligent or other less intelligent agents in nature for contributing to entropy increase; they are biased by extension, for behaving in ways that are actually more effective ways of maximising entropy production, compared to non-intelligent or less intelligent agents in nature.

- As such, the total system space can be described in terms of a general function, relating how activities may generally increase entropy, afforded by degrees of freedom in said space:

[math]S_c(X,\tau) = -k_B \int_{x(t)} \Pr(x(t) \mid x(0)) \ln \Pr(x(t) \mid x(0)) \, \mathcal{D}x(t)[/math] (Equation 2)
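Equation (2) integrates over continuous paths. As a loose, discrete illustration only (a toy of my own, not taken from Wissner-Gross's paper), the Python sketch below enumerates every path of a walker in a small box and computes -k_B Σ Pr ln Pr over those paths. The box size, the rule "choose uniformly among moves that stay inside the box", the path length τ and k_B = 1 are all my assumptions.

```python
import math

def path_distribution(x0: int, length: int, tau: int):
    """Enumerate every tau-step path of a walker on sites 0..length-1.

    Hypothetical toy dynamics: at each step the walker chooses uniformly
    among the moves (-1 or +1) that keep it inside the box.
    Returns a list of (path, probability) pairs; probabilities sum to 1.
    """
    if length < 2:
        raise ValueError("the box needs at least two sites")
    paths = [((x0,), 1.0)]
    for _ in range(tau):
        extended = []
        for path, prob in paths:
            x = path[-1]
            moves = [x + d for d in (-1, 1) if 0 <= x + d < length]
            for nxt in moves:
                extended.append((path + (nxt,), prob / len(moves)))
        paths = extended
    return paths

def causal_path_entropy(x0: int, length: int, tau: int, k_b: float = 1.0) -> float:
    """Discrete analogue of Equation (2): -k_B * sum over paths of Pr * ln(Pr)."""
    return -k_b * sum(p * math.log(p) for _, p in path_distribution(x0, length, tau))

# Path entropy for every starting site in a 9-site box over 6 steps.
for start in range(9):
    print(start, round(causal_path_entropy(start, length=9, tau=6), 3))
```

In this toy, starting next to a wall gives lower path entropy than starting in the middle, because the walls remove some of the possible futures.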

- 6.a) In general, agents are demonstrated to approach more and more complicated macroscopic states (from smaller/earlier, less efficient entropy maximization states called “microstates”), while activities occur that are “paths” in the total system space.

- 6.b) Highly intelligent agents behave in ways that engender unique paths (by doing cognitive tasks/activities, compared to simple tasks done by lesser intelligences or non-intelligent things), and by doing so they approach or consume or “reach” more of the aforementioned macroscopic states, in comparison to lesser intelligences and non-intelligence.

- 6.c) In other words, highly intelligent agents access more of the total actions or configuration space or degrees of freedom in nature, the same degrees of freedom associated with entropy maximization.

- 6.d) In this way, there is a “causal force”, which constrains the degrees of freedom seen in the total configuration space or total ways to increase entropy, in the form of humans, and this constrained sequence of intelligent or cognitive activities is the way in which said highly intelligent things are said to be biased to maximize entropy:

[math]F(X_0,\tau) = T_c \nabla_X S_c(X,\tau) \big|_{X_0}[/math] (Equation 4)

7) In the extension of equation (2), seen in equation (4) above, "[math]T_c[/math]" is a way to observe the various unique states that a highly intelligent agent may occupy over some time scale "[math]\tau[/math]". (The technical way to say this is that "[math]T_c[/math] parametrizes the agents' bias towards entropy maximization".)

8) Beyond human intelligence, AGI/ASI are yet more ways that shall reasonably permit more and more access to activities or "paths" to maximise entropy increase.

A) Looking at item (8), one may see that the human objective/goal is reasonably to trigger a next step in the landscape of things that can access more ways to maximize entropy. (Science likes objectivity)

B) The trend says nature doesn't just stop at one species, it finds more and more ways to access more entropy maximization techniques. Humans are one way to get to whichever subsequent step will yield more ways (aka more intelligence...i.e. AGI/ASI) that shall generate additional "macrostates" or paths towards better entropy maximization methods.
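Equation (4) takes the gradient of the path entropy with respect to the starting state. As a hedged, discrete toy of my own (not Wissner-Gross's simulation), the Python sketch below uses a walker on sites 0..8 that picks uniformly among moves staying inside the box, sets T_c = 1 and k_B = 1, computes the path entropy with the chain rule for a Markov chain, and approximates the gradient with a central difference. All of these choices are assumptions for illustration.

```python
import math

def path_entropy(x0: int, length: int, tau: int) -> float:
    """Path entropy (k_B = 1) of a walker on sites 0..length-1 over tau steps.

    Hypothetical toy dynamics: uniform choice among the moves that stay in
    the box (assumes length >= 2). Uses the chain rule for a Markov chain
    started at x0: S = sum over steps t and sites x of
    P_t(x) * ln(number of allowed moves at x).
    """
    occupancy = {x0: 1.0}
    entropy = 0.0
    for _ in range(tau):
        nxt = {}
        for x, p in occupancy.items():
            options = [x + d for d in (-1, 1) if 0 <= x + d < length]
            entropy += p * math.log(len(options))
            for y in options:
                nxt[y] = nxt.get(y, 0.0) + p / len(options)
        occupancy = nxt
    return entropy

def causal_entropic_force(x0: int, length: int, tau: int, t_c: float = 1.0) -> float:
    """Discrete analogue of Equation (4): T_c times dS/dX evaluated at X_0.

    Central difference, so x0 must be an interior site (1..length-2).
    """
    return t_c * (path_entropy(x0 + 1, length, tau) - path_entropy(x0 - 1, length, tau)) / 2

for site in range(1, 8):
    print(site, round(causal_entropic_force(site, length=9, tau=6), 3))
```

In this toy the "force" is positive on the left half, negative on the right half and zero at the centre, i.e. it points away from the nearer wall, towards states from which more future paths remain open. It only illustrates the shape of the formula.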

0 -

The “Supersymmetric Artificial Neural Network” (or “Edward Witten/String theory powered artificial neural network”) is a Lie superalgebra-aligned algorithmic learning model (which I created 2 years ago), based on evidence pertaining to supersymmetry in the biological brain.

A Deep Learning overview, by gauge group notation:

- There has been a clear progression of “solution geometries”, ranging from those of the ancient Perceptron to complex-valued neural nets, Grassmann manifold artificial neural networks or unitary RNNs. These models may be denoted by [math]\phi(x,\theta)^{\top}w[/math], parameterized by [math]\theta[/math], and are expressible in terms of geometrical groups ranging from orthogonal to special unitary: [math]SO(n)[/math] to [math]SU(n)[/math]. They got better at representing input data, i.e. at representing richer weights, and thus the learning models generated better hypotheses or guesses.

- By “solution geometry” I mean simply the class of regions where an algorithm's weights may lie, when generating those weights to do some task.

- As such, if one follows cognitive science, one would know that biological brains may be measured in terms of supersymmetric operations. (Perez et al, “Supersymmetry at brain scale”)

- These supersymmetric biological brain representations can be represented by supercharge-compatible special unitary notation [math]SU(m|n)[/math], or [math]\phi(x,\theta,\bar{\theta})^{\top}w[/math] parameterized by [math]\theta, \bar{\theta}[/math], which are supersymmetric directions, unlike the [math]\theta[/math] seen in the first item. Notably, supersymmetric values can encode or represent more information than the prior classes seen in the first item, in terms of “partner potential” signals, for example.

- So, state-of-the-art machine learning models forming [math]U(n)[/math]- or [math]SU(n)[/math]-based solution geometries, although non-supersymmetric, are already in the family of supersymmetric solution geometries that may be observed as occurring in the biological brain, i.e. the [math]SU(m|n)[/math] supergroup representation.
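As a concrete, deliberately small instance of the [math]SU(n)[/math] solution geometry mentioned above, here is a pure-Python sketch for n = 2 (ordinary SU(2), not the supergroup [math]SU(m|n)[/math]). Three real angles play the role of [math]\theta[/math], the matrix U(θ) acts as the feature map φ(x, θ), and a real weight vector w gives the read-out Re(φ)ᵀw. The angle parameterization, input and weights are hypothetical choices of mine, not taken from any of the unitary-RNN papers. The checks show the two defining properties: det U = 1 and the map preserves vector length.

```python
import cmath
import math

def su2_matrix(alpha: float, beta: float, gamma: float):
    """Return a 2x2 special unitary matrix built from three real angles.

    Standard form [[a, -conj(b)], [b, conj(a)]] with |a|^2 + |b|^2 = 1,
    where a = cos(alpha) e^{i beta} and b = sin(alpha) e^{i gamma}.
    """
    a = math.cos(alpha) * cmath.exp(1j * beta)
    b = math.sin(alpha) * cmath.exp(1j * gamma)
    return [[a, -b.conjugate()], [b, a.conjugate()]]

def matvec(m, v):
    """2x2 matrix times a length-2 vector."""
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]

def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

def norm(v):
    """Euclidean norm of a complex vector."""
    return math.sqrt(sum(abs(z) ** 2 for z in v))

theta = (0.3, 1.1, -0.4)        # hypothetical parameters playing the role of theta
U = su2_matrix(*theta)
x = [1.0 + 0.5j, -2.0 + 0.0j]   # hypothetical complex input
phi = matvec(U, x)              # phi(x, theta): a norm-preserving feature map
w = [0.7, -0.2]                 # hypothetical real read-out weights
output = sum(wi * zi.real for wi, zi in zip(w, phi))  # Re(phi)^T w

print(abs(det2(U)), norm(x), norm(phi), output)
```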

Pseudocode for the "Supersymmetric Artificial Neural Network":

a. Initialize input Supercharge compatible special unitary matrix [math]SU(m|n)[/math]. [See source] (This is the atlas seen in b.)

b. Compute [math]\nabla C[/math] w.r.t. [math]SU(m|n)[/math], where [math]C[/math] is some cost manifold.

- Weight space is reasonably some Kähler potential-like form: [math]K(\phi,\phi^*)[/math], obtained on some initial projective space [math]CP^{n-1}[/math]. (source)

- It is feasible that [math]CP^{n-1}[/math] (a [math]C^{\infty}[/math] bound atlas) may be obtained from charts of Grassmann manifold networks, where there exists some invertible submatrix entailing matrix [math]A \in \phi_i (U_i \cap U_j)[/math] for [math]U_i = \pi(V_i)[/math], where [math]\pi[/math] is a submersion mapping enabling some differentiable Grassmann manifold [math]GF_{k,n}[/math], and [math]V_i = \{u \in \mathbb{R}^{n \times k} : \det(u_i) \neq 0\}[/math]. (source)

c. Parameterize [math]SU(m|n)[/math] in [math]-\nabla C[/math] terms, by Darboux transformation.

d. Repeat until convergence.
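Steps a to d have the overall shape of a gradient-descent loop on a matrix group. As a loose sketch of that loop shape only, here it is on the ordinary group SU(2) with three angle parameters and a finite-difference gradient. The supergroup [math]SU(m|n)[/math], the Kähler potential, the Grassmann-manifold charts and the Darboux-transformation step are NOT implemented: step c is replaced by a plain gradient step on the angles, and the cost, input and target below are hypothetical choices of mine.

```python
import cmath
import math

def su2(params):
    """Ordinary 2x2 SU(2) matrix from three real angles (NOT the supergroup SU(m|n))."""
    alpha, beta, gamma = params
    a = math.cos(alpha) * cmath.exp(1j * beta)
    b = math.sin(alpha) * cmath.exp(1j * gamma)
    return [[a, -b.conjugate()], [b, a.conjugate()]]

def cost(params, x, target):
    """Hypothetical cost: squared distance between U(params) x and a target vector."""
    u = su2(params)
    y = [u[0][0] * x[0] + u[0][1] * x[1], u[1][0] * x[0] + u[1][1] * x[1]]
    return sum(abs(yi - ti) ** 2 for yi, ti in zip(y, target))

def numeric_grad(f, params, eps=1e-6):
    """Central-difference gradient of f with respect to each parameter."""
    grad = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad

def train(x, target, lr=0.1, tol=1e-10, max_steps=5000):
    def f(p):
        return cost(p, x, target)

    params = [0.1, 0.1, 0.1]                  # step a: initialize on the group
    for _ in range(max_steps):                # step d: repeat until convergence
        grad = numeric_grad(f, params)        # step b: gradient of the cost
        params = [p - lr * g for p, g in zip(params, grad)]  # step c: plain -grad step
        if f(params) < tol:
            break
    return params, f(params)

x = [1.0 + 0j, 0.0 + 0j]               # hypothetical input
u_true = su2([0.8, -0.5, 1.2])         # hypothetical "true" SU(2) matrix
target = [u_true[0][0], u_true[1][0]]  # its action on x
params, final_cost = train(x, target)
print(params, final_cost)
```

Because every iterate is produced by `su2`, the weights never leave the group; that is the practical appeal of parameterizing the solution geometry directly.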

References:

- Although it concerns manifolds rather than supermanifolds/supersymmetry, here’s a talk by separate authors at Harvard University regarding curvature in Deep Learning (2017).

- A relevant debate between Yann LeCun and Gary Marcus, along with my commentary, on the importance of priors in Machine learning.

- DeepMind’s discussion regarding Neuroscience-Inspired Artificial Intelligence.

0 -

40 minutes ago, Strange said:

Thank you.

That wasn't so hard, was it.

I still don't see how you make the logical leap from "a thing that intelligent agents do" to "the purpose of intelligence". But I really can't be bothered to waste another week trying to wring some more detail out of you.

A) Look at item (8.b), and you'll see that the human objective/goal is reasonably to trigger a next step in the landscape of things that can access more ways to maximize entropy. (Science likes objectivity)

B) Remember, the trend says nature doesn't just stop at one species, it finds more and more ways to access more entropy maximization techniques. Humans are one way to get to whichever subsequent step will yield more ways (aka more intelligence...i.e. AGI/ASI) that shall generate additional macrostates or paths towards better entropy maximization methods.

0 -

4 hours ago, Strange said:

Sigh. Then AGAIN, please quote the relevant text and/or mathematics from this paper that show that increasing intelligence resulted in improvements in increasing entropy.

So far the only example of a "cognitive task" that you have provided is "reading". Does the paper you linked mention reading? No.

How does reading improve entropy?

How does "optimising" reading further improve entropy?

What does it mean to "optimise reading"?

Can you either answer these questions or provide some relevant examples of cognitive tasks, and explain how they act to increase entropy.

And, in case it isn't clear: I am asking these questions because I don't understand what you are saying. I don't understand what you are saying because you refuse to explain.

So another question: why do you refuse to clarify your idea?

1) There are many degrees of freedom or many ways to contribute to entropy increase. This degree sequence is a "configuration space" or "system space", or total set of possible actions or events, and in particular, there are "paths" along the space that simply describe ways to contribute to entropy maximization.

3) These "paths" are activities in nature, over some time scale "[math]\tau[/math]" and beyond.

4) As such, as observed in nature, intelligent agents generate particular "paths" (intelligent activities) that prioritize efficiency in entropy maximization, over more general paths that don't care about or deal with intelligence. In this way, intelligent agents are "biased", because they occur in a particular region (do particular activities) in the "configuration space" or "system space" or total possible actions in nature.

5) Highly intelligent agents aren't merely biased for the sake of doing distinct things (i.e. cognitive tasks) compared to non intelligent, or other less intelligent agents in nature for contributing to entropy increase; they are biased by extension, for behaving in ways that are actually more effective ways for maximising entropy production, compared to non intelligent or less intelligent agents in nature.

6) As such, the total system space can be described in terms of a general function, relating how activities may generally increase entropy, afforded by degrees of freedom in said space:

[math]S_c(X,\tau) = -k_B \int_{x(t)} \Pr(x(t) \mid x(0)) \ln \Pr(x(t) \mid x(0)) \, \mathcal{D}x(t)[/math] (Equation 2)

7.a) In general, agents are demonstrated to approach more and more complicated macroscopic states (from smaller/earlier, less efficient entropy maximization states called "microstates"), while activities occur that are "paths" in the total system space as mentioned before.

7.b) Highly intelligent agents behave in ways that engender unique paths (by doing cognitive tasks/activities, compared to simple tasks done by lesser intelligences or non-intelligent things), and by doing so they approach or consume or "reach" more of the aforementioned macroscopic states, in comparison to lesser intelligences and non-intelligence.

7.c) In other words, highly intelligent agents access more of the total actions or configuration space or degrees of freedom in nature, the same degrees of freedom associated with entropy maximization.

7.d) In this way, there is a "causal force", which constrains the degrees of freedom seen in the total configuration space or total ways to increase entropy, in the form of humans, and this constrained sequence of intelligent or cognitive activities is the way in which said highly intelligent things are said to be biased to maximise entropy:

[math]F(X_0,\tau) = T_c \nabla_X S_c(X,\tau) \big|_{X_0}[/math] (Equation 4)

7.e) In the extension of equation (2), seen in equation (4) above, "[math]T_c[/math]" is a way to observe the various unique states that a highly intelligent agent may occupy over some time scale "[math]\tau[/math]". (The technical way to say this is that "[math]T_c[/math] parametrizes the agents' bias towards entropy maximization".)

8.a) Finally, reading is yet another cognitive task, or yet another way for nature/humans to help to access more of the total activities associated with entropy maximization, as described throughout item 7 above.

8.b) Beyond human intelligence, AGI/ASI are yet more ways that shall reasonably permit more and more access to activities or "paths" to maximise entropy increase.

0 -

8 hours ago, Strange said:

If they "demonstrably" got better, you should be able to provide evidence of, or a reference to, such a demonstration. Otherwise it is just another unsupported assertion.

Again, some evidence supporting this would be nice.

Can you provide some examples? Because the only example so far is "reading" and I don't see how that is a better way to maximise entropy.

1) Please refer to the URL you conveniently omitted from your quote of me above.

2) Your claim that what I said is unsupported, when I had provided a URL (i.e. supporting evidence) that you omitted from your response, is clearly dishonest.

0 -

1 hour ago, Area54 said:

Good. Thank you. We seem to be on the same wavelength on that one. Do you have an approximate notion as to how far "down" the web of life such intelligent behaviour expresses itself? Restricted to primates? Present in amoeba? Somewhere in between?

Also, on to the second question:

Please justify the claim that as such tasks became optimized that intelligence became more generalised.

In the above question "justify" could be replaced by "provide reasoned support for".

An isolated repetition of mine to answer that question:

- As things got smarter from generation to generation, things got demonstrably better and better at maximizing entropy. (As mentioned before)

- As entropy maximization got better and better, intelligence got more general. (As mentioned before)

- More and more general intelligence provided better and better ways to maximize entropy, and it is a law of nature that entropy is increasing, and science shows that this is reasonably tending towards equilibrium, where no more work (or activities) will be possible. (As mentioned before)

- The reference prior given: http://www.alexwg.org/publications/PhysRevLett_110-168702.pdf

0 -

7 minutes ago, Area54 said:

I've read those responses. None of them appear to answer any of my questions. Let's take it one at a time:

Please define, explain, or at least list "cognitive tasks".

Cognitive tasks refer to activities, done through intelligent behaviour. (As long mentioned)

For example, reading is a cognitive task.

0 -

5 minutes ago, Area54 said:

I see this has just received a downvote. My hypothesis is that the person with the most likely motivation to downvote that post was you. If I am mistaken then you will be happy to answer those questions. If you choose not to I shall take that as confirmation that you are either trolling or way too smart for me to comprehend. I'll then leave you to your own devices.

I didn't down vote you.

Anyway, please see the responses made to Strange here.

0 -

1 hour ago, dimreepr said:

Mostly, like this sentence, it just doesn't make sense and I very much doubt Mr. Dawkins has anything to offer in the context of this thread, Try answering the question... But we all know, by now, you're just not capable...

Your comment is 26 minutes old, i.e. 7 minutes newer than my latest edited response. (In other words, I removed the typo 7 minutes before your comment; the forum probably notified you of that, but you still posted anyway.)

- So, in case you missed the edit, here is the query I asked: Can you explain why you are not satisfied with my prior summary?

58 minutes ago, Strange said:

If people didn't understand your summary there is no point just repeating it.

Nobody has reported lack of understanding of the summary.

If they did, I would be motivated to update it, but nobody has yet indicated that they don't understand the summary.

58 minutes ago, Strange said:

- provide some specific examples of how intelligence increases entropy: Answer: When life forms are exposed to the pressures of nature, species are biased to maximize entropy, and when they do so, they are doing cognitive tasks or activities in response to nature's pressures.

- provide some specific examples of how evolution optimises this, Answer: As things get smarter from generation to generation, things get better and better at maximizing entropy. (Recall the bias to maximize entropy when faced with nature's pressures)

- explain precisely what you mean by "crispr like routines", Answer: I provided a URL showing that CRISPR methods are those that can enable us to modify our genome. However, even if we managed to increase our intelligence by that process, or otherwise by something like Elon Musk's neural implants, our cognition or cognitive capability (which is a tool for responding to natural pressures and maximizing entropy) is ultimately limited by our skull's size. AGI/ASI won't be subject to that limitation in braincase size.

- explain the logical leap from "this is one thing that humans do" to "this is the purpose of humans" Answer: Humans are only one way that nature maximizes entropy, based on natural pressures. The trend stipulates that nature doesn't just stop at a particular species, but continues to generate or enable the creation of more and more entities that learn to better maximize entropy, from generation to generation. Humans are not the only component in the range of potential general intelligences. (i.e. AGI/ASI are a subsequent step that can better approximate general intelligence aligned cognitive tasks)

And I already explained those things. (See the items in blue)

0 -

22 minutes ago, dimreepr said:

Nope, just explain the following highlights as it pertains to your OP:

- I've already done so. Can you explain why you are not satisfied with my prior summary?

- The following ought to help too, although Alexander advocates very very long term human progression, whereas I describe, like Richard Dawkins does, that the human species may not be required after AGI is created:

0 -

59 minutes ago, dimreepr said:

Strange has been more than patient with you and has done nothing but sensibly critique your repeated nonsense.

This is the abstract from your link:

Start by explaining how it relates to the OP, without the buzzwords.

- Of course, what I've said to strange above, applies to you as well.

- For example, what is it you detect to be invalid, about my prior post (that already summarized the work quite well in relation to my OP that described AGI as a purpose of the human species)?

- Will I have to explain all details down to what year the authors of the references were born, and what year the authors first attended college?

0 -

15 minutes ago, Strange said:

It is not at all helpful to just repeat the same vague comments. Details, please DETAILS.

The problem is, when asked for details you either avoid the question, link to an article with no apparent relevance, or link to an extremely technical article that you are unable to talk about. Which leads me to believe that you don't understand it - but it has some of your buzzwords in it. Feel free to prove me wrong and tell me what the paper actually says. What parts of their mathematical model are relevant to your claims? Which examples from the paper are most relevant to your argument?

- It seems you'll never be satisfied no matter how clear the responses returned to you are.

- You were already on an old agenda to support your false preconceived notions on the matter, and so you continue to grovel in some delusion that I don't understand what I am presenting. (Simply because the topic appears to be outside of your typical scope of understanding)

- I've done my best to summarize the work, and I am yet to receive any sensible criticism from you.

17 minutes ago, Strange said:

It is not at all helpful to just repeat the same vague comments. Details, please DETAILS.

What more details do you desire? You must grasp by now the connection between intelligence, evolution, and optimization.

- I don't detect where I've failed to show these connections. If the topic did not exist within the scope of your knowledge before I posted the OP, now you should at least be less ignorant on the matter. Nobody is infallible/omniscient.

-1 -

19 hours ago, geordief said:

But a hypersurface is as I described?

If they are, are they solely mathematical curiosities or are there practical applications? (hypersurfaces, that is, not surfaces)

Hypersurfaces are extremely common in Machine learning/Deep learning:

- We see hypersurfaces in how artificial neurons acquire activity from neighbouring neurons, by integration, summation or averaging.

- Example 1: Weak analogy to the biological brain in the artificial neuron activation sum (or hypersurface): [math]\text{Neighbouring}_{\text{activity}} = W \cdot x + b[/math] (Common in old Hebbian learning models)

- Example 2: A less weak analogy to the biological brain in the artificial neuron activation sum (or hypersurface): [math]\text{Neighbouring}_{\text{activity}} = W * x + b[/math] (Representing a convolution denoted by [math]*[/math], common in modern Convolutional neural networks)
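To make the hypersurface explicit: for a single neuron, the set of inputs where W · x + b = 0 is a hyperplane (a flat hypersurface) in input space, and the sign of W · x + b tells you which side of it an input lies on. The Python sketch below is my own illustration with made-up weights. For the convolution it uses the textbook definition with a flipped kernel; many deep learning libraries actually compute cross-correlation (no flip) and still call it convolution.

```python
def dense_preactivation(w, x, b):
    """W . x + b for a single artificial neuron."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def side_of_hyperplane(w, x, b):
    """+1, -1 or 0 depending on where x lies relative to {x : W . x + b = 0}."""
    s = dense_preactivation(w, x, b)
    return (s > 0) - (s < 0)

def conv1d_valid(kernel, x, b):
    """Discrete 1D convolution W * x (kernel flipped), 'valid' positions only, plus bias."""
    k = kernel[::-1]
    n = len(k)
    return [sum(k[j] * x[i + j] for j in range(n)) + b for i in range(len(x) - n + 1)]

w, b = [1.0, 2.0, -1.0], -2.0                      # hypothetical weights and bias
print(side_of_hyperplane(w, [2.0, 0.0, 0.0], b))   # 0: 1*2 - 2 = 0, on the hyperplane
print(side_of_hyperplane(w, [3.0, 1.0, 0.0], b))   # 1: 3 + 2 - 2 = 3 > 0
print(conv1d_valid([1.0, 0.0, -1.0], [0.0, 1.0, 2.0, 3.0, 5.0], 0.5))  # [2.5, 2.5, 3.5]
```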

0 -

10 minutes ago, Strange said:

Any chance you could summarise the main points in your own words?

To the best of my recollection and abilities, that is what I had been doing all along, including what I posted 4 posts ago:

1.a) Your words: "What is evolution optimizing?"

1.b) My response:

1.c) Evolution is optimising ways of contributing to the increase of entropy, as systems very slowly approach equilibrium. (The universe's predicted end)

1.d) Within that process, work or activities done through several ranges of intelligent behaviour are reasonably ways of contributing to the increase of entropy.

1.e) As species got more and more intelligent, nature was finding better ways to contribute to increases of entropy. (Intelligent systems can be observed as being biased towards entropy maximization)

1.f) Humans are slowly getting smarter, but even if we augment our intellect with CRISPR-like techniques or implants, we will reasonably be limited by how many computational units (neurons, etc.) fit in our skulls.

1.g) AGI/ASI won't be subject to the size of the human skull or to human cognitive hardware. (The laws of physics/thermodynamics permit human-exceeding intelligence in non-biological form.)

1.h) As AGI/ASI won't face the limits that humans do, they are a subsequent step (though non-biological), particularly in finding better ways to contribute to increasing entropy than humans can.

2) The above is why the purpose of the human species is, reasonably, to create AGI/ASI.


11 hours ago, thoughtfuhk said:

1) I changed the "of course" to "reasonably", 19 minutes ago. (Which was 12 minutes before your post)

2) See this reference: The connection between intelligence and entropic maximization.

3) Yes, I am familiar with hundreds of Asimov's works (including "The Last Answer" and "The Last Question"...), but I don't detect any method to reverse entropy.

4) General AI will be able to create AI better than humans can, and based on evolution's pattern, it will reasonably create super-intelligence, and so on.

1) Typo: The link above just redirects to this page.

2) Here is the correct link showing the non-trivial connection between intelligence and evolution:

http://www.alexwg.org/publications/PhysRevLett_110-168702.pdf
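The linked paper (Wissner-Gross and Freer, "Causal Entropic Forces") defines a causal entropic force F = T_c ∇_X S_c(X, τ), which pushes a system toward states that keep more possible futures open over a time horizon τ. Below is a toy, discretized sketch of that idea under assumptions that are mine, not the paper's: a walker on the lattice positions 0 to n-1, the causal path entropy S_c approximated as the log of the number of length-τ walks that stay inside the box (a uniform distribution over feasible futures), and the gradient replaced by a central finite difference. All function and parameter names are hypothetical.

```python
import math
from functools import lru_cache


def count_paths(x, tau, n):
    """Number of length-tau lattice walks (steps of +1 or -1) that start
    at x and never leave the box {0, ..., n-1}."""
    @lru_cache(maxsize=None)
    def count(pos, steps):
        if pos < 0 or pos >= n:
            return 0          # this future leaves the box: infeasible
        if steps == 0:
            return 1
        return count(pos - 1, steps - 1) + count(pos + 1, steps - 1)
    return count(x, tau)


def path_entropy(x, tau, n):
    """Toy S_c (in nats): entropy of a uniform distribution over the
    feasible paths, i.e. log(number of feasible paths)."""
    c = count_paths(x, tau, n)
    return math.log(c) if c > 0 else float("-inf")


def entropic_force(x, tau, n, temperature=1.0):
    """Central finite-difference estimate of T_c * dS_c/dx.
    Only meaningful for interior points 1 <= x <= n - 2."""
    if not 1 <= x <= n - 2:
        raise ValueError("x must be an interior lattice point")
    dS = path_entropy(x + 1, tau, n) - path_entropy(x - 1, tau, n)
    return temperature * dS / 2.0


if __name__ == "__main__":
    n, tau = 11, 6
    for x in range(1, n - 1):
        print(x, round(entropic_force(x, tau, n), 3))
```

Near the left wall the estimate is positive (pointing toward the centre), it is negative at the mirror-image point near the right wall, and it is zero at the exact centre of a symmetric box: in this toy, the walker is pushed away from walls that cut off its future options.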


1 hour ago, Strange said:

So is the purpose of evolution (and hence life) to accelerate the end of the universe?

And how, exactly, does evolution optimise the increase of entropy?

You have moved from generic claims of "cognitive tasks" to equally generic claims of "increase of entropy". I think you need to be a bit more specific before anyone can understand what you are saying.

Hold the "of course".

Can you explain, or give examples of, how intelligence contributes to the increase of entropy?

Can you give some examples or references for this?

This conclusion seems to be based on a number of loosely connected and contentious ideas:

- That evolution increases the rate of increase of entropy.

- That this is the "purpose" of evolution, rather than just a side effect

- That if this is the purpose of evolution it is also the purpose of humans. Many would argue that we can invent our own purpose (for example, being good, worshiping one or more gods, helping others, creating art, etc)

- That general AI is both possible and achievable (for example, "laws of physics/thermodynamics permits human exceeding intelligence in non biological form" is currently an unjustified assumption)

- That we should create AI to do this better (rather than for more practical reasons like improving health care, industrial safety, caring for the elderly, etc)

A generic AI that is smarter than humans might come up with its own purpose. It might decide it should find a way to reverse entropy: http://multivax.com/last_question.html

1) I changed the "of course" to "reasonably", 19 minutes ago. (Which was 12 minutes before your post)

2) See this reference: The connection between intelligence and entropic maximization.

3) Yes, I am familiar with hundreds of Asimov's works (including "The Last Answer" and "The Last Question"...), but I don't detect any method to reverse entropy.

4) General AI will be able to create AI better than humans can, and based on evolution's pattern, it will reasonably create super-intelligence, and so on.


4 hours ago, Strange said:

You haven't answered the questions. Again.

Just a reminder, the questions were: What is evolution optimising? Why?

You have not said what is being optimised. (Apart from the so-vague-it-is-meaningless "cognitive tasks".)

And you haven't said why.

I would add to these questions: what are the constraints; in other words what has to be traded off against "cognitive tasks"?

(I am not holding my breath for any sort of intelligent answer, though.)

Here is how science works. You gather some evidence, come up with a hypothesis and then make a prediction based on that hypothesis. The prediction is tested against observations.

For example: my hypothesis, based on the evidence of this thread, is that you don't understand your own idea enough to explain it or even talk about it in any detail. The prediction from this hypothesis is that you will respond to my questions above simply by repeating exactly the same thing you have said before (or just referencing previous answers) and, possibly, adding a link to a source with no obvious relevance (and no explanation of its relevance).

Over to you ...

1.a) Your words: "What is evolution optimizing?"

1.b) My response:

1.c) Evolution is optimising ways of contributing to the increase of entropy, as systems very slowly approach equilibrium. (The universe's predicted end)

1.d) Within that process, work or activities done through several ranges of intelligent behaviour are reasonably ways of contributing to the increase of entropy.

1.e) As species got more and more intelligent, nature was finding better ways to contribute to increases of entropy. (Intelligent systems can be observed as being biased towards entropy maximization)

1.f) Humans are slowly getting smarter, but even if we augment our intellect with CRISPR-like techniques or implants, we will reasonably be limited by how many computational units (neurons, etc.) fit in our skulls.

1.g) AGI/ASI won't be subject to the size of the human skull or to human cognitive hardware. (The laws of physics/thermodynamics permit human-exceeding intelligence in non-biological form.)

1.h) As AGI/ASI won't face the limits that humans do, they are a subsequent step (though non-biological), particularly in finding better ways to contribute to increasing entropy than humans can.

2) The above is why the purpose of the human species is, reasonably, to create AGI/ASI.


13 minutes ago, Silvestru said:

thoughtfuhk - What is evolution optimising? Why?

Apologies for butting in. I know the question above has been asked already, but it is very simple and the root of this whole mess.

OP, can you please answer this? No references needed; I just want to understand, in your own words, what your point is.

Here is a portion of a response of mine from the prior pages:

1) Evolution selects increasingly suitable candidates all the time. (Optimization also pertains to candidate selection.)

2.a) In a range of intelligent behaviours, humans are candidates for optimizing cognitive tasks.

2.b) AGI/ASI can be viewed as yet another thing in nature (although non-biological): a candidate that could theoretically generate better intelligence than humans, thus possessing the ability to better optimize cognitive tasks.

3) Based on (1), (2.a) and (2.b), AGI/ASI is a reasonably non-trivial goal to pursue, much like how nature generated smarter things than Neanderthals or chimpanzees.
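To make point 1 concrete in computational terms, here is a minimal sketch of a (1+1) evolutionary algorithm, a standard textbook optimizer used here only as an analogy for "optimization as candidate selection", not as a model of biological evolution. The OneMax fitness function (the count of 1 bits) is an assumed stand-in for "suitability", and the function names are hypothetical. Each generation mutates the current candidate, and selection keeps the child only if it is at least as fit, so the best fitness found never decreases.

```python
import random


def one_max(bits):
    """Toy fitness: number of 1s in the bit string (higher is better)."""
    return sum(bits)


def evolve(n_bits=20, generations=500, seed=0):
    """(1+1) evolutionary algorithm on OneMax.
    Returns the parent's fitness after each generation (index 0 = start)."""
    rng = random.Random(seed)
    parent = [rng.randint(0, 1) for _ in range(n_bits)]
    history = [one_max(parent)]
    for _ in range(generations):
        # Mutation: flip each bit independently with probability 1/n_bits.
        child = [1 - b if rng.random() < 1.0 / n_bits else b for b in parent]
        # Selection: the fitter (or equally fit) candidate survives.
        if one_max(child) >= one_max(parent):
            parent = child
        history.append(one_max(parent))
    return history


if __name__ == "__main__":
    hist = evolve()
    print(hist[0], "->", hist[-1])
```

Keeping equally fit children (the `>=` test) lets the search drift across plateaus instead of stalling, which is the usual design choice for this algorithm.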

Here is an ending note, that is newly written:

As you can see from the sequence above:

a) Cognitive tasks are being optimized in mammalian entities, ranging from small mammals to Neanderthals, chimpanzees and Homo sapiens.

b) As cognitive tasks were optimized, intelligence became more and more general, and able to do an increasingly varied range of tasks.

c) Although AGI/ASI may be non-biological, it shall reasonably occur within the range of intelligent behaviours observable in nature, such that AGI/ASI exceeds humans in cognitive tasks.

d) There is no law of physics that stipulates that general intelligence stops at mankind; the trend shows that it is inevitable that some advanced form of AGI/ASI shall occur.

e) Given the trend, it is reasonable that the purpose of the human species is to create AGI/ASI or some other form of human-exceeding intelligence, by non-biological means or by CRISPR/human augmentation, respectively.

Contrary to the latter portion of your request, I shall additionally provide a new reference below:

https://en.wikipedia.org/wiki/Cognition

Consciousness causes higher entropy compared to unconscious states in the human brain

in Computer Science

Posted · Edited by thoughtfuhk