
Posts posted by Ghideon


4 hours ago, myname said:

That explains nothing. Watch the video. The movement starts out slow then a sudden rapid movement south east. The equipment used to film this phenomenon is irrelevant in this context.

Ok. The sun's apparent motion across the sky is due to the Earth's rotation. That means a sudden change in the speed of the sun's apparent movement would require the Earth's rotation to change suddenly. No such change was observed during the eclipse; it would have been a rather cataclysmic event. So the equipment, and how it was used, is relevant if you want to know why the video looks the way it does.
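
To put a number on it, here is a minimal sketch of the apparent rate that follows from rotation alone. It assumes one full turn (360 degrees) per 24-hour mean solar day and ignores smaller effects; the function names are only illustrative.

```python
# Apparent angular speed of the sun caused by Earth's rotation alone.
# Assumption: one full turn (360 degrees) per 24-hour mean solar day.
DEGREES_PER_TURN = 360.0
HOURS_PER_SOLAR_DAY = 24.0


def apparent_rate_deg_per_hour() -> float:
    """Degrees of apparent motion per hour."""
    return DEGREES_PER_TURN / HOURS_PER_SOLAR_DAY


def apparent_shift_deg(seconds: float) -> float:
    """Degrees of apparent motion during the given number of seconds."""
    return apparent_rate_deg_per_hour() * seconds / 3600.0


if __name__ == "__main__":
    print(apparent_rate_deg_per_hour())  # 15.0 degrees per hour
    print(apparent_shift_deg(60))        # 0.25 degrees per minute
```

The sun's disc is roughly half a degree wide, so at this rate it takes about two minutes to move its own width. Anything much faster in a video has to come from the camera, the processing or the foreground.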


25 minutes ago, Sensei said:

Clouds are moving in the foreground, which gives the impression that the sun is moving..

I agree.

1 hour ago, myname said:

You can clearly see the sun dropping south east

Modern equipment (hardware and software, phones for instance) may have image and video stabilisation. So the impression @Sensei mentions might be enhanced if the hardware/software keeps the clouds stable, so that the sun looks like it is moving quickly.


On 4/3/2024 at 9:40 PM, Lucas Bet said:

But after this explanation is provided, not only we can understand why the speed of light is fixed for every different observer (it is the clock speed of the Brain) and time dilation effects (clocks can get out of synchronicity) — we can also clearly understand why the speed of light is the maximum speed limit in the Universe.

Can you show, mathematically, how you transform between two inertial frames of reference in your conjecture? In particular, highlight any differences or similarities (due to your ideas) when the mathematical equations are applied to brains and clocks* in different inertial frames of reference. Also highlight whether any postulates or assumptions deviate from the mainstream. I know the established physics (Lorentz transformation etc.), so I'm curious about a comparison.

(Your explanations raise many questions regarding the logical consistency of your conjecture; I will return to these issues later.)

*) Your explanations seem to propose that human brains and timekeeping devices do not follow the same physical laws.
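
For reference, this is the mainstream transformation I would compare against. It is the textbook Lorentz transformation for a frame S' moving with velocity v along the x axis of S (standard configuration). The symbols are the usual textbook ones and are not taken from the conjecture.

```latex
\begin{aligned}
x' &= \gamma\,(x - v t), \\
t' &= \gamma\left(t - \frac{v x}{c^{2}}\right), \\
y' &= y, \qquad z' = z, \\
\gamma &= \frac{1}{\sqrt{1 - v^{2}/c^{2}}}.
\end{aligned}
```

In established physics the same equations apply to any clock, whether mechanical, atomic or biological, so a comparison should state explicitly where the conjecture departs from them.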


3 hours ago, Lucas Bet said:

At the same time, the Turing machine model requires the Brain must have an internal clock speed which behaves as the maximum speed for reality: we perceive this effect as the speed of light, and it is the reason why this speed must be constant for every different observer in special relativity.

Can you explain this in the context of special relativity? Especially how the "internal clock" works for observers in relative motion.


2 hours ago, Coxy123 said:

please bring £1000,000 to my house

Done. I bring £1000,000 to your house, zero times.

Maybe you are confusing multiplication with subtraction when you create your examples.

(edit: User banned while I was writing)


1 hour ago, Rian00077 said:

This is the theory about a different space and time definition.

from your document:

"light in A1 is not photons (which cannot exist here), but rather elements of space with rest mass tending to zero (and energy tending to zero)."

It seems completely incompatible with established physics, can you elaborate? For instance, how do your ideas explain the photoelectric effect?
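
For comparison, the established description of the photoelectric effect is Einstein's relation below: light of frequency f arrives in quanta (photons) of energy hf, and an electron leaves the metal only if that energy exceeds the work function. The symbols are the standard textbook ones, not taken from the document.

```latex
E_{k,\max} = h f - \phi
```

Here E_{k,max} is the maximum kinetic energy of the emitted electrons, h is Planck's constant and \phi is the work function of the material. An alternative idea would need to reproduce the threshold frequency f_0 = \phi / h and explain why E_{k,max} does not depend on the intensity of the light.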


15 hours ago, PeterBushMan said:

someone told me that ---

How to change your IP address

Windows 10:

1) Press the Win+R keys together to open the Run dialog box. Type cmd and press Enter.

2) Enter ipconfig /release (make sure to include the space) and hit Enter.

3) Type ipconfig /renew (include the space) and press Enter. Close the prompt to exit.

Why does it not work?

It may depend on what your computer is connected to. Even if you have a dynamic IP address, the device that responds to the ipconfig /renew command does not necessarily hand out a different IP address. Note @swansont's spot-on response; renewing the lease means that the same IP can be reused.

6 hours ago, swansont said:

renew the DHCP (Dynamic Host Configuration Protocol) lease.

Two examples of situations where the same IP is reused (there are others, and a thorough answer would require insight into the details):

- Static lease (DHCP reservation): the IP is reserved for a specific device.

- The server may assign the same IP address because it is the next (or the only one) available.
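
The two cases above can be sketched with a toy model. This is not a real DHCP implementation; the class `ToyDhcpServer`, its methods, the addresses and the client identifiers are all made up for illustration, and a real server's allocation policy may differ.

```python
class ToyDhcpServer:
    """Very simplified address allocator (illustration only, not real DHCP)."""

    def __init__(self, pool, reservations=None):
        self.pool = list(pool)                        # dynamic addresses, in order
        self.reservations = dict(reservations or {})  # client id -> fixed address
        self.leases = {}                              # client id -> leased address

    def request(self, client):
        """Handle a request or renewal from a client and return its address."""
        if client in self.reservations:
            # Case 1: static lease, the address is reserved for this client.
            ip = self.reservations[client]
        elif client in self.leases:
            # Renewal of an existing lease keeps the same address.
            ip = self.leases[client]
        else:
            # Case 2: hand out the first free address in the pool.
            in_use = set(self.leases.values())
            free = [addr for addr in self.pool if addr not in in_use]
            if not free:
                raise RuntimeError("address pool exhausted")
            ip = free[0]
        self.leases[client] = ip
        return ip

    def release(self, client):
        """Forget the client's lease, as after 'ipconfig /release'."""
        self.leases.pop(client, None)


def demo():
    server = ToyDhcpServer(
        pool=["192.168.1.10", "192.168.1.11"],
        reservations={"printer": "192.168.1.50"},
    )
    before = server.request("laptop")
    server.release("laptop")
    after = server.request("laptop")   # first free address is the same one
    reserved = server.request("printer")
    return before, after, reserved


if __name__ == "__main__":
    print(demo())
```

In this sketch the laptop gets the same address after release and renew simply because that address is again the first free one, and the printer always gets its reserved address.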

On 1/27/2024 at 8:18 PM, Falkor said:

I am engineer

Ok!

2 hours ago, Falkor said:

Ok so apparently this is tied with solonoids apparently. What’s weird is I am getting very huge spikes in electricity. I tried this with some water in a glass and this weird wave kept flowing back and forth through the water it kept multiplying and I was just getting huge results with my oscilloscope that I fried the circuitry. I was also doing some math and this 2 to 3 number keeps appearing it also seems to be tied in with some fractal patterning going on. I must be really stupid because there is something happening here. If I am reading the literature correctly this phenomenon is basically some quantum effect that is tied in with general relativity and quantum mechanics. This dual nature is extremely bothersome. What’s worse is my water system I have designed is extremely efficient. I’m getting huge numbers I have never seen before just from adding these vibrations to the liquid. It can’t possibly be that what’s moving is not the object but space itself? That just can’t be, but this issue with paper having a poisson ratio of less than zero is making me believe that there is some knot action going on and that mass creates a fractal pattern that propagates through space time which leads to general relativity and can be quantized as qbits? I must be really stupid because I’m missing something here

As an engineer, perhaps you could consider revisiting and rewriting your explanation, focusing on ensuring that the mix of scientific terms and concepts is presented coherently and aligns with established scientific principles and theories?


Your code does not seem syntactically correct.

12 hours ago, Trurl said:

p = 2564855351; x = 3; Monitor[While[x <= p, If[(Sqrt[p^3/(p*x^2 + x)] - p) < 0.5, Print[x]; Break[];]; x += 2;]; If[x <= p, While[x <= p, If[Divisible[p, x], Print[x]; Break[];]; x += 2;];], x]

First function corrected:

p = 2564855351; x = 3; Monitor[While[x <= p, If[x*(Sqrt[p^3/(p*x^2 + x)]) - p) < 0.5, Print[x]; Break[];]; x += 2;

Same as all my recent descriptions.
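
One quick check that shows the problem with the "corrected" snippet quoted above is to match its brackets. The sketch below is a plain delimiter matcher written in Python, not a Mathematica parser: it ignores strings and comments, and balanced brackets are necessary but not sufficient for valid syntax. The function name is only illustrative.

```python
CLOSERS = {")": "(", "]": "[", "}": "{"}


def first_delimiter_problem(code):
    """Return None if all brackets match, else a description of the first problem."""
    stack = []  # (opening character, position) for every bracket still open
    for pos, ch in enumerate(code):
        if ch in "([{":
            stack.append((ch, pos))
        elif ch in CLOSERS:
            if not stack or stack[-1][0] != CLOSERS[ch]:
                return f"unexpected '{ch}' at position {pos}"
            stack.pop()
    if stack:
        ch, pos = stack[-1]
        return f"unclosed '{ch}' opened at position {pos}"
    return None


ORIGINAL = (
    "p = 2564855351; x = 3; Monitor[While[x <= p, "
    "If[(Sqrt[p^3/(p*x^2 + x)] - p) < 0.5, Print[x]; Break[];]; x += 2;]; "
    "If[x <= p, While[x <= p, If[Divisible[p, x], Print[x]; Break[];]; "
    "x += 2;];], x]"
)
CORRECTED = (
    "p = 2564855351; x = 3; Monitor[While[x <= p, "
    "If[x*(Sqrt[p^3/(p*x^2 + x)]) - p) < 0.5, Print[x]; Break[];]; x += 2;"
)

if __name__ == "__main__":
    print(first_delimiter_problem(ORIGINAL))
    print(first_delimiter_problem(CORRECTED))
```

With these two strings the matcher finds nothing wrong in the first snippet but reports a stray closing parenthesis in the "corrected" one, which in addition never closes its While and Monitor brackets.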


Your descriptions do not match the program code you have posted. Which program are you trying to describe?
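
As one illustration, here is a minimal Python port of the first While loop from the Mathematica snippet quoted in the post above. Which code is meant is an assumption on my part, and the port uses ordinary floating-point arithmetic rather than Mathematica's exact arithmetic.

```python
import math


def first_loop_break(p, threshold=0.5):
    """Port of: While[x <= p, If[(Sqrt[p^3/(p*x^2 + x)] - p) < 0.5, Print[x]; Break[]]; x += 2]

    Returns the x at which the loop breaks, or None if it never does.
    """
    x = 3
    while x <= p:
        # Same expression as in the quoted code: Sqrt[p^3/(p*x^2 + x)] - p
        if math.sqrt(p**3 / (p * x**2 + x)) - p < threshold:
            return x
        x += 2
    return None


if __name__ == "__main__":
    print(first_loop_break(2564855351))  # 3
    print(first_loop_break(7 * 11))      # 3
```

The reason is algebraic: for x >= 3 the square root is smaller than p/x, which is smaller than p, so the difference is negative and the condition is already true on the first pass. The loop therefore stops at x = 3 for any p >= 3, whatever the factors of p are.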


On 1/24/2024 at 9:06 PM, Trurl said:

and display x when it breaks it should be within range of the smaller Prime factor.

What does "within range" mean?

23 hours ago, Trurl said:

That y intercept is where x approaches the value of the smaller Prime factor.

What do you mean by "approaches"?


The question is copied from https://www.iypt.org/problems/, @flyer6 can you be more specific regarding your interest in the competition?


11 hours ago, Trurl said:

The code I posted was generated by ChatGPT. It simplified the equation. You should try it in Mathematica. It is not meant to be efficient but the first function should break (and print) near zero and estimate the smaller factor x.

You may have misunderstood the output from the LLM*. Your claims do not seem to match the code you posted above.

11 hours ago, Trurl said:

If the program from ChatGPT works you should be able to modify the testing steps ( from every odd number to x+=22 or larger)

The one thing that needs checking is how GPT simplified the equation.

What are your results from testing your program?

12 hours ago, Trurl said:

There are no anomalies

Ok, you missed the point.

*) LLM=Large Language Model


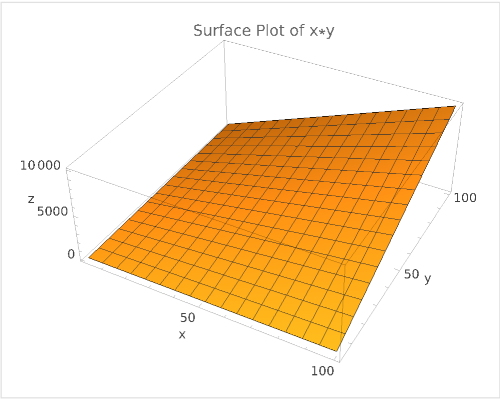

Maybe it helps to view the lack of patterns in more dimensions?

I've generated a surface plot of the function x×y for x and y ranging from 3 to 100. This plot represents a smooth, continuous surface, as expected from the multiplication of real numbers.

Within this plot, every prime and semi-prime number in the given range is represented. Notice that the surface is uniformly smooth. There are no distinct features, patterns, or anomalies that visually distinguish primes or semi-primes from other numbers:

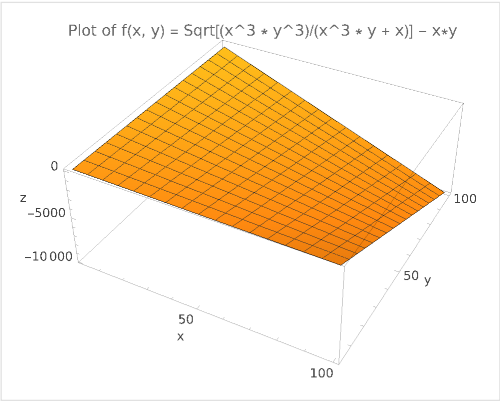

Let's modify the surface plot; the function is based on @Trurl's program code:

This plot is also smooth. Again there are no distinct features, patterns, or anomalies that visually distinguish primes or semi-primes from other numbers.

Using another algebraic function will not help; there are no patterns. Plotting a larger area does not help either; the surface is smooth for any numbers.
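
The same point can be checked numerically without a plot. The sketch below (plain Python, helper names are only illustrative) computes the discrete second differences of x×y on the 3 to 100 grid and compares the grid points where x and y are both prime, so that x×y is a semi-prime, with all other points.

```python
def is_prime(n):
    """Trial-division primality test, sufficient for small n."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True


def product(x, y):
    return x * y


def second_differences(f, x, y):
    """Discrete curvature of f at (x, y) along the x and y directions."""
    dxx = f(x + 1, y) - 2 * f(x, y) + f(x - 1, y)
    dyy = f(x, y + 1) - 2 * f(x, y) + f(x, y - 1)
    return dxx, dyy


def curvature_by_class(lo=3, hi=100):
    """Sets of curvature values seen at semi-prime points and at all other points."""
    semiprime_points, other_points = set(), set()
    for x in range(lo, hi + 1):
        for y in range(lo, hi + 1):
            target = semiprime_points if is_prime(x) and is_prime(y) else other_points
            target.add(second_differences(product, x, y))
    return semiprime_points, other_points


if __name__ == "__main__":
    print(curvature_by_class())
```

Both sets contain only the value (0, 0): the surface bends in exactly the same way at semi-prime points as everywhere else, so there is nothing in its shape to detect.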

20 hours ago, Trurl said:

I will try to factor the largest semi-Prime posted earlier.

Please report your progress so far.


2 hours ago, Paulsrocket said:

Nima Arkani-Hamed at the Max Planck institute for physics in Munich

THE END OF SPACETIME. Be sure to let him know what you disagree with

The video, which delves into quantum mechanics and theoretical physics, does not mention 'photons' at all in the transcript. Can you explain how the video is relevant?

(Note: thanks to machine learning and NLP I did not watch the video)


On 1/8/2024 at 2:19 AM, Trurl said:

Please prove it right by finding large Primes.

You can test using the numbers I provided:

https://www.scienceforums.net/topic/124453-simple-yet-interesting/?do=findComment&comment=1219386

On 1/8/2024 at 2:19 AM, Trurl said:

I know such information is valuable, but if no one attempts to find large Primes with it I don’t know if the program even works.

Unfortunately, finding large primes with your approach does not work; the mathematical reasons why have already been presented.


15 hours ago, AIkonoklazt said:

If you count "there is no such thing as programming without programming" as a law in computational science, then there is still "a scientific law or theorem that makes artificial consciousness impossible"

I do not count that statement ("there is no such thing as programming without programming") as a law in computational science.


On 11/22/2023 at 1:57 PM, dimreepr said:

From your link:

“we know of no fundamental law or principle operating in this universe that forbids the existence of subjective feelings in artifacts designed or evolved by humans.1”

To the best of our knowledge, this claim is valid still today.

Thanks! For some reason I did not initially notice your quote @dimreepr

It provides an answer to my initial question in this topic: Is there a scientific law or theorem that makes artificial consciousness impossible?

6 hours ago, Bjarne-7 said:

but you have to give me the data, and to be able to compare forces, you have to tell me how much force is need to keep the the atom in orbit. So please cooperate.

The LHC primarily accelerates protons (and can also accelerate ions). The concept of "atom in orbit" does not apply to the operations and experiments conducted at the LHC at CERN so I do not know what you are asking.


4 hours ago, Bjarne-7 said:

I prefer that you give an example, but you must know and state how much energy you expect to need to keep an atom in orbit at nearly the speed c. Then I will for comparison calculate how much (little) comparable importance RR has in the worst possible scenario.

Ok. Just show the mathematical equations and we'll insert numbers from publicly available CERN data.

(Note: "atom in orbit" is not related to my question as far as I can tell.)
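
As a sketch of the kind of calculation I mean, established physics gives the bending force on a charge moving perpendicular to a magnetic field as F = qvB, and the momentum on a circular path as p = qBr. The beam energy (7 TeV protons) and dipole field (8.33 T) below are approximate nominal LHC design values quoted from memory; treat them as assumptions to be replaced with numbers from CERN's published data. The speed is taken as c, which is a good approximation at these energies.

```python
E_CHARGE = 1.602176634e-19   # elementary charge in coulombs (exact SI value)
C = 299_792_458.0            # speed of light in m/s (exact SI value)


def bending_force_newton(b_tesla, speed=C, charge=E_CHARGE):
    """Magnetic force F = q * v * B on a charge moving perpendicular to B."""
    return charge * speed * b_tesla


def bending_radius_m(momentum_ev_per_c, b_tesla, charge_units=1):
    """Radius r = p / (q * B), with the momentum given in eV/c.

    Converting p from eV/c to SI multiplies by e / c, so the elementary
    charge cancels and r = p[eV/c] / (charge_units * c * B).
    """
    return momentum_ev_per_c / (charge_units * C * b_tesla)


if __name__ == "__main__":
    # Assumed nominal values, to be checked against CERN data.
    beam_momentum = 7.0e12   # eV/c, i.e. 7 TeV protons
    dipole_field = 8.33      # tesla
    print(bending_force_newton(dipole_field))             # roughly 4e-10 N
    print(bending_radius_m(beam_momentum, dipole_field))  # roughly 2.8 km
```

An alternative idea such as RR would need an equally explicit formula, so that the same numbers can be inserted and the two results compared.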


2 hours ago, Bjarne-7 said:

I am not sure you quite understand the principles for RR and DFA (etc.) -

That is true. Your descriptions need to be more precise. (as @swansont said above)

2 hours ago, Bjarne-7 said:

So you have to ask me a well defined question and also let me know, mass , speed and required energi / force to keep that en orbit... Otherwise we can easy misunderstand each other.

Pick any reasonable example of your liking. Also define what you mean by "orbit" in reference to the circular motion of particles in the LHC. @Bufofrog gave a correct answer above for celestial bodies in orbit, and I do not know if that is what you mean here.


1 hour ago, Bjarne-7 said:

You must be more specific with regard to what you want to know.

Maybe this helps: draw a simple diagram of the LHC and show which forces, according to your theory, cancel. Then we can compare your idea with what happens according to established physics.


1 minute ago, Bjarne-7 said:

What is the problem ?

One example: The original post discusses RR as a fundamental property of space interacting with moving objects, not something that can be easily nullified by operational forces of a machine.


Just now, Bjarne-7 said:

Newtons 2nd law, resulting forces, very simple, RR is cancelled out

That does not seem logically coherent with your earlier statements regarding the nature of RR.


Turning Information into Energy

in Modern and Theoretical Physics

Posted

Did you read what the AI generated? From your document:

Your post looks like a joke about LLM usage. Was that the intention?