Leaderboard

Popular Content

Showing content with the highest reputation on 05/30/20 in all areas

-

I see there are a few creationist posters here from time to time. So here is a very small amount of the evidence for common ancestry with the rest of the apes:

(1) Chromosome Banding Patterns

Here is human chromosome 2, alongside chimp, gorilla and orang-utan 2p and 2q. You can see there that the banding patterns are all pretty much the same. One major difference, of course, is that the other apes have two chromosomes there, whereas humans have only one. However, when we examine the human chromosome in more detail (which you can't from those diagrams), we find telomere-like structures in the centre of the human chromosome, which normally exist only at the ends of chromosomes. Telomeres are a bit like a cellular lifetime counter: a bit is lost on each cellular reproduction (with the exception of sex cells and cancer cells, which repair their telomeres).

So if a telomere is '=' and a centromere is '8' (used here to stand for the bit of the chromosome containing the genes and so on), then the chimp, gorilla and orang-utan 2p and 2q would look like ===888=== and ===888===, but the human 2 looks like ===888====888===, and you can still see this now in humans.

Telomeres are highly conserved sequences, which are essentially the same between all organisms in a group; for example, all vertebrates have TTAGGG repeating over and over. In primates it repeats between 300 and 5000 times. Adjacent to these regions are other regions of repeats called pre-telomeric regions, which are highly variable and vary significantly even within a species, but can be recognised between members of a species and closely related species. In humans there is further evidence for a chromosome fusion: the order of these sequences (in the middle of the chromosome, between the two centromere sections) is a pretelomeric sequence, a telomeric sequence, an inverted telomeric sequence and an inverted pretelomeric sequence. So even these features are conserved. Note that only the 2p centromere functions now.
The centromere of 2q, while still clearly recognisable as a once-functioning centromere, is no longer the point where the two chromatids join during cellular reproduction. This sort of analysis is not limited to chromosome 2, but can be applied to the entire karyotype. The above image is just of humans and chimps.

(2) Endogenous Retroviral Sequences

Retroviruses are a class of viruses that have their genetic material in the form of RNA and consist of groups such as the oncoviruses (e.g. HTLV-1) and lentiviruses (e.g. HIV). Normally DNA is transcribed into RNA before being read in order to produce proteins; retroviruses, however, use reverse transcriptase to copy their own RNA into DNA, which is then integrated into the host organism's own DNA. Like all genetic processes, there is a risk of inaccuracy, and sometimes a retrovirus may become crippled by a mutation during reverse transcription, and hence may not be able to reproduce itself as a normal virus would.

Retroviruses may embed themselves into any cell in the body, and this includes the sex cells (gametes) as well as the normal body (or somatic) cells. If such an insertion (an endogenous retrovirus, or ERV) occurs in a sex cell that goes on to fertilise an egg (or be fertilised by a sperm), then the ERV will be present in every single cell of the new organism, including its sex cells (well, since it will be in one chromosome, initially it will only be in 50% of the sex cells).

Now one of the most important theories within evolution is that of random genetic drift, and this is an element of evolution that was only understood after the discovery of DNA. Genetic drift is a stochastic (statistical definition) process in which a particular allele (version of a gene), or bit of the DNA, will randomly increase and decrease in frequency in the population, provided there is no selection pressure on that particular allele or section of the DNA. Eventually it may become fixed within the population, i.e. present in all members of the population.
This may happen to an ERV which became embedded within one particular individual; via random genetic drift it may become fixed in the whole breeding population. This occurs more rapidly in smaller breeding groups than in large breeding groups.

The next step is the consideration of ancestry. Suppose we have a group A, all of whose members have a particular ERV, which we will call 'E1', and this group splits into two new groups, B and C, perhaps by a river forming in the middle of the group which none of the organisms can cross. Both groups B and C will still have this ERV in all members. Now let us say that a new ERV is introduced into a member of group B and becomes fixed in group B. All members of group B will have this new ERV, which we will call 'E2'. When we look at populations B and C, we see that B has both E1 and E2, and C has only E1. This means that E2 was introduced to population B after B and C became separated. If B further splits into Bi and Bii, and Bii has a new ERV 'E3' fixed within its population, we find that Bi has E1 and E2, Bii has E1, E2 and E3, and population C still only has E1, so we can build up a tree of the order in which these different groups broke apart.

An important point to note is that we should never find a retrovirus shared between, for example, Bii and C alone, since the common ancestral group of Bii and C is also the common ancestral group of Bi: if an ERV becomes fixed in A, then all of its descendants should have the ERV. By examining ERVs, we can therefore look at ancestral links between these populations, and work out the order in which a particular group broke away from another from which retroviruses are present in each.

Here is a chart of ERV distributions in the primates, and the phylogenetic tree constructed from it. The above diagram is from the following paper: Lebedev, Y. B., Belonovitch, O. S., Zybrova, N. V, Khil, P. P., Kurdyukov, S.
G., Vinogradova, T. V., Hunsmann, G., and Sverdlov, E. D. (2000) "Differences in HERV-K LTR insertions in orthologous loci of humans and great apes." Gene 247: 265-277.

Also we have fig 3: Results of the 12 chimeric retrogenes insertional polymorphism study. The chimeras’ integration times were estimated according to the presence/absence of the inserts in genomic DNAs of different primate species. Note that u3-L1;Ap004289 is a polymorphism within the human species: it integrated since the LCA of humans. Ref: Buzdin A, et al. The human genome contains many types of chimeric retrogenes generated through in vivo RNA recombination. Nucleic Acids Res. 2003 Aug 1;31(15):4385-90.

A common creationist objection to the ERV concept is that of multiple insertions, i.e. the idea that a virus might insert itself into the same place in different organisms and become embedded in both. For example, a human might be infected with E1, and this ERV becomes embedded in the human population, and a chimp might become infected with E1 and this also becomes embedded. However, there are multiple problems with this hypothesis.

First and foremost, in a genome that is 6 billion bases long, what are the odds that an ERV will be inserted into the same place? 1 in 6 billion, right? Now, if there are 2 such ERVs, the odds are 1 in 6 billion times 1 in 6 billion for both being inserted into the same places by chance. If there are 3, you must multiply by another 1 in 6 billion. Now, since you have 12 such insertions in humans compared to the common ancestor, you have just passed the creationist number for it having occurred by chance! By creationism's own criterion, their argument is invalid.
The only creationist rebuttal to this is that there are hot spots, where the odds of a virus being inserted are slightly higher than in other places. But there are still a great number of hot spots throughout the genomes, and given the points raised here, there is no reason why multiple infections would result in the same ERVs being inserted in the same locations with the same crippling errors and showing the same pattern of change with time. Again, if there are multiple hot spots and multiple infections, there is no reason that there should not be ERVs that do not match the phylogenetic tree. Yet we see no deviations from the expected inheritance patterns.

Secondly, there is no good reason why this would form the phylogenetic tree that it does. Even if there were a virus that was simultaneously capable of infecting every kind of primate from New World monkeys through to humans, there is no reason to think that this virus would actually infect every available primate and become fixed in every single population. We might well expect several to be missed, i.e. we might see spider monkeys, bonobos, chimps and humans infected, but not gorillas or orang-utans. We do not find these spurious distributions of ERVs.

Thirdly, we just do not find retroviruses that have such a wide species affinity. And again, even if we did, there is no reason that the retroviruses would form the phylogenies that they do.

Fourthly, the retroviruses are crippled, but still identifiable as retroviruses. The retroviruses that we see in different species are crippled in the same way. If the retroviruses were the result of multiple infections, there would be no reason to expect them to be crippled in the same way in different species.

Finally, additional alterations have been made to the ERV sequences over time.
Since the ERVs themselves are not selected for or against, they may be altered by the same kind of genetic drift that caused them to become fixed within the population. We see inheritance of these changes too, and they also match the phylogenetic tree built from the presence of different ERVs. Other phylogenetic trees can be constructed in similar fashion by looking at Alu sequences (short, highly repeated stretches of DNA) and transposons (kind of like internal viruses that only ever exist within the nucleus and copy themselves around the DNA).

(3) Transposons

I will be brief with transposons, since most of what needs to be said has already been said in the ERV section. Transposons are a form of intranuclear parasite; they are sections of the genome that can copy and paste themselves around the rest of the genome. Again, these transposons may become fixed within the population, and form the same sorts of phylogenetic profiles as ERVs. Transposons are, however, completely independent of ERVs and function with a different mechanism (i.e. they do not use reverse transcriptase, they do not have viral coat proteins and they cannot cross cellular boundaries). The only possible mechanism of infection of another organism is via germ line cells: you may infect your children, in other words, but nobody else. In this case there is absolutely no possibility of multiple insertions. The same phylogenetic trees can be constructed from independent analysis of transposons. It is these transposons which are responsible for much of the intergenic DNA and are also used in DNA fingerprinting, since cutting of certain chunks of DNA results in the same patterns for a given individual.1 point
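The branching argument in the ERV section (groups Bi, Bii and C carrying E1, E2 and E3) can be turned into a small consistency check. This is only an illustrative sketch, not a method taken from the cited papers: the presence table is the thought experiment from the post, and the marker name "EX" and the function names are made up for the example. The idea is that, if markers are only ever gained and then inherited, the sets of groups carrying any two markers must be either nested or disjoint.

```python
from itertools import combinations

# Hypothetical presence table from the thought experiment:
# each group maps to the set of ERVs fixed in it.
groups = {
    "Bi": {"E1", "E2"},
    "Bii": {"E1", "E2", "E3"},
    "C": {"E1"},
}

def carriers(table):
    """Map each marker to the set of groups that carry it."""
    out = {}
    for group, markers in table.items():
        for marker in markers:
            out.setdefault(marker, set()).add(group)
    return out

def conflicts(table):
    """Return marker pairs whose carrier sets overlap without being nested."""
    by_marker = carriers(table)
    bad = []
    for a, b in combinations(sorted(by_marker), 2):
        sa, sb = by_marker[a], by_marker[b]
        if sa & sb and not (sa <= sb or sb <= sa):
            bad.append((a, b))
    return bad

# The table from the post fits a single tree, so nothing is flagged.
print(conflicts(groups))

# Add a made-up marker "EX" found only in Bii and C: it clashes with E2,
# which is carried by Bi and Bii but not by C.
odd = {group: set(markers) for group, markers in groups.items()}
odd["Bii"].add("EX")
odd["C"].add("EX")
print(conflicts(odd))
```

The second call flags the pair of E2 and EX, which is the "shared between Bii and C alone" pattern the post says should never be found.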

-

Hello dear friends! Stretching stiffens polymers a lot, making wonder fibres from banal bulk materials. It brings LCP from 10GPa to 170GPa. Highly stretched polyethylene makes the wonder ropes of Dyneema, Spectra and competitors. Stretching by a factor of 3, easy with a polymer, greatly strengthens a strip cut from a polyethylene shopping bag. Companies that stretch metal (for piano wire and others) could adapt to thicker polymer too. Or polymer manufacturers themselves could stretch or extrude the material cold or lukewarm, so that mechanical engineers get stiff, strong bulk polymers, lighter and easier to machine, without fibre reinforcement. The transverse properties may drop. Rolling a polymer in two directions strengthens both, as polyester (Mylar) films show. This would improve plates. Sometimes the azimuthal stiffness matters too for rods. Schrägwalzen (skew rolling; I don't know the exact English term, check the drawings on de.wikipedia) would improve the azimuthal direction, and is easy with a polymer. Combine it with stretching or extrusion. Marc Schaefer, aka Enthalpy1 point

-

Einstein summation has its topological applications. The summation specifically involves the covariant and contravariant terms of each group. The superscripts are the contravariant terms and the subscripts the covariant ones, while a mixed group will have both. The full Kronecker delta is an ideal example to study. Granted, the Levi-Civita symbol adds additional degrees of freedom. PS I tend to think more gauge group than topological, while Studiot for example thinks the latter. (I haven't seen enough of your posts, but I am thinking you're more the latter as well.) @the OP I have zero problems with applying Wilson loops to the SM in its entirety. It is a viable alternative. So I support the OP on this methodology, though myself I am more up to date on canonical treatments as per GFT. That doesn't invalidate other treatments. I fully support you in showing the Lagrangians as per observable vs propagator action, particularly in terms of showing how to apply the OP's model to the QED and QCD applications. (The Higgs can be addressed later.)1 point

-

Even with remarkably simple iterative systems like cellular automata rule 30 it is still unknown whether the central column is 'randomly' distributed - there is a prize for working out the value of the nth central column without having to run all n iterations - or prove that it is not possible.1 point
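For anyone who wants to look at that central column, here is a minimal sketch of the brute-force approach, i.e. running every iteration, which is exactly what the prize asks to avoid. The function name is made up for the example; the update uses the standard Rule 30 formula, new cell = left XOR (centre OR right), starting from a single live cell.

```python
def rule30_center_column(steps):
    """Return the centre-cell values for the first `steps` rows of Rule 30."""
    width = 2 * steps + 1      # wide enough that the pattern never hits the edges
    centre = steps
    cells = [0] * width
    cells[centre] = 1          # start from a single live cell
    column = []
    for _ in range(steps):
        column.append(cells[centre])
        # Pad with a dead cell on each side, then apply Rule 30 to every cell:
        # new = left XOR (centre OR right)
        padded = [0] + cells + [0]
        cells = [
            padded[i - 1] ^ (padded[i] | padded[i + 1])
            for i in range(1, width + 1)
        ]
    return column

print(rule30_center_column(7))  # [1, 1, 0, 1, 1, 1, 0]
```

The cost grows roughly with the square of the number of rows, since each new row is wider than the last.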

-

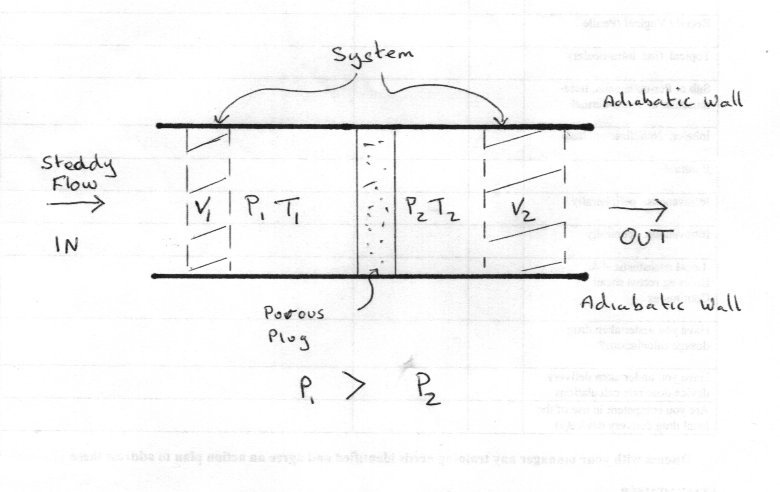

Part 1

The analysis of the Joule-Thomson or Joule-Kelvin flow or throttling is interesting because it demonstrates so many points in a successful thermodynamic analysis: appropriate system description; distinction from similar systems; identification of appropriate variables; correct application of states; and distinction between the fundamental laws and the equations of state and their application.

JT flow is a continuous steady state process. The system is not isolated or necessarily closed, but may be treated as quasi-closed by a suitable choice of variables. It cannot be considered as closed, for instance, if we consider a 'control volume' approach, common in flow processes, since one of our chosen variables (volume) varies. By contrast, the Joule effect is a one-off or one-shot expansion of an isolated system.

So to start the analysis, here is a diagram. 1 mass unit, e.g. 1 mole, of gas within the flow enters the left chamber between adiabatic walls and equilibrates to V1, P1, T1 and E1. Since P1 > P2, the flow takes this 1 mole through the porous plug into the right hand chamber, where it equilibrates to V2, P2, T2 and E2. The 'system' is just this 1 mole of gas, not the whole flow. The system thus passes from state 1 to state 2.

The First Law can thus be applied to the change. Since the process is adiabatic, q = 0 and the work done at each state is PV work.

E2 - E1 = P1V1 - P2V2

since the system expands and does negative work. Rearranging gives

E2 + P2V2 = E1 + P1V1

But E + PV = H, or enthalpy. So the process is one of constant enthalpy, or ΔH = 0. Note this is unlike ΔE, which is not zero. Since ΔE is not zero, P1V1 is not equal to P2V2. More of this later.
Since H is a state variable and ΔH = 0,

[math]dH = 0 = \left( \frac{\partial H}{\partial T} \right)_P dT + \left( \frac{\partial H}{\partial P} \right)_T dP[/math]

[math]\left( \frac{\partial H}{\partial T} \right)_P dT = - \left( \frac{\partial H}{\partial P} \right)_T dP[/math]

[math]\left( \frac{\partial T}{\partial P} \right)_H = - \frac{\left( \frac{\partial H}{\partial P} \right)_T}{\left( \frac{\partial H}{\partial T} \right)_P}[/math]

where [math]\left( \frac{\partial T}{\partial P} \right)_H[/math] is defined as the Joule-Thomson coefficient, μ.

However, we actually want our equation to contain measurable quantities to be useful, so using [math]H = E + PV[/math] again:

[math]dH = PdV + VdP + dE[/math]

[math]0 = TdS - PdV - dE[/math]

Adding the previous two equations:

[math]dH = TdS + VdP[/math]

Dividing by dP at constant temperature:

[math]\left( \frac{\partial H}{\partial P} \right)_T = T\left( \frac{\partial S}{\partial P} \right)_T + V[/math]

But

[math]\left( \frac{\partial S}{\partial P} \right)_T = - \left( \frac{\partial V}{\partial T} \right)_P[/math]

so

[math]V = T\left( \frac{\partial V}{\partial T} \right)_P + \left( \frac{\partial H}{\partial P} \right)_T[/math]

Combining this with our fraction for μ, and noting that [math]\left( \frac{\partial H}{\partial T} \right)_P = C_P[/math] is the heat capacity at constant pressure, we have

[math]\mu = \left( \frac{\partial T}{\partial P} \right)_H = \frac{T\left( \frac{\partial V}{\partial T} \right)_P - V}{C_P}[/math]

which gives a practical form with quantities that can be measured:

[math]\Delta T = \frac{T\left( \frac{\partial V}{\partial T} \right)_P - V}{C_P}\Delta P[/math]

Joule and Thomson found that the change in temperature is proportional to the change in pressure for a range of temperature restricted to the vicinity of T.

The next stage is to introduce the second law and the connection between different equations of state and their meanings or implications.
Edit I think I've ironed out all the latex now but please report errors to the author.1 point
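As a quick sanity check on the expression for μ derived above, here is a short worked example that is not part of the original post. It assumes one mole of ideal gas, so that the equation of state is PV = RT:

[math]V = \frac{RT}{P} \quad \Rightarrow \quad \left( \frac{\partial V}{\partial T} \right)_P = \frac{R}{P}[/math]

[math]T\left( \frac{\partial V}{\partial T} \right)_P - V = \frac{RT}{P} - V = 0[/math]

[math]\mu = \frac{T\left( \frac{\partial V}{\partial T} \right)_P - V}{C_P} = 0[/math]

So under this assumption throttling produces no temperature change, and any measured heating or cooling reflects the departure of the real gas from the ideal equation of state.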

-

Not a good start. Solar and wind are cheaper. That article doesn’t say that energy consumption is being slashed. (you have a bad link, BTW) https://www.sciencedaily.com/releases/2019/07/190712151926.htm It won’t rise if they install green energy. And that last part is easier, now that wind and solar are cheaper sources of electricity. One problem here is that you are basing your “argument” on old data. What was true in the 1980s is not necessarily true today1 point

-

The use of R^2 isn't particularly practical for your first equation. I would recommend using the full ds^2 line element and applying the full four-momentum and four-velocity. Also, the EM field has symmetric and antisymmetric components that involve the Lorentz transforms of the E and B fields of the Maxwell equations. So to state that the EM field is symmetric with spacetime isn't accurate. For example, you need to define the right-hand rule for EM fields in your equations. Spacetime doesn't require the right-hand rule, so you obviously have vector components of the EM field that are not symmetric with the spacetime metric. This will also become important for different observers/detectors at different orientations. A key point is that many of the Maxwell equations employ the cross product for the angular momentum terms, whereas the Minkowski metric employs the inner products of the vectors. This is an obvious asymmetry between the two fields. Not to mention the curl operators of the EM field, i.e. spinors. You will find some of this important for the Pauli exclusion principle as well.1 point

-

</sarcasm> I meant moose. Sorry. </sarcasm> There. I think it's clearer now. Carry on. The elk bit was also sarcasm, but as you are incapable of distinguishing climate prediction from weather forecasting, maths from experiments and who knows what more, I'm giving you some visual help.1 point

-

I think that Turing's contributions were very important, but computers would have been developed anyway, possibly in a more ad-hoc or experimental way in some respects. People were working on computing machines before Turing. One of his major contributions was to the theory of what it is possible to compute (which had been developed by others), by showing that a certain type of computer (a Turing machine) can solve the same problems as any physical computer. And this set of problems is exactly the same as that which can be computed by any method (the Church-Turing thesis). He also did some important work on the application of computers to information theory (algorithmic information theory). These concepts were probably important in the development of cryptography and compression algorithms, by helping to define the limits of computation and compression, and these are important to the development of the Internet.1 point

-

! Moderator Note Kindly lay off the ad hominems. Note that Ken Fabian did not downvote your posts; other folks did it (not that it matters).1 point

-

CAPTCHA is the reverse of the Turing test. https://en.wikipedia.org/wiki/CAPTCHA "Because the test is administered by a computer, in contrast to the standard Turing test that is administered by a human, a CAPTCHA is sometimes described as a reverse Turing test." https://en.wikipedia.org/wiki/Reverse_Turing_test ..and yes, this website has CAPTCHA during registration..1 point

-

Present evidence that this is happening, or it’s a reasonable expectation. You claimed they were “completely ignoring possible benefits” and I debunked that. If they feel that the negatives outweigh the positives, how can you be sure this isn’t a conclusion? How about presenting evidence, instead of moving the goalposts. Disparagement and assertion. No science. A pity you won’t apply your education here. How much warmer is it up in those mountains?1 point

-

Good question, inspires further reading. My answer at this time is "yes" and "maybe not so much" due to: 1: AFAIK pretty much each and every component in the internet infrastructure relies on digital equipment governed by principles of computability going back to Turing. From Turing's perspective I do not see much difference, in principle, between for instance an internet router and a computer connected to the internet. Both router and computer carry out the instructions in their respective programs. So from that point of view, if we say that Turing helped develop computers and contributed to the theory of computation, then the same can be said about the internet. 2: I do not know to what degree Turing worked on early theories in distributed computing. If we choose to say that theorems regarding consensus, fault tolerance, routing, topology and others are what was really required for a successful development of the internet, then "maybe not so much" was contributed by Turing. (I may need to revisit some topics I've not touched for a while.)1 point

-


At one point he worked in/with Bell Labs even. I would still say that others were more instrumental in developing the internet itself.1 point

-

Building machines to decode enigma seems pretty practical to me? However I thank the OP for asking the original question since it resulted in my finding out something I did not know about Alan. His main purely theoretical work, an early prediction of chaotic behaviour, resulted in the discovery of B-Z reaction in chemistry.1 point

-

I know Joigus and Eise said they didn't care to introduce Quantum Uncertainty into the discussion, but that could be the randomizing element for both natural and artificial intelligence.1 point

-

A. Turing was more involved with theoretical computing, rather than practical applications. Hey, maybe every new member should have to pass a Turing Test, to ensure they are not 'bots.1 point

-


My recollection is that the beginnings of the internet idea were developed by ARPA (now DARPA) around 1960 or so. Their version involved connecting various science research computer facilities and included remote access by outsiders.1 point

-

While we don't know it with 100% absolute certainty (experiments have only been able to set an upper limit for photon mass), it is consistent with our understanding of the universe. Relativity, which has passed every test thrown at it so far, says that anything that has zero rest mass must travel at c and only c (in a vacuum). If something has even the slightest bit of rest mass, it can travel at any speed from 0 up to, but not including, c. So, if a photon had any rest mass at all, we should be able to find at least some photons traveling at speeds well below c. So far this has not been the case. So both theory and observation seem to indicate that photons are indeed massless, and unless something crops up to cast doubt on this, it's the way to bet.1 point

-

Yes, sorry. I was neither clear nor thorough. What I meant is: Once a photon is "born" left-handed, it's left-handed forever, as long as it doesn't interact, and conversely. But on the other hand photons can be produced with equal probability as left or right handed. The electron that is "born" in a beta decay, on the other hand, is always left-handed. Once it's in free flight, though, you can see it as right-handed by just changing to a different inertial system, which you can't do to a photon. AAMOF, handedness of photons and electrons must be defined in different ways. For photons it's helicity, while for electrons it's chirality. Only in the v --> c limit do they coincide in some sense. Please, feel free to check me for mistakes, @Sensei. +1. Linearly polarized photons have no definite handedness, for example. Photons and fermions have very different handedness properties. Neutrons will always "spit" lefty electrons when they decay. Electrons, on the other hand, "spit" photons every which handed way. No bias.1 point

-

Of course it helped. Although Richard Hamming is rightly credited with developing the organised structure for digital computing, Alan Turing was in at the beginning of digital machines, so he must have known and used some of this. By organised structure I mean the combination of several digital units into data 'packets'. By digital I mean the use of a few definite values, not a continuous spectrum of values.1 point

-

Marvellous vegetable, the potato. It was one important factor that powered the development of 19th-century America. If you ever visit Ireland, visit the American-Irish Folk museum. Lots on the history of the potato there, including the cooking and nutritional values.1 point

-

Either that, or it is an attempt to gloss over the huge difference in meaning between "conspiracy" and "conspiracy theory". Note: theorising that terrorists conspired to carry out the attack is not a "conspiracy theory". Not all idioms can be analysed in terms of their component parts. A cat burglar is neither someone who steals cats nor a cat who carries out burglaries.1 point

-

When I first saw "Earth's satellite and its first space speed" I immediately assumed that "Earth's satellite" referred to the moon! Then I opened it and saw "the artificial satellite", and my first thought was: "the"? There are many satellites, so it should be "an artificial satellite". In any case, you can calculate the speed at which any satellite, at a given height, must travel to maintain that height. And a "geosynchronous" satellite must travel at a speed that completes a full orbit (circle) in 24 hours.1 point
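The geosynchronous case mentioned above can be worked through numerically. The sketch below is only an illustration: it assumes a circular orbit, Newtonian gravity and the standard value of Earth's gravitational parameter, and the function name is made up for the example. It uses Kepler's third law to get the orbital radius from the period, then the circumference divided by the period to get the speed.

```python
import math

GM_EARTH = 3.986004418e14  # Earth's gravitational parameter in m^3/s^2 (assumed value)

def circular_orbit(period_s):
    """Radius (m) and speed (m/s) of a circular orbit with the given period."""
    # Kepler's third law for a circular orbit: T^2 = 4 pi^2 r^3 / GM
    radius = (GM_EARTH * period_s**2 / (4 * math.pi**2)) ** (1 / 3)
    # One full circle of circumference 2 pi r per period
    speed = 2 * math.pi * radius / period_s
    return radius, speed

radius, speed = circular_orbit(24 * 3600)  # the 24-hour period quoted in the post
print(f"radius from Earth's centre: {radius / 1e3:.0f} km")
print(f"orbital speed: {speed / 1e3:.2f} km/s")
```

With a 24-hour period this comes out at roughly 42,000 km from Earth's centre and a little over 3 km/s. Using the sidereal day (about 86,164 s) instead gives the slightly smaller radius that is usually quoted.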

-

Yes, that sounds totally right. I should have written it down. My bad. But just for calculations, it's simpler to assume radial escape, right? Correcting myself. Radial trajectory most efficient for calculations, as escape velocity doesn't depend on angle. Efficiency is a different matter completely. Thanks a lot, Janus.1 point

-

Orbital velocity can be expressed as the tangential velocity for a circular orbit. The magnitude of the escape velocity is independent of direction. (technically it is escape speed). An escape velocity trajectory will follow a parabola. So, if you are already in an orbit, it is most efficient to achieve escape velocity by boosting your speed in the direction of your current orbital velocity. In fact, the equations for circular orbital velocity and escape velocity only differ from each other by a numerical factor.1 point
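The numerical factor mentioned above is the square root of 2. A minimal sketch, assuming Newtonian gravity around a spherical Earth, with the constants, the 400 km altitude and the function names chosen purely for illustration:

```python
import math

GM_EARTH = 3.986004418e14  # Earth's gravitational parameter in m^3/s^2 (assumed value)
R_EARTH = 6.371e6          # mean Earth radius in m (assumed value)

def circular_speed(r):
    """Speed of a circular orbit at distance r (m) from Earth's centre."""
    return math.sqrt(GM_EARTH / r)

def escape_speed(r):
    """Escape speed at distance r (m) from Earth's centre, in any direction."""
    return math.sqrt(2 * GM_EARTH / r)

r = R_EARTH + 400e3        # example altitude of 400 km
v_c = circular_speed(r)
v_e = escape_speed(r)
print(f"circular: {v_c:.0f} m/s, escape: {v_e:.0f} m/s, ratio: {v_e / v_c:.4f}")
```

Because the ratio is the same at every radius, a craft already in a circular orbit needs to add only about 41% of its current speed, along its direction of motion, to reach escape speed.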

-

If the planet warms, then for every plot of land that becomes infertile due to excessive heat there should be another plot of land that was once infertile due to excessive cold which becomes arable after it warms. The total amount of arable land could increase. Look at Canada and Russia, don't you think much of that frozen land would become arable if the planet warmed? Antarctica will probably become arable after a few hundred years too, a whole new continent to settle! Africans are used to the heat, so it won't bother them, and we can send them more food if they need it. That way the population of Africa can continue growing and natural resources can continue being extracted and shipped here cheaply. As for coastal regions getting flooded, it will be a good opportunity to revitalize the infrastructure around our coasts and renovate while moving stuff away from the coast if necessary. I don't see a problem, it will all work out.-1 points

-

Elk should be able to shift their habitat north, but they can probably adapt to warmer temperatures anyway. Elk look kind of like impalas, so I don't see why they couldn't adapt to live in a warm climate. We can put the penguins in a zoo.-1 points

-

Why are you concerned with Elk? They are classified as a least-concern species by the IUCN. https://en.wikipedia.org/wiki/Elk https://en.wikipedia.org/wiki/Least-concern_species-1 points

-

Look at the attached pictures. Their body structures are so similar that they could take up each other's habitats without issue. The elk has a little bit more insulation, but there is probably enough genetic variance for fur growth in the elk species that shorter-furred elk could be selected for quickly in order to adapt to a hot climate.-1 points

-

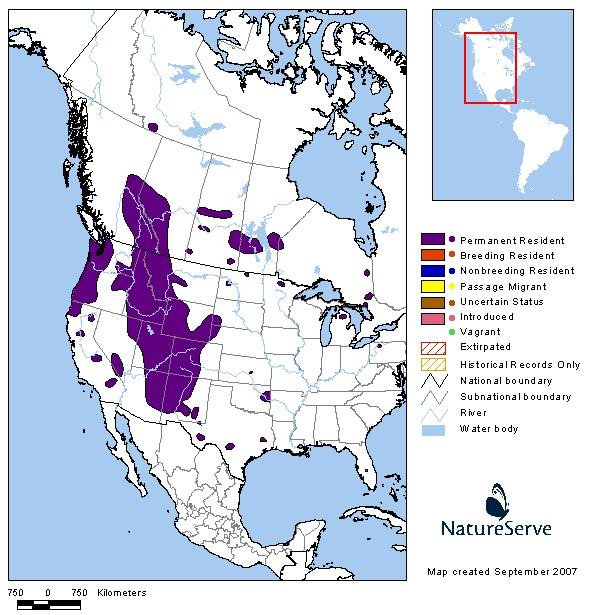

The evidence that elk could live in a warm climate is overwhelming. In 1913, 83 elk from Yellowstone were transplanted to Arizona near Chevelon Lake in the Arizona White Mountains region. Even with harvesting via licensed hunting, today the Arizona elk population has grown to about 35,000. See the picture below of the western hemisphere range for elk.-1 points

-

And how many humans will die when we slash energy consumption by prematurely moving away from fossil fuels before suitable replacements exist? Where do you think the energy to create the civilization around you comes from? It is not moot if those scientists display their bias by unnecessarily stoking fear and focusing on potential negative outcomes. I am not sure if we should call them scientists either. Scientists are supposed to test if the hypotheses they have developed actually work. Have you seen some of the mathematical/statistical models these researchers use? Convoluted, ridiculous and utterly useless. Most of the models that climate scientists develop are so useless that they make Neil Ferguson's epidemiological models look good in comparison. Make no mistake, the goal with these convoluted models is to intimidate people into submission; "We've done the math. You're just too dumb to understand it. Shut up and trust the experts, us." Luckily I have a masters in applied math so I can tell when math is used in a BS fashion. Descartes said it best when he said: "Then as to the Analysis of the ancients and the Algebra of the moderns, besides that they embrace only matters highly abstract, and, to appearance, of no use, the former is so restricted to the consideration of figures, that it can exercise the Understanding only on condition of greatly fatiguing the Imagination; and, in the latter, there is so complete a subjection to certain rules and formulas, that there results an art full of confusion and obscurity calculated to embarrass." You brought up elk. I am addressing what you said. It's OK to be wrong.-1 points

-

I see that I struck so telling a blow, that not only are you running away with your tail between your legs, you also went back through this thread to downvote 5 of my replies. Many of which, ostensibly, you left untouched earlier. Did their contents change? I will reply later with a thorough explanation of why most of the major climate models in use today are practically useless.-2 points