All Activity

- Past hour

Why Lorentz relativity is true and Einstein relativity is false

joigus replied to externo's topic in Speculations

Will you just read what's answered to you??? Otherwise it's a monumental waste of time for everyone involved. (My emphasis.) The returning twin is subject to accelerations, is it not? This is the major bone of contention with people who don't understand the twin paradox. (Or should I say it just goes over their heads?) It is practically a socio-physical theorem that there will always be people who don't understand it. You are living proof of it.

If all spatial dimensions loop back on themselves seamlessly, so that whichever direction you travel in, after n light years you are back where you started, then what does 'centre' even mean? It's definitely finite, with a volume of the order of (n light years)³. But there is no point more remote from the boundary than any other, because there is no boundary. All points within the space are geometrically exactly equivalent.
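
The "no centre" point can be checked numerically. A minimal sketch, assuming a cubic 3-torus of side n (each axis wraps around) and measuring distances with the minimum-image rule; the side n = 10 and the grid resolution are illustrative choices, not values from the post. For both sample starting points, the farthest reachable distance comes out the same, n√3/2:

```python
import itertools
import math

def torus_distance(p, q, n):
    """Shortest distance between points p and q on a cubic 3-torus of side n.

    Each coordinate wraps around, so along each axis the separation is the
    smaller of the direct gap and the gap going the other way round.
    """
    total = 0.0
    for a, b in zip(p, q):
        d = abs(a - b) % n
        d = min(d, n - d)
        total += d * d
    return math.sqrt(total)

def farthest_distance(origin, n, steps=10):
    """Largest torus distance from `origin` to any point on a coarse grid."""
    grid = [i * n / steps for i in range(steps)]
    return max(torus_distance(origin, q, n)
               for q in itertools.product(grid, repeat=3))

n = 10.0  # side length in light years (illustrative value)
print(farthest_distance((0.0, 0.0, 0.0), n))
print(farthest_distance((3.0, 7.0, 5.0), n))
```

Changing the origin does not change the answer, which is the numerical counterpart of "all points are geometrically equivalent".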

Here are wave simulators for Lorentz transformations: https://ondes--relativite-info.translate.goog/AlainCabala/telechar/download.html?_x_tr_sch=http&_x_tr_sl=fr&_x_tr_tl=en&_x_tr_hl=fr&_x_tr_pto=wapp

I am thankful to anybody who finds time to read and comment.

Why Lorentz relativity is true and Einstein relativity is false

externo replied to externo's topic in Speculations

You cannot prove that it remains invariant; on the other hand, I proved in my first message that it cannot remain invariant. When an object accelerates, it necessarily changes speed relative to light, which invalidates Einstein.

From what I know, an ecosystem has producers and consumers. The producers are the plants, which take in sunlight to carry out photosynthesis and grow. That provides a food source for the consumers: the animals that eat the plants and the animals that eat other animals. So the food chain starts with plants, then herbivores, then predators, and so forth. However, the plants are always the producers and the animals, whether herbivores, carnivores, or omnivores, are always the consumers. Still, I would think there are some cases in which a life form can be both a producer and a consumer. Some plants, in addition to using photosynthesis, also feed on other life forms as predators; perhaps the best-known example is the Venus flytrap. There are other plants that also eat insects, so I would think they are both producers and consumers. Is that correct?

- Today

A surface is not a volume... a finite volume has a center. If I agree to extend my thinking from two dimensions to three (not four, which is a wrong interpretation of our Universe), then you should admit that Zeno's paradox is true: Achilles and the Tortoise. In a race, the fastest runner can never overtake the slowest, because the pursuer must first reach the point where the pursued started, so the slowest must always have the advantage.

Not if you want mainstream answers. There is no ether in mainstream physics.

Turning Information into Energy

Ghideon replied to Sora Tōgo's topic in Modern and Theoretical Physics

Did you read what the AI generated? From your document: Your post looks like a joke about LLM usage. Was that the intention?

Thank you so much, externo. This helps a lot.

WHO: Zoonotic Animal Pandemic (bird flu)

Wigberto Marciaga replied to Wigberto Marciaga's topic in Science News

It seems you thought I was comparing it to the coronavirus in that respect. What I was saying is that there is a pandemic that puts humans at risk, not that it was identical to the coronavirus. Maybe I didn't present the idea well. But it seems to me that this zoonotic pandemic is not something that happens every weekend either. I suppose it is because of the risk of human-to-human spread that WHO representatives have said they are very concerned. Blessings.

Why Lorentz relativity is true and Einstein relativity is false

Mordred replied to externo's topic in Speculations

The speed of light remains invariant for all observers; that is precisely what invariant means. The confusion is on your end.

Time dilation occurs in a propagating medium when confined standing waves are set in motion. Lorentz transformations are classical wave physics. Here are references: https://arxiv.org/pdf/1401.4356.pdf https://arxiv.org/abs/1401.4534 https://web.archive.org/web/20120228112717/http://glafreniere.com/sa_Lorentz.htm So the Lorentz transformations tell us that matter is made up of standing waves of ether. The electron is probably a standing wave, according to Milo Wolff's model.

Why Lorentz relativity is true and Einstein relativity is false

externo replied to externo's topic in Speculations

In a light clock, the speed of light is invariant with respect to space, but certainly not with respect to the light clock. You are confusing the invariance of the speed of light with respect to space or ether, which is Lorentz's postulate, with the invariance of the speed of light with respect to all inertial frames, which is Einstein's.
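
The ether-frame reading of a light clock can be made concrete with the classical round-trip calculation used for the Michelson–Morley arms: a clock of arm length L moving at speed v through a medium in which light travels at c. A minimal sketch, assuming units with c = 1 and L = 1 and an illustrative v = 0.6c. Without contraction, the longitudinal round trip is γ²·2L/c while the transverse one is γ·2L/c; contracting the longitudinal arm by 1/γ makes both equal γ·2L/c, which is why the measured tick does not reveal v:

```python
import math

def gamma(v, c=1.0):
    """Lorentz factor 1/sqrt(1 - v^2/c^2)."""
    return 1.0 / math.sqrt(1.0 - (v / c) ** 2)

def transverse_round_trip(L, v, c=1.0):
    # Seen from the medium, light must aim "ahead", so its speed across the arm is sqrt(c^2 - v^2).
    return 2.0 * L / math.sqrt(c * c - v * v)

def longitudinal_round_trip(L, v, c=1.0):
    # Seen from the medium, the closing speed is c - v on the way out and c + v on the way back.
    return L / (c - v) + L / (c + v)

L, v = 1.0, 0.6
g = gamma(v)
print(transverse_round_trip(L, v))        # gamma * 2L/c
print(longitudinal_round_trip(L, v))      # gamma^2 * 2L/c if the arm is not contracted
print(longitudinal_round_trip(L / g, v))  # gamma * 2L/c once the arm is contracted by 1/gamma
```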

That's interesting. I had always understood that magnetic fields have energy, for example the energy stored in an energised electromagnet. If they do not, where does the energy come from when an object moves towards another under the influence of magnetic attraction? And you yourself say a magnetic field has an energy density.

Why Lorentz relativity is true and Einstein relativity is false

Bufofrog replied to externo's topic in Speculations

The whole point of the light clock is that the speed of light doesn't change. You don't seem to have much of an understanding of relativity. You should listen to the people on this forum; you will learn a lot.

Just FYI, the energy density of Earth’s magnetic field is about a millijoule per cubic meter. Scale up as necessary for a stronger magnet. The energy for doing things with magnets is not contained in the magnetic field. https://brainly.com/question/17055580
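
The "about a millijoule per cubic meter" figure follows from the standard vacuum energy density u = B²/(2μ₀). A minimal check, assuming a typical surface field of 50 μT (Earth's field is roughly 25 to 65 μT at the surface) and, for the "scale up" comparison, an assumed 1 T field such as one might find near a strong magnet:

```python
import math

# Vacuum permeability 4*pi*1e-7 T*m/A (the pre-2019 exact value; the current
# SI value agrees with it to better than 1 part in 1e9).
MU_0 = 4e-7 * math.pi

def magnetic_energy_density(b_tesla):
    """Energy density u = B^2 / (2*mu_0), in J/m^3, for a field of b_tesla in vacuum."""
    return b_tesla ** 2 / (2.0 * MU_0)

earth_field = 50e-6   # assumed typical surface field, in tesla
strong_magnet = 1.0   # assumed strong-magnet field, in tesla
print(f"Earth: {magnetic_energy_density(earth_field) * 1e3:.2f} mJ/m^3")
print(f"1 T:   {magnetic_energy_density(strong_magnet) / 1e3:.0f} kJ/m^3")
```

Because u scales as B², going from 50 μT to 1 T raises the energy density by a factor of 4×10⁸.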

Turning Information into Energy

exchemist replied to Sora Tōgo's topic in Modern and Theoretical Physics

Information is related to entropy, but is not energy and can’t be “turned into” it. You don’t know what you are talking about.

Why Lorentz relativity is true and Einstein relativity is false

swansont replied to externo's topic in Speculations

You need to read more carefully. I specified inertial frames. There are a number of explanations, easily found on the web, of how a light clock would work, based on an invariant c.

Why Lorentz relativity is true and Einstein relativity is false

Mordred replied to externo's topic in Speculations

Precisely: time is not absolute. If that's what you believe, you need to catch up with modern research.

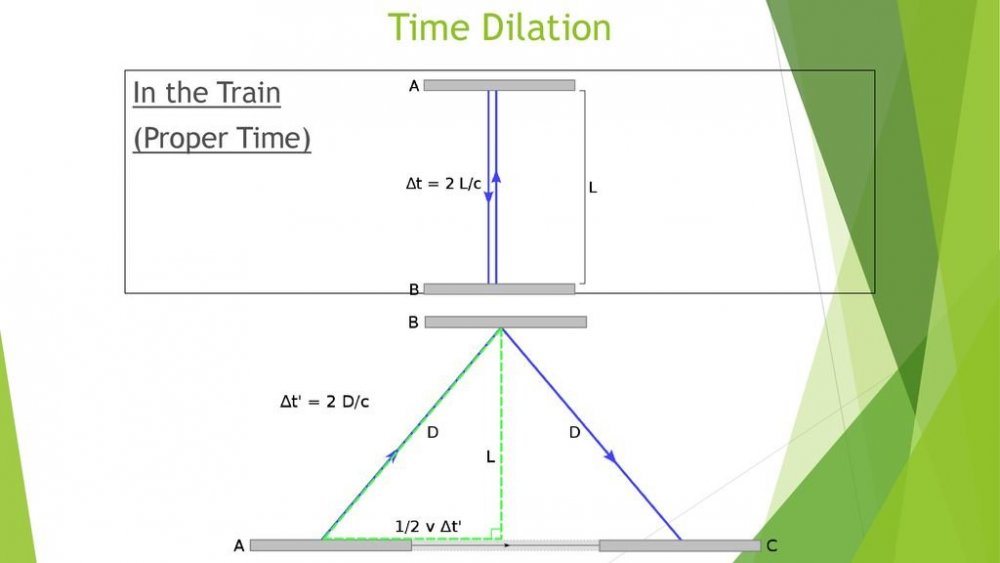

Hi, could this be a simpler way to calculate time in the light clock time dilation experiment? In the lower picture, the side of the triangle ½·v·Δt′ is actually 0.5 s of time, for speeds v from 0 to c. I will repeat this: the time t_v = 0.5 s for any speed from 0 to 3×10⁸ m/s. The side L of the left triangle in the bottom picture corresponds to Δt, and this time is easy to calculate: Δt = 2L/c. To calculate Δt′: (Δt′)² = (0.5 s)² + (Δt)². Is this a simpler way to calculate time dilation for the light clock experiment? And is there time dilation at all?
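
For comparison with the construction in the question, here is the textbook light-clock relation. In the clock's rest frame one tick is Δt = 2L/c. In a frame where the clock moves at speed v, the light follows the hypotenuse, so (cΔt′/2)² = L² + (vΔt′/2)², which gives Δt′ = Δt/√(1 − v²/c²). In this relation the horizontal leg vΔt′/2 is a length that depends on v. A short sketch, with an illustrative mirror separation of L = 1 m (an assumption, not a value from the post):

```python
import math

C = 299_792_458.0  # speed of light in m/s

def rest_tick(L):
    """One tick of a light clock with mirror separation L (metres), in its rest frame: 2L/c."""
    return 2.0 * L / C

def moving_tick(L, v):
    """Tick duration in a frame where the clock moves at speed v, perpendicular to the light path.

    Solves (c*dt'/2)^2 = L^2 + (v*dt'/2)^2 for dt'.
    """
    return rest_tick(L) / math.sqrt(1.0 - (v / C) ** 2)

L = 1.0  # mirror separation in metres (illustrative)
for frac in (0.0, 0.5, 0.8, 0.99):
    v = frac * C
    dt, dt_moving = rest_tick(L), moving_tick(L, v)
    half_leg = v * dt_moving / 2.0  # horizontal leg of the triangle, in metres
    # The light path must satisfy Pythagoras in the frame where the clock moves.
    assert math.isclose((C * dt_moving / 2.0) ** 2, L ** 2 + half_leg ** 2)
    print(f"v = {frac:.2f}c  dt = {dt:.3e} s  dt' = {dt_moving:.3e} s  leg = {half_leg:.3e} m")
```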

Why Lorentz relativity is true and Einstein relativity is false

externo replied to externo's topic in Speculations

If time dilation were a relative effect, it would not be absolute and the twin would not return younger. If the speed of light does not change relative to a moving object, how does a light clock work? It is the measurement of the speed of light that does not vary, not the speed itself. Meters and measuring standards are transformed in such a way that they always measure the same round-trip speed.
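
The "returns younger" outcome discussed here is the standard proper-time result. A minimal sketch, assuming an idealised trip at constant speed v out and back, measured in the frame where the stay-at-home twin is at rest, with the turnaround treated as instantaneous (the acceleration phase is ignored); the 5-year legs at 0.8c are hypothetical numbers:

```python
import math

def proper_time(coordinate_time, v, c=1.0):
    """Time elapsed on a clock moving at constant speed v during `coordinate_time`
    measured in the stay-at-home frame."""
    return coordinate_time * math.sqrt(1.0 - (v / c) ** 2)

# Hypothetical trip: 5 years out and 5 years back at 0.8c, in Earth-frame years.
leg_years, v = 5.0, 0.8
earth_twin = 2 * leg_years
travelling_twin = 2 * proper_time(leg_years, v)
print(earth_twin, travelling_twin)
```

With these numbers the travelling twin ages about 6 years while the Earth twin ages 10.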

Why Lorentz relativity is true and Einstein relativity is false

Mordred replied to externo's topic in Speculations

No, I'm not. I know precisely how SR and GR work, including the related math. I use it all the time as a professional physicist. Here is a challenge for you: describe a point between two observers in different reference frames where simultaneity can be said to occur. Then add a third observer.
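
The challenge turns on the relativity of simultaneity. A minimal illustration using the standard Lorentz transformation t′ = γ(t − vx/c²) in units where c = 1; the observers A, B, C, their velocities, and the two events (simultaneous for A, one light-second apart) are hypothetical choices. A sees the events as simultaneous, B sees the second one first, and C sees it second:

```python
import math

def lorentz_time(t, x, v, c=1.0):
    """Time coordinate of event (t, x) in a frame moving at velocity v along x."""
    gamma = 1.0 / math.sqrt(1.0 - (v / c) ** 2)
    return gamma * (t - v * x / c ** 2)

# Two events that are simultaneous (t = 0) for observer A, one light-second apart.
events = [(0.0, 0.0), (0.0, 1.0)]
for name, v in (("A", 0.0), ("B", 0.5), ("C", -0.5)):
    t1, t2 = (lorentz_time(t, x, v) for t, x in events)
    print(f"observer {name}: t2 - t1 = {t2 - t1:+.3f} s")
```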

WHO: Zoonotic Animal Pandemic (bird flu)

CharonY replied to Wigberto Marciaga's topic in Science News

Yes, of course, zoonotic diseases are an ongoing concern. COVID-19 was one. It is just not a pandemic the way COVID-19 is, as the OP suggested.

Why Lorentz relativity is true and Einstein relativity is false

externo replied to externo's topic in Speculations

How do you know that the speed of light is invariant? This is an Einsteinian postulate, not a physical reality. https://en.wikipedia.org/wiki/One-way_speed_of_light So this convention has nothing to do with experimentally verifiable predictions. I have demonstrated that this convention leads to a physical contradiction.

This has been shown; for example, walking droplets in oil baths obey Lorentz transformations while moving. Lorentz transformations are proof that matter consists of moving standing waves of ether.

c is invariant, but the speed of light relative to moving objects is not. In the absence of gravitation, the speed of light is invariant with respect to ether or space.

The Doppler effect comes from relative speed and is generated during the acceleration period. If you don't accelerate, you can't move. So you say yourself that the space twin changes its velocity and produces a Doppler effect; so if it is the one moving, it is not the Earth that is moving, and there is no physical symmetry.

Matter waves, gravitational waves, any ether waves.

What you say there is Einstein's interpretation. In this interpretation, lengths contract because simultaneity physically changes. If simultaneity does not change physically, no length contraction is possible. But an object that accelerates has no influence on outer space; it therefore cannot change the simultaneity of outer space. It can only change its own simultaneity, that is to say, it physically transforms itself because the speed of light changes relative to it and it has to adapt. So outer space has not changed in simultaneity; there is a simultaneity of outer space, and it is a privileged frame of reference.

No, so there is no spacetime in the Minkowski sense. But there is quaternionic spacetime, where time is the scalar dimension of space, as Hamilton thought when creating quaternions. https://en.wikipedia.org/wiki/Algebra_of_physical_space

Einstein's interpretation is based on a literal interpretation of the equations and on the physical existence of Minkowski spacetime, or the block universe. But time is not a vector dimension.
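
The one-way speed of light article linked above describes the convention through Reichenbach's synchronisation parameter ε (0 < ε < 1): the outbound and return speeds are c/(2ε) and c/(2(1 − ε)), with ε = 1/2 corresponding to Einstein synchronisation. A minimal sketch, assuming units with c = 1 and a unit one-way distance, showing that the measurable round-trip speed comes out as c for every choice of ε:

```python
def one_way_speeds(epsilon, c=1.0):
    """Outbound and return light speeds for Reichenbach synchronisation parameter epsilon."""
    if not 0.0 < epsilon < 1.0:
        raise ValueError("epsilon must lie strictly between 0 and 1")
    return c / (2.0 * epsilon), c / (2.0 * (1.0 - epsilon))

def round_trip_speed(epsilon, distance=1.0, c=1.0):
    """Average speed over a there-and-back path of total length 2 * distance."""
    out_speed, back_speed = one_way_speeds(epsilon, c)
    total_time = distance / out_speed + distance / back_speed
    return 2.0 * distance / total_time

for eps in (0.5, 0.3, 0.9):
    print(eps, one_way_speeds(eps), round_trip_speed(eps))
```

The one-way speeds depend on ε, but the out-and-back times always sum to 2·distance/c, so the round-trip speed does not.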